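To help us respond efficiently, please provide the following information when asking a question. Clearly described questions are answered first.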

- [TiDB version]: 5.7.25-TiDB-v4.0.0

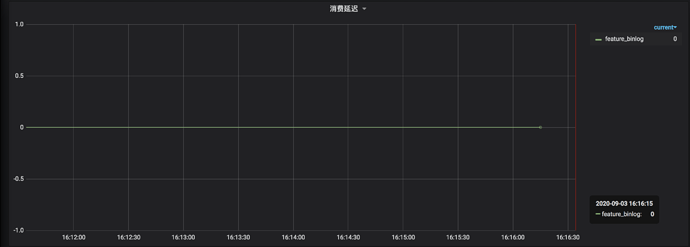

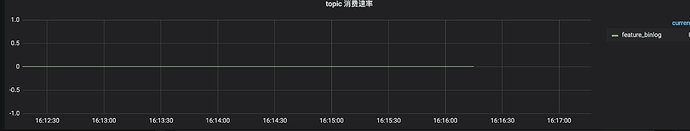

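- [Problem description]: As shown below, is my binlog actually enabled? The documentation says a value of "ON" means it is enabled, so seeing 0 here confuses me.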

    MySQL [feature]> show variables like "log_bin";
    +---------------+-------+
    | Variable_name | Value |
    +---------------+-------+
    | log_bin       | 0     |
    +---------------+-------+
    1 row in set (0.02 sec)

    MySQL [feature]> select @@version;
    +--------------------+
    | @@version          |
    +--------------------+
    | 5.7.25-TiDB-v4.0.0 |
    +--------------------+
    1 row in set (0.00 sec)

    MySQL [feature]> show pump status;
    +-------------------+-------------------+--------+--------------------+---------------------+
    | NodeID            | Address           | State  | Max_Commit_Ts      | Update_Time         |
    +-------------------+-------------------+--------+--------------------+---------------------+
    | 10.16.16.134:8250 | 10.16.16.134:8250 | online | 419198173690724353 | 2020-09-03 14:22:07 |
    | 10.16.16.49:8250  | 10.16.16.49:8250  | online | 419198173087793153 | 2020-09-03 14:22:07 |
    | 10.16.16.131:8250 | 10.16.16.131:8250 | online | 419198173743153153 | 2020-09-03 14:22:07 |
    +-------------------+-------------------+--------+--------------------+---------------------+
    3 rows in set (0.00 sec)

    MySQL [feature]> show drainer status;
    +------------------+------------------+--------+--------------------+---------------------+
    | NodeID           | Address          | State  | Max_Commit_Ts      | Update_Time         |
    +------------------+------------------+--------+--------------------+---------------------+
    | 10.16.16.49:8249 | 10.16.16.49:8249 | online | 419198175316017154 | 2020-09-03 14:22:17 |
    +------------------+------------------+--------+--------------------+---------------------+
    1 row in set (0.01 sec)