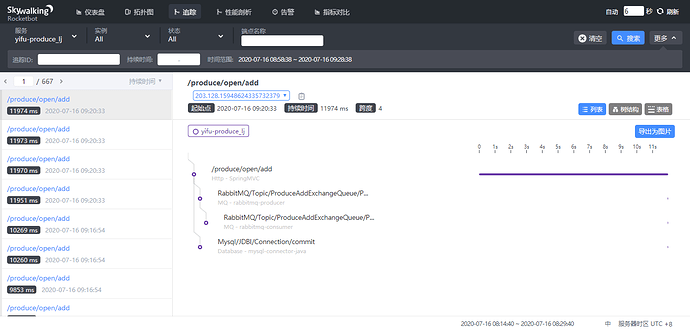


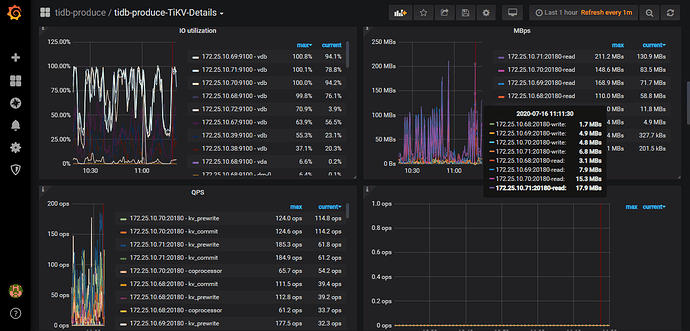

Please share the RocksDB KV key flow and read flow monitoring panels so we can confirm whether heavy read traffic is the cause.


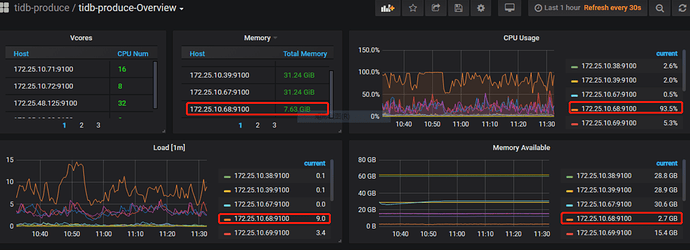

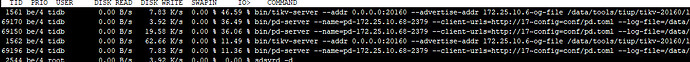

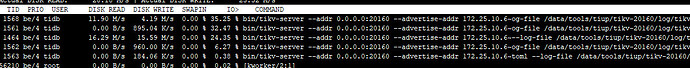

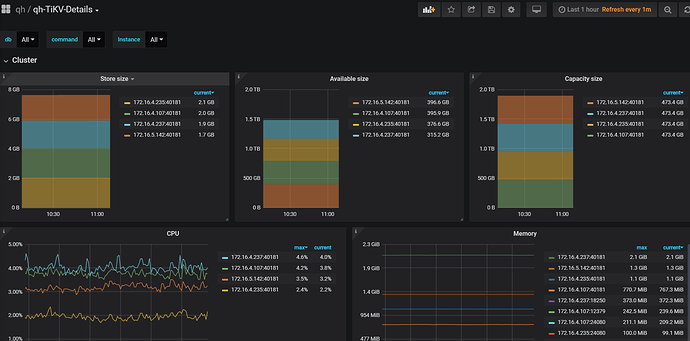

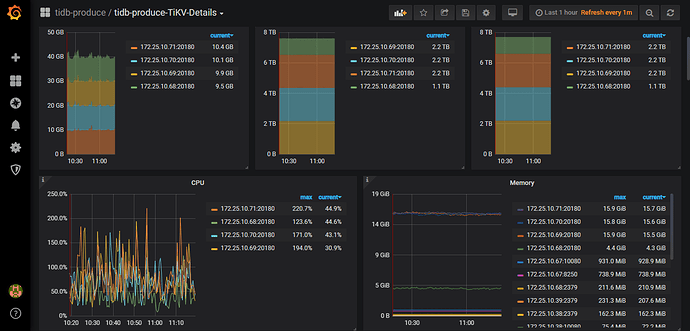

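The monitoring shows that RocksDB CPU is very high, which suggests a disk problem. Is TiKV the only service running on this server, or are other services deployed alongside it? Please check the server's resources and see which service is using most of them.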


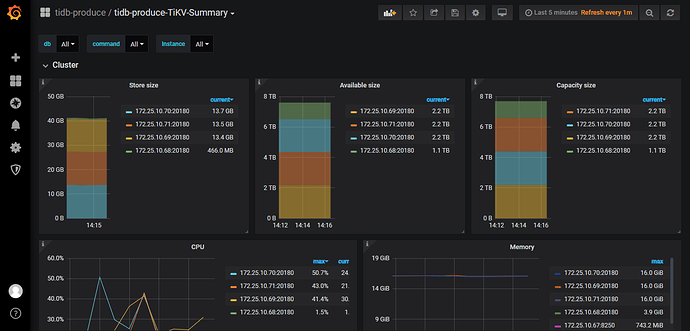

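Please check the current disk usage on the TiKV servers. It can affect PD scheduling and drive IO up.

The 10.68 server seems to be configured differently from the other servers, or rather much lower, and its CPU usage has also been consistently high.

68 has both KV and PD installed, and its configuration is half that of the other KV machines.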

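| Device | rrqm/s | wrqm/s | r/s | w/s | rkB/s | wkB/s | avgrq-sz | avgqu-sz | await | r_await | w_await | svctm | %util |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| vda | 0.00 | 0.00 | 0.00 | 2.00 | 0.00 | 8.00 | 8.00 | 0.00 | 0.50 | 0.00 | 0.50 | 0.50 | 0.10 |
| vdb | 0.00 | 4.00 | 0.00 | 79.00 | 0.00 | 24336.50 | 616.11 | 14.18 | 91.18 | 0.00 | 91.18 | 9.62 | 76.00 |
| dm-0 | 0.00 | 0.00 | 0.00 | 2.00 | 0.00 | 8.00 | 8.00 | 0.00 | 0.50 | 0.00 | 0.50 | 0.50 | 0.10 |
| dm-1 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 |
| dm-2 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 |

| Device | rrqm/s | wrqm/s | r/s | w/s | rkB/s | wkB/s | avgrq-sz | avgqu-sz | await | r_await | w_await | svctm | %util |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| vda | 0.00 | 0.00 | 0.00 | 1.00 | 0.00 | 4.00 | 8.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 |
| vdb | 0.00 | 1.00 | 1.00 | 242.00 | 32.00 | 48396.00 | 398.58 | 18.91 | 133.95 | 77.00 | 134.19 | 3.69 | 89.70 |
| dm-0 | 0.00 | 0.00 | 0.00 | 1.00 | 0.00 | 4.00 | 8.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 |
| dm-1 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 |
| dm-2 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 |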
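For anyone who wants to scan `iostat -x` samples like the ones above without reading every column, here is a minimal Python sketch. It assumes the 13-column layout shown in this thread. The function names and the thresholds (70 for `%util`, 50 ms for `await`) are illustrative assumptions, not TiDB or sysstat recommendations.

```python
# Column names after the device name, in the order shown in this thread.
IOSTAT_COLUMNS = [
    "rrqm/s", "wrqm/s", "r/s", "w/s", "rkB/s", "wkB/s", "avgrq-sz",
    "avgqu-sz", "await", "r_await", "w_await", "svctm", "%util",
]


def parse_iostat_rows(text):
    """Turn iostat -x device lines into dicts; header and other lines are skipped."""
    rows = []
    for line in text.splitlines():
        # Tolerate rows pasted as a pipe-delimited table as well as raw output.
        parts = line.replace("|", " ").split()
        if len(parts) != len(IOSTAT_COLUMNS) + 1:
            continue
        try:
            values = [float(p) for p in parts[1:]]
        except ValueError:
            continue  # header row or table separator, not a data row
        rows.append({"device": parts[0], **dict(zip(IOSTAT_COLUMNS, values))})
    return rows


def busy_devices(rows, util_threshold=70.0, await_threshold=50.0):
    """Return rows whose %util and await both reach the (assumed) thresholds."""
    return [
        r for r in rows
        if r["%util"] >= util_threshold and r["await"] >= await_threshold
    ]


# Two rows copied from the first sample in this thread.
SAMPLE = """\
Device: rrqm/s wrqm/s r/s w/s rkB/s wkB/s avgrq-sz avgqu-sz await r_await w_await svctm %util
vda 0.00 0.00 0.00 2.00 0.00 8.00 8.00 0.00 0.50 0.00 0.50 0.50 0.10
vdb 0.00 4.00 0.00 79.00 0.00 24336.50 616.11 14.18 91.18 0.00 91.18 9.62 76.00
"""

if __name__ == "__main__":
    for row in busy_devices(parse_iostat_rows(SAMPLE)):
        print(row["device"], row["%util"], row["await"])
```

With these assumed thresholds only `vdb`, the data disk in the samples, is flagged.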

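Deploying PD and TiKV on the same server is not recommended, because both services place heavy demands on the disk. You currently have 4 TiKV nodes, so you can scale that one in and keep three. Please make sure the remaining three TiKV servers have identical configurations. PD can be deployed on the same server as TiDB, or it can stay where it is.

Please also share the tidb-detail cluster page.

The other three KV machines have the same configuration. Are you sure that scaling in that KV will help, or are you not sure? This is a production environment, so we don't dare change things casually.

My impression is that the actual IO throughput is much smaller than what iostat shows. Does TiDB perform a lot of other IO operations?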

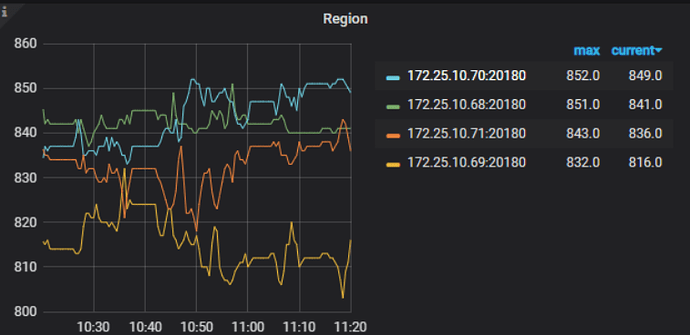

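From what we can see, region scheduling is still very frequent, which is related to 68's disk capacity. 68's memory is also low, which may make problems more likely.

We recommend scaling in and keeping the TiKV nodes' configurations identical; otherwise the weakest node becomes the bottleneck for the whole cluster (the barrel effect).

Also, you are currently using HDDs, whose IO performance is not great. On 68, PD and TiKV are co-located as well, so the cluster layout is not very sound.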

hi,

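A TiKV that is being scaled in goes through the states Up -> Offline -> Tombstone (you can check this with pd-ctl store), and all of its regions and leaders are migrated away. This process takes time.

PS: please share the output of pd-ctl store / member.

Could you write out the commands in detail? I'm not very familiar with these commands yet.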

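Hello,

The form of the command depends on how the cluster was deployed. pd-ctl is also an important tool for operating TiDB, and you can learn it from the link below.

tidb-ansible deployment: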

tidb-ansible/resources/bin/pd-ctl -u http://pdip:pdport store

tidb-ansible/resources/bin/pd-ctl -u http://pdip:pdport member

tiup deployment:

tiup ctl pd -u http://pdip:pdport member

tiup ctl pd -u http://pdip:pdport store

Starting component ctl: /root/.tiup/components/ctl/v4.0.1/ctl pd -u http://172.25.10.68:2379 member / store

    {
      "header": {
        "cluster_id": 6837284552608463547
      },
      "members": [
        {
          "name": "pd-172.25.10.39-2379",
          "member_id": 2581109303334301696,
          "peer_urls": [
            "http://172.25.10.39:2380"
          ],
          "client_urls": [
            "http://172.25.10.39:2379"
          ],
          "deploy_path": "/data/tools/tiup/pd-2379/bin",
          "binary_version": "v4.0.0",
          "git_hash": "56d4c3d2237f5bf6fb11a794731ed1d95c8020c2"
        },
        {
          "name": "pd-172.25.10.68-2379",
          "member_id": 10169717612717421024,
          "peer_urls": [
            "http://172.25.10.68:2380"
          ],
          "client_urls": [
            "http://172.25.10.68:2379"
          ],
          "deploy_path": "/data/tools/tiup/pd-2379/bin",
          "binary_version": "v4.0.0",
          "git_hash": "56d4c3d2237f5bf6fb11a794731ed1d95c8020c2"
        },
        {
          "name": "pd-172.25.10.38-2379",
          "member_id": 11045507000393770663,
          "peer_urls": [
            "http://172.25.10.38:2380"
          ],
          "client_urls": [
            "http://172.25.10.38:2379"
          ],
          "deploy_path": "/data/tools/tiup/pd-2379/bin",
          "binary_version": "v4.0.0",
          "git_hash": "56d4c3d2237f5bf6fb11a794731ed1d95c8020c2"
        }
      ],
      "leader": {
        "name": "pd-172.25.10.39-2379",
        "member_id": 2581109303334301696,
        "peer_urls": [
          "http://172.25.10.39:2380"
        ],
        "client_urls": [
          "http://172.25.10.39:2379"
        ]
      },
      "etcd_leader": {
        "name": "pd-172.25.10.39-2379",
        "member_id": 2581109303334301696,
        "peer_urls": [
          "http://172.25.10.39:2380"
        ],
        "client_urls": [
          "http://172.25.10.39:2379"
        ],
        "deploy_path": "/data/tools/tiup/pd-2379/bin",
        "binary_version": "v4.0.0",
        "git_hash": "56d4c3d2237f5bf6fb11a794731ed1d95c8020c2"
      }
    }

    {
      "count": 5,
      "stores": [
        {
          "store": {
            "id": 130237,
            "address": "172.25.10.68:20160",
            "state": 1,
            "version": "4.0.0",
            "status_address": "172.25.10.68:20180",
            "git_hash": "198a2cea01734ce8f46d55a29708f123f9133944",
            "start_timestamp": 1593765636,
            "deploy_path": "/data/tools/tiup/tikv-20160/bin",
            "last_heartbeat": 1594973274556563650,
            "state_name": "Offline"
          },
          "status": {
            "capacity": "1023GiB",
            "available": "1011GiB",
            "used_size": "442.3MiB",
            "leader_count": 0,
            "leader_weight": 1,
            "leader_score": 0,
            "leader_size": 0,
            "region_count": 33,
            "region_weight": 1,
            "region_score": 2724,
            "region_size": 2724,
            "start_ts": "2020-07-03T16:40:36+08:00",
            "last_heartbeat_ts": "2020-07-17T16:07:54.55656365+08:00",
            "uptime": "335h27m18.55656365s"
          }
        },
        {
          "store": {
            "id": 2,
            "address": "172.25.10.71:20160",
            "version": "4.0.0",
            "status_address": "172.25.10.71:20180",
            "git_hash": "198a2cea01734ce8f46d55a29708f123f9133944",
            "start_timestamp": 1593651804,
            "deploy_path": "/data/tools/tiup/tikv-20160/bin",
            "last_heartbeat": 1594973275943799278,
            "state_name": "Up"
          },
          "status": {
            "capacity": "1.999TiB",
            "available": "1.974TiB",
            "used_size": "12.82GiB",
            "leader_count": 408,
            "leader_weight": 1,
            "leader_score": 408,
            "leader_size": 29522,
            "region_count": 1231,
            "region_weight": 1,
            "region_score": 89108,
            "region_size": 89108,
            "start_ts": "2020-07-02T09:03:24+08:00",
            "last_heartbeat_ts": "2020-07-17T16:07:55.943799278+08:00",
            "uptime": "367h4m31.943799278s"
          }
        },
        {
          "store": {
            "id": 90,
            "address": "172.25.10.72:3930",
            "labels": [
              {
                "key": "engine",
                "value": "tiflash"
              }
            ],
            "version": "v4.0.0",
            "peer_address": "172.25.10.72:20170",
            "status_address": "172.25.10.72:20292",
            "git_hash": "c51c2c5c18860aaef3b5853f24f8e9cefea167eb",
            "start_timestamp": 1593651770,
            "deploy_path": "/data/tools/tiup/tiflash-9000/bin/tiflash",
            "last_heartbeat": 1594973279666894791,
            "state_name": "Up"
          },
          "status": {
            "capacity": "1023GiB",
            "available": "991.8GiB",
            "used_size": "29.97KiB",
            "leader_count": 0,
            "leader_weight": 1,
            "leader_score": 0,
            "leader_size": 0,
            "region_count": 0,
            "region_weight": 1,
            "region_score": 0,
            "region_size": 0,
            "start_ts": "2020-07-02T09:02:50+08:00",
            "last_heartbeat_ts": "2020-07-17T16:07:59.666894791+08:00",
            "uptime": "367h5m9.666894791s"
          }
        },
        {
          "store": {
            "id": 1,
            "address": "172.25.10.70:20160",
            "version": "4.0.0",
            "status_address": "172.25.10.70:20180",
            "git_hash": "198a2cea01734ce8f46d55a29708f123f9133944",
            "start_timestamp": 1593651799,
            "deploy_path": "/data/tools/tiup/tikv-20160/bin",
            "last_heartbeat": 1594973275610562552,
            "state_name": "Up"
          },
          "status": {
            "capacity": "1.999TiB",
            "available": "1.976TiB",
            "used_size": "12.79GiB",
            "leader_count": 414,
            "leader_weight": 1,
            "leader_score": 414,
            "leader_size": 28735,
            "region_count": 1231,
            "region_weight": 1,
            "region_score": 89108,
            "region_size": 89108,
            "start_ts": "2020-07-02T09:03:19+08:00",
            "last_heartbeat_ts": "2020-07-17T16:07:55.610562552+08:00",
            "uptime": "367h4m36.610562552s"
          }
        },
        {
          "store": {
            "id": 7,
            "address": "172.25.10.69:20160",
            "version": "4.0.0",
            "status_address": "172.25.10.69:20180",
            "git_hash": "198a2cea01734ce8f46d55a29708f123f9133944",
            "start_timestamp": 1593651783,
            "deploy_path": "/data/tools/tiup/tikv-20160/bin",
            "last_heartbeat": 1594973282269254448,
            "state_name": "Up"
          },
          "status": {
            "capacity": "1.999TiB",
            "available": "1.975TiB",
            "used_size": "12.65GiB",
            "leader_count": 409,
            "leader_weight": 1,
            "leader_score": 409,
            "leader_size": 30851,
            "region_count": 1231,
            "region_weight": 1,
            "region_score": 89108,
            "region_size": 89108,
            "start_ts": "2020-07-02T09:03:03+08:00",
            "last_heartbeat_ts": "2020-07-17T16:08:02.269254448+08:00",
            "uptime": "367h4m59.269254448s"
          }
        }
      ]
    }

Once both region count and leader count reach 0, this node becomes Tombstone. Keep observing for now.
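If you would rather not eyeball the JSON each time, the check described above can be sketched in a few lines of Python. This is only an illustration: it assumes you have saved the raw output of the `store` command as valid JSON (plain ASCII quotes), the function name `offline_progress` is made up for this example, and the sample below is a trimmed copy of two stores from the output earlier in this thread.

```python
import json


def offline_progress(pd_store_output):
    """List stores still in the Offline state with their remaining leaders and regions."""
    data = json.loads(pd_store_output)
    progress = []
    for entry in data.get("stores", []):
        store = entry.get("store", {})
        status = entry.get("status", {})
        if store.get("state_name") != "Offline":
            continue  # Up and Tombstone stores are not in the middle of a scale-in
        progress.append({
            "id": store.get("id"),
            "address": store.get("address"),
            "leader_count": status.get("leader_count", 0),
            "region_count": status.get("region_count", 0),
        })
    return progress


# Trimmed sample: only the fields this sketch reads, values taken from this thread.
SAMPLE = """
{
  "count": 2,
  "stores": [
    {
      "store": {"id": 130237, "address": "172.25.10.68:20160", "state_name": "Offline"},
      "status": {"leader_count": 0, "region_count": 33}
    },
    {
      "store": {"id": 2, "address": "172.25.10.71:20160", "state_name": "Up"},
      "status": {"leader_count": 408, "region_count": 1231}
    }
  ]
}
"""

if __name__ == "__main__":
    for item in offline_progress(SAMPLE):
        print(item["id"], item["address"], item["leader_count"], item["region_count"])
```

A store that keeps appearing in this list with a non-zero `region_count` has not finished migrating its regions yet.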

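Hi, how is it going? Has the cluster's IO improved noticeably?

After scaling in the KV, IO went completely back to normal.

However, the KV that was scaled in still has regions: its region_count went from 33 to 35.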