最近集群开启了coredump,刚好有个tikv实例又出现此异常生成了core file比较大14GB左右,我把它上传到百度网盘,对应下载地址:https://pan.baidu.com/s/1R6BMkxopRsWfNsuNaAqmNg?pwd=v7yo ,@dbaspace @xfworld @TiDBer_Y3ry8Hgp @Meditator 请各位帮我分析一下这个core file,看具体什么错误导致的本异常?

@xfworld @Meditator @dbaspace @TiDBer_Y3ry8Hgp 各位大佬,本异常的相关跟踪信息及相关可视化监视汇总如下(各位可由此快速定位找到需要的信息,希望对各位帮忙快速解决问题起到帮助作用):

- 问题TiDB版本:v3.0.3

- 问题现象:tikv server一直正常稳定运行,突然发生segfault error=5异常被kill并自动重启。

- 问题发生时间:2021-01-07 01:58

- 问题发生的tikv节点:DC02上20173 tikv实例(DC02上共有2个tikv实例,另一个是20172实例),历史上集群所有tikv节点先后发生的类似异常统计列表如下:

| 时间 | 主机节点 | tikv实例 |

|---|---|---|

| 2021-08-30 07:00 | DC12 | 20171 |

| 2021-09-18 05:12 | DC02 | 20172 |

| 2021-09-27 10:49 | DC10 | 20171 |

| 2021-10-19 18:52 | DC11 | 20172 |

| 2021-11-18 22:01 | DC10 | 20171 |

| 2021-11-20 23:01 | DC10 | 20173 |

| 2020-12-23 12:53 | DC02 | 20172 |

| 2021-01-03 14:55 | DC18 | 20172 |

| 2021-01-06 03:08 | DC17 | 20172 |

| 2021-01-07 01:58 | DC02 | 20173 |

| 2021-01-07 11:00 | DC11 | 20172 |

| 2021-01-07 20:18 | DC11 | 20173 |

| 2022-01-10 01:56 | DC12 | 20171 |

| 2022-01-15 19:04 | DC12 | 20172 |

-

运行环境:

- 系统硬件结构:x86_64

- OS版本: Ubuntu 16.04.3 LTS (GNU/Linux 4.4.0-87-generic x86_64)

-

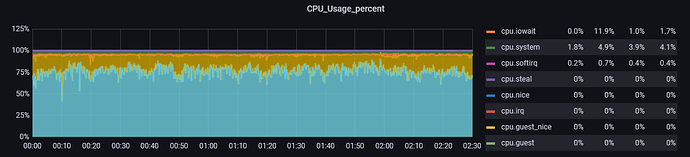

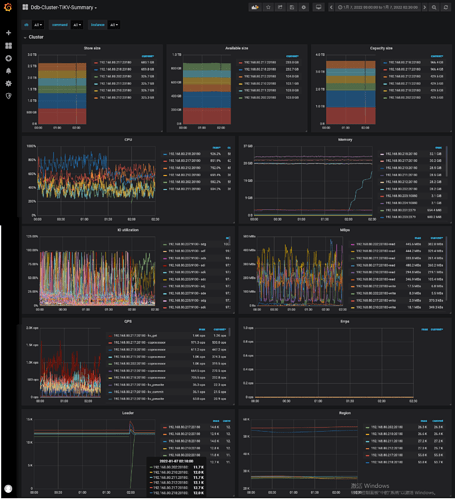

CPU监视图:

-

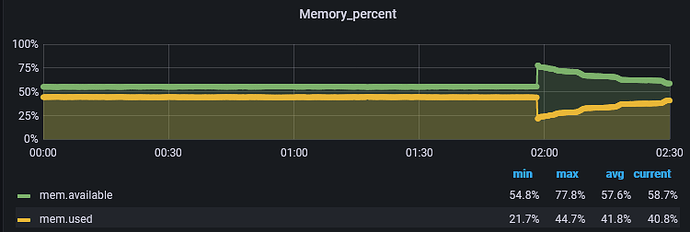

内存监视图:

-

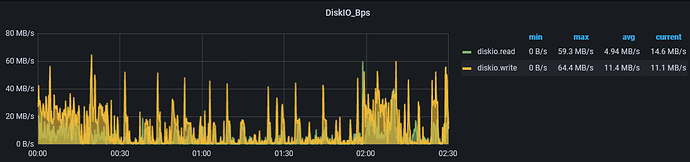

磁盘监视图:

-

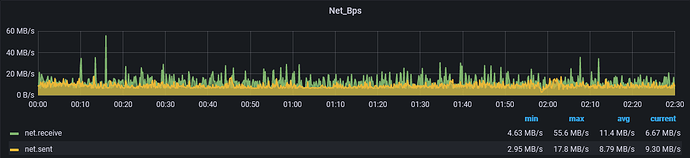

网络监视图:

-

TiKV节点监视图:

- dmesg相关日志: dmesg.out (95.9 KB)

- syslog相关日志:syslog.log (926 字节)

- tikv相关日志:tikv.log (10.4 KB)

- 相关core dump file:core(14.03 GB)

var/log/message 下面没有记录被 kill 的原因吗?

没开启coredump之前是有的,有下面这么几种:

-

deadlock-0[16224]: segfault at ffffffffffff0000 ip 000055d1e36c5c05 sp 00007f21765f8f30 error 5 in tikv-server[55d1e2312000+1804000]

-

grpc-server-1[16184]: segfault at ffffffffffff0000 ip 000055e14a632c05 sp 00007f19badf9f30 error 5 in tikv-server[55e14927f000+1804000]

-

pd-0[8872]: segfault at ffffffffffff0000 ip 000055df6fa57c05 sp 00007f6461577f30 error 5 in tikv-server[55df6e6a4000+1804000]

开启之后产生core文件后,就没这样的打印的估计是退出信号被coredump拦截了。

@spc_monkey @dbaspace @xfworld @Meditator @TiDBer_Y3ry8Hgp 使用GDB分析core打印信息如下:

…

[New LWP 20374]

[New LWP 20345]

[New LWP 20295]

[New LWP 20272]

[New LWP 3199]

[Thread debugging using libthread_db enabled]

Using host libthread_db library “/lib/x86_64-linux-gnu/libthread_db.so.1”.

Core was generated by `bin/tikv-server --addr 0.0.0.0:20172 --advertise-addr 192.168.80.212:20172 --st’.

Program terminated with signal SIGABRT, Aborted.

#0 0x00007f54c61c6428 in raise () from /lib/x86_64-linux-gnu/libc.so.6

warning: Missing auto-load scripts referenced in section .debug_gdb_scripts

在gdb 中,敲下 bt 看看更多信息呢

gdb bt后打印信息如下:

(gdb) bt

Python Exception <class ‘ImportError’> No module named ‘gdb.frames’:

#0 0x00007f54c61c6428 in raise () from /lib/x86_64-linux-gnu/libc.so.6

#1 0x00007f54c61c802a in abort () from /lib/x86_64-linux-gnu/libc.so.6

#2 0x0000558b804176ab in perform_stream_op_locked (stream_op=0x7f5414a64368, error_ignored=)

at /rust/registry/src/github.com-1ecc6299db9ec823/grpcio-sys-0.4.2/grpc/src/core/ext/transport/chttp2/transport/chttp2_transport.cc:1641

#3 0x0000558b803df19e in exec_ctx_run (closure=, error=0x0)

at /rust/registry/src/github.com-1ecc6299db9ec823/grpcio-sys-0.4.2/grpc/src/core/lib/iomgr/exec_ctx.cc:40

#4 0x0000558b80416f78 in perform_stream_op_locked (stream_op=0x7f5414a64368, error_ignored=)

at /rust/registry/src/github.com-1ecc6299db9ec823/grpcio-sys-0.4.2/grpc/src/core/ext/transport/chttp2/transport/chttp2_transport.cc:1676

#5 0x0000558b80409715 in grpc_combiner_continue_exec_ctx ()

at /rust/registry/src/github.com-1ecc6299db9ec823/grpcio-sys-0.4.2/grpc/src/core/lib/iomgr/combiner.cc:260

#6 0x0000558b803df3ef in grpc_core::ExecCtx::Flush (this=0x7f54761fb540)

at /rust/registry/src/github.com-1ecc6299db9ec823/grpcio-sys-0.4.2/grpc/src/core/lib/iomgr/exec_ctx.cc:137

#7 0x0000558b804c5aa0 in end_worker (worker_hdl=, worker=0x7f54761fb040, pollset=)

at /rust/registry/src/github.com-1ecc6299db9ec823/grpcio-sys-0.4.2/grpc/src/core/lib/iomgr/ev_epoll1_linux.cc:947

#8 pollset_work (ps=, worker_hdl=, deadline=)

at /rust/registry/src/github.com-1ecc6299db9ec823/grpcio-sys-0.4.2/grpc/src/core/lib/iomgr/ev_epoll1_linux.cc:1047

#9 0x0000558b803f1e0d in cq_next (cq=0x7f549f6d7700, deadline=…, reserved=)

at /rust/registry/src/github.com-1ecc6299db9ec823/grpcio-sys-0.4.2/grpc/src/core/lib/surface/completion_queue.cc:1040

#10 0x0000558b803f267b in grpc_completion_queue_next (cq=, deadline=…, reserved=)

at /rust/registry/src/github.com-1ecc6299db9ec823/grpcio-sys-0.4.2/grpc/src/core/lib/surface/completion_queue.cc:1115

#11 0x0000558b7fedaeee in next (self=) at /rust/registry/src/github.com-1ecc6299db9ec823/grpcio-0.4.2/src/cq.rs:150

#12 poll_queue (cq=…) at /rust/registry/src/github.com-1ecc6299db9ec823/grpcio-0.4.2/src/env.rs:28

#13 {{closure}} () at /rust/registry/src/github.com-1ecc6299db9ec823/grpcio-0.4.2/src/env.rs:89

#14 std::sys_common::backtrace::__rust_begin_short_backtrace::hd079d5c938b01150 (f=…)

at /rustc/0e4a56b4b04ea98bb16caada30cb2418dd06e250/src/libstd/sys_common/backtrace.rs:77

#15 0x0000558b7fedadbd in {{closure}}<closure,()> () at /rustc/0e4a56b4b04ea98bb16caada30cb2418dd06e250/src/libstd/thread/mod.rs:470

#16 call_once<(),closure> (self=…, _args=) at /rustc/0e4a56b4b04ea98bb16caada30cb2418dd06e250/src/libstd/panic.rs:309

#17 do_call<std::panic::AssertUnwindSafe,()> (data=) at /rustc/0e4a56b4b04ea98bb16caada30cb2418dd06e250/src/libstd/panicking.rs:294

#18 __rust_maybe_catch_panic () at /rustc/0e4a56b4b04ea98bb16caada30cb2418dd06e250//src/libpanic_abort/lib.rs:29

#19 try<(),std::panic::AssertUnwindSafe> (f=…) at /rustc/0e4a56b4b04ea98bb16caada30cb2418dd06e250/src/libstd/panicking.rs:273

#20 catch_unwind<std::panic::AssertUnwindSafe,()> (f=…) at /rustc/0e4a56b4b04ea98bb16caada30cb2418dd06e250/src/libstd/panic.rs:388

#21 {{closure}}<closure,()> () at /rustc/0e4a56b4b04ea98bb16caada30cb2418dd06e250/src/libstd/thread/mod.rs:469

#22 core::ops::function::FnOnce::call_once$u7b$$u7b$vtable.shim$u7d$$u7d$::h05971589d154f23d ()

at /rustc/0e4a56b4b04ea98bb16caada30cb2418dd06e250/src/libcore/ops/function.rs:231

#23 0x0000558b80061aaf in _$LT$alloc…boxed…Box$LT$F$GT$$u20$as$u20$core…ops…function…FnOnce$LT$A$GT$$GT$::call_once::he71721d2d956d451 ()

at /rustc/0e4a56b4b04ea98bb16caada30cb2418dd06e250/src/liballoc/boxed.rs:746

#24 0x0000558b80063ddc in std::sys::unix::thread_start::h18485805666ccd3c ()

at /rustc/0e4a56b4b04ea98bb16caada30cb2418dd06e250/src/liballoc/boxed.rs:746

#25 0x00007f54c6b846ba in start_thread () from /lib/x86_64-linux-gnu/libpthread.so.0

#26 0x00007f54c62983dd in clone () from /lib/x86_64-linux-gnu/libc.so.6

收到了

应该是这个问题: https://github.com/grpc/grpc/issues/16876,建议考虑一下 升个级( 你的版本,有点太久了,其实现在已经不维护了的)

@flyingfish 问一下 还有出问题前后一小时的 tikv 日志不,能发一下不

tikv.log (10.4 KB) 这个就是那个问题tikv实例的前后半小时内的日志

日志级别你们调整了吗,咋这么少(帮说明一下:这个时间段,咱们有没有做过什么操作不,还有这个 tikv 重启之后,还会再次发生类似问题吗?以及咱们这台服务区上,都有哪些 实例?)

我们为了减少日志存储,tikv实例的日志级别都统一是warn级别,发生这个异常是在凌晨夜里没有什么人工和数据库进行交互只有后台的数据同步程序会定期往数据库提交插入数据,这个服务器上除了它还有个20172实例,这个tikv实例自从这次2021-01-07 01:58发生本异常重启后一直稳定正常运行还没再发生类似异常重启,其他节点因本异常重启的统计看参见前面的统计汇总。

@spc_monkey 今天下午又有另一个服务器节点上的tikv实例报出了类似异常,syslog中打印的信息是“ deadlock-0[158756]: segfault at ffffffffffff0000 ip 0000558260572c05 sp 00007f39e83f8f30 error 5 in tikv-server[55825f1bf000+1804000]” ,gdb bt后打印信息如下:

(gdb) bt

#0 gpr_mpscq_pop_and_check_end (q=q@entry=0x7f3a46e0da18, empty=empty@entry=0x7f39e83f8f5f)

at /rust/registry/src/github.com-1ecc6299db9ec823/grpcio-sys-0.4.2/grpc/src/core/lib/gpr/mpscq.cc:51

#1 0x0000558260572c8e in gpr_mpscq_pop (q=q@entry=0x7f3a46e0da18)

at /rust/registry/src/github.com-1ecc6299db9ec823/grpcio-sys-0.4.2/grpc/src/core/lib/gpr/mpscq.cc:46

#2 0x00005582605796fe in grpc_combiner_continue_exec_ctx ()

at /rust/registry/src/github.com-1ecc6299db9ec823/grpcio-sys-0.4.2/grpc/src/core/lib/iomgr/combiner.cc:244

#3 0x000055826054f3ef in grpc_core::ExecCtx::Flush (this=0x7f39e83f9540)

at /rust/registry/src/github.com-1ecc6299db9ec823/grpcio-sys-0.4.2/grpc/src/core/lib/iomgr/exec_ctx.cc:137

#4 0x0000558260635aa0 in end_worker (worker_hdl=, worker=0x7f39e83f9040, pollset=)

at /rust/registry/src/github.com-1ecc6299db9ec823/grpcio-sys-0.4.2/grpc/src/core/lib/iomgr/ev_epoll1_linux.cc:947

#5 pollset_work (ps=, worker_hdl=, deadline=)

at /rust/registry/src/github.com-1ecc6299db9ec823/grpcio-sys-0.4.2/grpc/src/core/lib/iomgr/ev_epoll1_linux.cc:1047

#6 0x0000558260561e0d in cq_next (cq=0x7f39f1991600, deadline=…, reserved=)

at /rust/registry/src/github.com-1ecc6299db9ec823/grpcio-sys-0.4.2/grpc/src/core/lib/surface/completion_queue.cc:1040

#7 0x000055826056267b in grpc_completion_queue_next (cq=, deadline=…, reserved=)

at /rust/registry/src/github.com-1ecc6299db9ec823/grpcio-sys-0.4.2/grpc/src/core/lib/surface/completion_queue.cc:1115

#8 0x000055826004aeee in grpcio::cq::CompletionQueue::next::haf34aafada444a54 (self=)

at /rust/registry/src/github.com-1ecc6299db9ec823/grpcio-0.4.2/src/cq.rs:150

#9 grpcio::env::poll_queue::h6adef79f38b98ee3 (cq=…) at /rust/registry/src/github.com-1ecc6299db9ec823/grpcio-0.4.2/src/env.rs:28

#10 grpcio::env::EnvBuilder::build::$u7b$$u7b$closure$u7d$$u7d$::h3044f6b093ba42a2 ()

at /rust/registry/src/github.com-1ecc6299db9ec823/grpcio-0.4.2/src/env.rs:89

#11 std::sys_common::backtrace::__rust_begin_short_backtrace::hd079d5c938b01150 (f=…)

at /rustc/0e4a56b4b04ea98bb16caada30cb2418dd06e250/src/libstd/sys_common/backtrace.rs:77

#12 0x000055826004adbd in std::Builder::spawn_unchecked::$u7b$$u7b$closure$u7d$$u7d$::_$u7b$$u7b$closure$u7d$$u7d$::h8ffbcaaec80ba74f ()

at /rustc/0e4a56b4b04ea98bb16caada30cb2418dd06e250/src/libstd/thread/mod.rs:470

#13 _$LT$std…panic…AssertUnwindSafe$LT$F$GT$$u20$as$u20$core…ops…function…FnOnce$LT$$LP$$RP$$GT$$GT$::call_once::he660d6025d7955f2 (self=…,

args=) at /rustc/0e4a56b4b04ea98bb16caada30cb2418dd06e250/src/libstd/panic.rs:309

#14 std::panicking::try::do_call::hba669f49d310757a (data=) at /rustc/0e4a56b4b04ea98bb16caada30cb2418dd06e250/src/libstd/panicking.rs:294

#15 __rust_maybe_catch_panic () at /rustc/0e4a56b4b04ea98bb16caada30cb2418dd06e250//src/libpanic_abort/lib.rs:29

#16 std::panicking::try::h28614e118e15c912 (f=…) at /rustc/0e4a56b4b04ea98bb16caada30cb2418dd06e250/src/libstd/panicking.rs:273

#17 std::panic::catch_unwind::hae36d9a202084c6f (f=…) at /rustc/0e4a56b4b04ea98bb16caada30cb2418dd06e250/src/libstd/panic.rs:388

#18 std::Builder::spawn_unchecked::$u7b$$u7b$closure$u7d$$u7d$::h111b88860ea14bd5 ()

at /rustc/0e4a56b4b04ea98bb16caada30cb2418dd06e250/src/libstd/thread/mod.rs:469

#19 core::ops::function::FnOnce::call_once$u7b$$u7b$vtable.shim$u7d$$u7d$::h05971589d154f23d ()

at /rustc/0e4a56b4b04ea98bb16caada30cb2418dd06e250/src/libcore/ops/function.rs:231

#20 0x00005582601d1aaf in _$LT$alloc…boxed…Box$LT$F$GT$$u20$as$u20$core…ops…function…FnOnce$LT$A$GT$$GT$::call_once::he71721d2d956d451 ()

at /rustc/0e4a56b4b04ea98bb16caada30cb2418dd06e250/src/liballoc/boxed.rs:746

#21 0x00005582601d3ddc in std::sys::unix::thread_start::h18485805666ccd3c ()

at /rustc/0e4a56b4b04ea98bb16caada30cb2418dd06e250/src/liballoc/boxed.rs:746

#22 0x00007f3a97c316ba in start_thread () from /lib/x86_64-linux-gnu/libpthread.so.0

#23 0x00007f3a973453dd in clone () from /lib/x86_64-linux-gnu/libc.so.6

你再结合这个消息看看是不是还有别的错误原因?另外还有个重要信息就是 我们的操作系统是Ubuntu Server 16.04, 网上查了这个版本是不支持EPOLLEXCLUSIVE的,后面稍新的版本(如16.10)才支持,看这个是不是也会诱发此异常?

内核 访问 ffffffffffff0000 发生错误,该错误不是产生在用户态,个人目前倾向于 不是 tikv 的问题,怀疑是 kernel 问题。

这个需要 获取 /proc/pid/maps 查看内存分布、tikv 日志、操作系统日志,可以的话都给我下,出问题前后的半小时

上面是我们研发同学看了之后的结论,如果方便,可以提供一下上面的信息

- maps (对应是重启后新进程,原来崩溃进程对应maps没有了) : maps (32.5 KB)

- tikv日志: tikv.log (5.7 KB)

- syslogs日志:syslog.log (976 字节)

- dmesg: dmesg.out (127.9 KB)

好的,我让硬件运维的同事也帮忙看一下。

如果是kernal问题会不会和Ubuntu Server 16.04这个版本不支持 EPOLLEXCLUSIVE 有关呢?