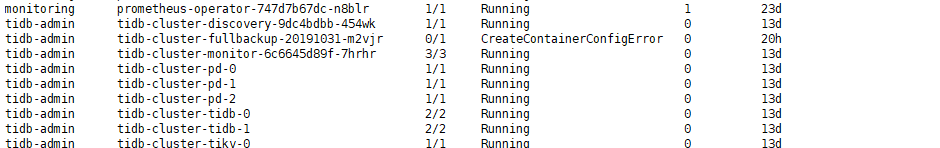

Deploying TiDB on a k8s cluster

The tidb-backup job fails to start:

    [root@worker10 tidb-backup]# kubectl logs tidb-cluster-fullbackup-20191031-m2vjr -n tidb-admin
    Error from server (BadRequest): container "backup" in pod "tidb-cluster-fullbackup-20191031-m2vjr" is waiting to start: CreateContainerConfigError
    [root@worker10 tidb-backup]#

    [root@worker10 tidb-backup]# kubectl describe pods tidb-cluster-fullbackup-20191031-m2vjr -n tidb-admin
    Name:           tidb-cluster-fullbackup-20191031-m2vjr
    Namespace:      tidb-admin
    Node:           10.1.52.29/10.1.52.29
    Start Time:     Thu, 31 Oct 2019 13:46:06 +0800
    Labels:         app.kubernetes.io/component=backup
                    app.kubernetes.io/instance=denal-tidb-backup
                    app.kubernetes.io/managed-by=Tiller
                    app.kubernetes.io/name=tidb-backup
                    controller-uid=718a0f2e-fba1-11e9-95ef-18602480f56a
                    helm.sh/chart=tidb-backup-v1.0.0
                    job-name=tidb-cluster-fullbackup-20191031
    Annotations:    <none>
    Status:         Pending
    IP:             172.20.22.7
    Controlled By:  Job/tidb-cluster-fullbackup-20191031
    Containers:
      backup:
        Container ID:
        Image:         pingcap/tidb-cloud-backup:20190610
        Image ID:
        Port:          <none>
        Host Port:     <none>
        Command:
          /bin/sh
          -c
          set -euo pipefail
          host=$(getent hosts tidb-cluster-tidb | head | awk '{print $1}')
          dirname=/data/${BACKUP_NAME}
          echo "making dir ${dirname}"
          mkdir -p ${dirname}
          password_str=""
          if [ -n "${TIDB_PASSWORD}" ];
          then
            password_str="-p${TIDB_PASSWORD}"
          fi
          gc_life_time=`/usr/bin/mysql -h${host} -P4000 -u${TIDB_USER} ${password_str} -Nse "select variable_value from mysql.tidb where variable_name='tikv_gc_life_time';"`
          echo "Old TiKV GC life time is ${gc_life_time}"
          echo "Increase TiKV GC life time to 3h"
          /usr/bin/mysql -h${host} -P4000 -u${TIDB_USER} ${password_str} -Nse "update mysql.tidb set variable_value='3h' where variable_name='tikv_gc_life_time';"
          /usr/bin/mysql -h${host} -P4000 -u${TIDB_USER} ${password_str} -Nse "select variable_name,variable_value from mysql.tidb where variable_name='tikv_gc_life_time';"
          if [ -n "" ];
          then
            snapshot_args="--tidb-snapshot="
            echo "commitTS = " > ${dirname}/savepoint
            cat ${dirname}/savepoint
          fi
          /mydumper \
            --outputdir=${dirname} \
            --host=${host} \
            --port=4000 \
            --user=${TIDB_USER} \
            --password=${TIDB_PASSWORD} \
            --long-query-guard=3600 \
            --tidb-force-priority=LOW_PRIORITY \
            --verbose=3 ${snapshot_args:-}
          echo "Reset TiKV GC life time to ${gc_life_time}"
          /usr/bin/mysql -h${host} -P4000 -u${TIDB_USER} ${password_str} -Nse "update mysql.tidb set variable_value='${gc_life_time}' where variable_name='tikv_gc_life_time';"
          /usr/bin/mysql -h${host} -P4000 -u${TIDB_USER} ${password_str} -Nse "select variable_name,variable_value from mysql.tidb where variable_name='tikv_gc_life_time';"
        State:          Waiting
          Reason:       CreateContainerConfigError
        Ready:          False
        Restart Count:  0
        Environment:
          BACKUP_NAME:    fullbackup-20191031
          TIDB_USER:      <set to the key 'user' in secret 'backup-secret'>      Optional: false
          TIDB_PASSWORD:  <set to the key 'password' in secret 'backup-secret'>  Optional: false
        Mounts:
          /data from data (rw)
          /var/run/secrets/kubernetes.io/serviceaccount from default-token-brsjh (ro)
    Conditions:
      Type              Status
      Initialized       True
      Ready             False
      ContainersReady   False
      PodScheduled      True
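The backup Command runs under `set -euo pipefail`, so expanding an unset variable would abort it. `snapshot_args` is only assigned inside `if [ -n "" ]`, which appears to be a template value that was rendered empty and is therefore always false, which is why the last mydumper argument is written as `${snapshot_args:-}`. None of this script has run yet here, because the container was never created. A minimal sketch of that `set -u` pattern, where `render_snapshot_args` and the commit TS value are hypothetical and not part of the tidb-backup chart:

```sh
#!/bin/sh
# Sketch of the pattern in the backup Command: under `set -u`, expanding an
# unset variable aborts the shell, while ${var:-} expands to "" instead.
# render_snapshot_args is a hypothetical helper, not part of the tidb-backup chart.
set -u

render_snapshot_args() {
    commit_ts="$1"   # stands in for the template value rendered into `if [ -n "" ]`
    unset snapshot_args
    if [ -n "$commit_ts" ]; then
        snapshot_args="--tidb-snapshot=${commit_ts}"
    fi
    # Safe even when snapshot_args was never assigned.
    printf '%s\n' "${snapshot_args:-}"
}

render_snapshot_args ""        # prints an empty line
render_snapshot_args 412345    # prints --tidb-snapshot=412345 (hypothetical TS)
```

Writing `"$snapshot_args"` without the `:-` would make the first call fail with an "unbound variable" error instead of passing no extra argument.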

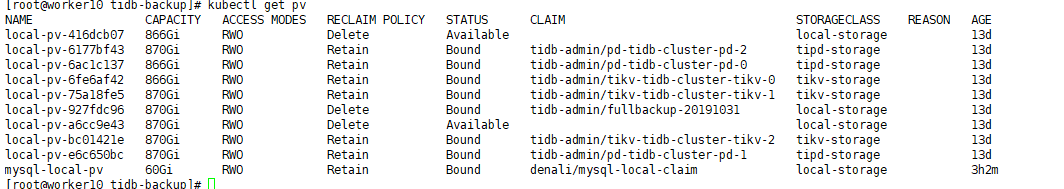

    Volumes:
      data:
        Type:       PersistentVolumeClaim (a reference to a PersistentVolumeClaim in the same namespace)
        ClaimName:  fullbackup-20191031
        ReadOnly:   false
      default-token-brsjh:
        Type:        Secret (a volume populated by a Secret)
        SecretName:  default-token-brsjh
        Optional:    false
    QoS Class:       BestEffort
    Node-Selectors:  <none>
    Tolerations:     <none>
    Events:
      Type    Reason  Age                  From                 Message
      ----    ------  ----                 ----                 -------
      Normal  Pulled  0s (x5608 over 20h)  kubelet, 10.1.52.29  Container image "pingcap/tidb-cloud-backup:20190610" already present on machine
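The Environment section shows that `TIDB_USER` and `TIDB_PASSWORD` are read from the keys `user` and `password` of Secret `backup-secret`, both with `Optional: false`. The kubelet reports `CreateContainerConfigError` when it cannot assemble the container's configuration, and a referenced Secret or key that does not exist in the Pod's own namespace (here `tidb-admin`) is a common cause. Because the container is never created, `kubectl logs` has nothing to return. A minimal sketch for checking this, assuming `kubectl` is configured for this cluster; `check_secret_keys` is a hypothetical helper name:

```sh
#!/bin/sh
# Hypothetical helper: verify that a Secret exists in a namespace and that each
# listed key is present and non-empty. Assumes kubectl is configured for the cluster.
check_secret_keys() {
    ns="$1"; name="$2"; shift 2
    # `kubectl get secret` exits non-zero if the Secret does not exist.
    if ! kubectl get secret "$name" -n "$ns" >/dev/null; then
        echo "secret $ns/$name not found" >&2
        return 1
    fi
    missing=0
    for key in "$@"; do
        # With jsonpath output, a missing key prints nothing, because
        # --allow-missing-template-keys defaults to true.
        value=$(kubectl get secret "$name" -n "$ns" -o "jsonpath={.data.$key}")
        if [ -z "$value" ]; then
            echo "key '$key' missing or empty in secret $ns/$name" >&2
            missing=1
        fi
    done
    return "$missing"
}

# Usage against the Pod above:
# check_secret_keys tidb-admin backup-secret user password
```

If the Secret or a key turns out to be missing, creating it in `tidb-admin`, for example with `kubectl create secret generic backup-secret --namespace=tidb-admin --from-literal=user=<user> --from-literal=password=<password>` (placeholders to fill in), should let the kubelet create the container on its next retry.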