Each TiKV server has 3 SSDs, all performing at roughly the same level:

fio Pass IOPS of random read: 66534.010152

fio Pass IOPS of random read: 17758.630063, write: 17868.442512

fio Pass Latency of random read: 156743.568944ns, write: 25442.079436ns

permission Pass /mnt/disk5/tikv/deploy/data is writable

fio Pass IOPS of random read: 66500.253678

fio Pass IOPS of random read: 17380.686353, write: 17488.161745

fio Pass Latency of random read: 154928.353020ns, write: 24432.604653ns

permission Pass /mnt/disk6/tikv/deploy/data is writable

fio Pass IOPS of random read: 52660.506227

fio Pass IOPS of random read: 13847.816872, write: 13933.446376

fio Pass Latency of random read: 226612.086089ns, write: 24528.027354ns

permission Pass /mnt/disk4/tikv/deploy/da
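As a rough way to check how close the three disks really are, the random-read IOPS lines above can be compared against the best one. This is only a minimal sketch: the helper names `parse_read_iops` and `relative_gap` are made up for illustration, and the disks are labeled by order of appearance in the output rather than by mount point.

```python
import re

# Random-read IOPS lines copied from the check output above, in order of appearance.
CHECK_OUTPUT = """\
fio Pass IOPS of random read: 66534.010152
fio Pass IOPS of random read: 66500.253678
fio Pass IOPS of random read: 52660.506227
"""


def parse_read_iops(text):
    """Return the pure random-read IOPS values found in the text, in order.

    Mixed read/write lines ("..., write: ...") are skipped because the
    pattern requires the number to be the last thing on the line.
    """
    pattern = re.compile(r"IOPS of random read: ([0-9.]+)\s*$", re.MULTILINE)
    return [float(m.group(1)) for m in pattern.finditer(text)]


def relative_gap(values):
    """Percentage by which each value falls below the best (largest) one."""
    best = max(values)
    return [(best - v) / best * 100 for v in values]


if __name__ == "__main__":
    iops = parse_read_iops(CHECK_OUTPUT)
    for i, (value, gap) in enumerate(zip(iops, relative_gap(iops)), start=1):
        print(f"disk group {i}: {value:.0f} IOPS, {gap:.1f}% below the best")
```

On these numbers the first two disks are nearly identical, while the third one's random-read IOPS is roughly a fifth lower, which may be worth keeping in mind when reading the per-store panels.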

I also adjusted apply-pool-size=4 and raftstore.apply-max-batch-size=2048. After re-testing, I found:

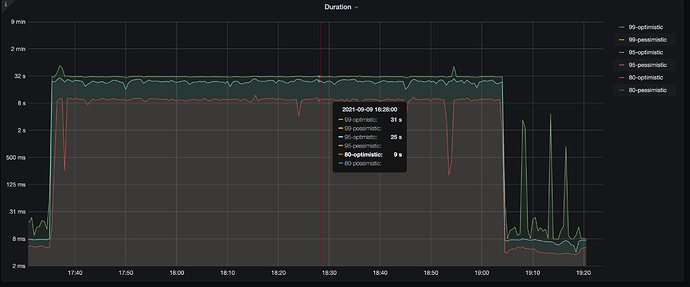

transaction duration dropped to around 30s:

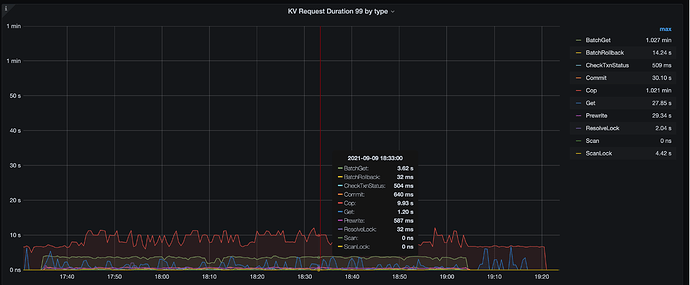

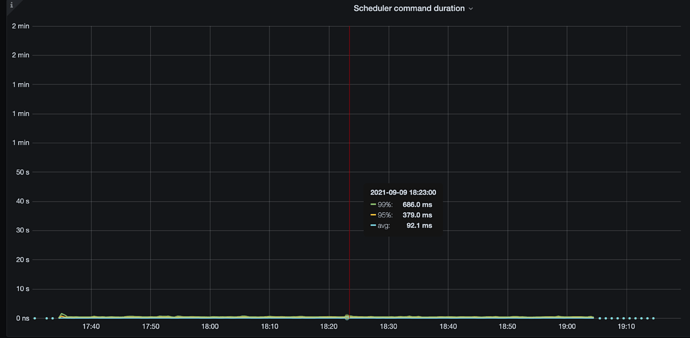

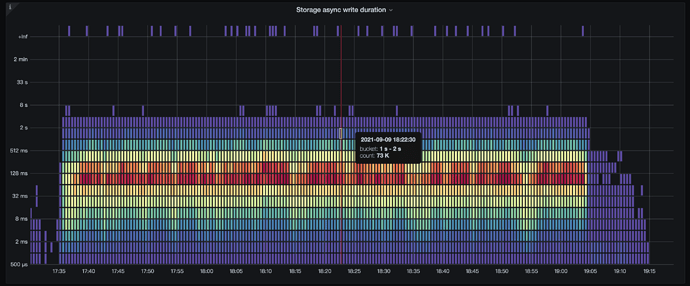

Most of the time is spent in batch_get and coprocessor (consistent with what gRPC duration shows; in addition, scheduler prewrite duration and async write duration are both low):

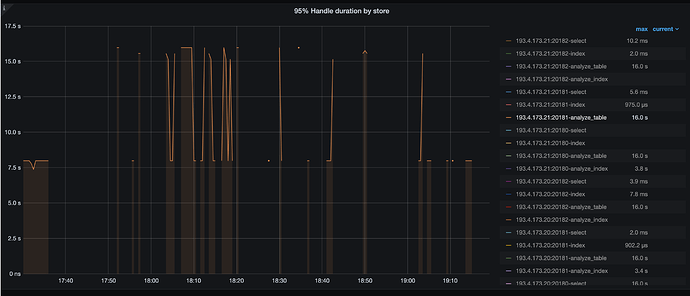

coprocessor detail → 95% handle duration by store shows analyze_table peaking at 16s on all TiKV nodes, and the line for each node is discontinuous:

However, in coprocessor overview, both ops and duration look very low:

How should I interpret this situation? Is it possible to tell what the cause is?