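This problem is still not resolved, and it looks like a bug to me. Following your earlier suggestion that the version gap might be too large, I tried upgrading from 4.0.7 to 5.1.0 and then installing TiFlash. The failure is the same as above.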

The Dashboard still cannot get the data. Everything else displays normally, and the cluster seems to work fine in use.

Have you cleared the browser cache? The Dashboard views are rendered by JS scripts, and Chrome may be serving a cached copy of the JS.

Yes, I cleared it. Same result.

From the database:

MySQL [(none)]> select * from INFORMATION_SCHEMA.CLUSTER_HARDWARE where TYPE='tiflash';

Empty set, 2 warnings (0.02 sec)

MySQL [(none)]> select * from INFORMATION_SCHEMA.CLUSTER_LOAD where TYPE='tiflash';

+---------+-------------------+-------------+-------------+--------------+-------------+
| TYPE    | INSTANCE          | DEVICE_TYPE | DEVICE_NAME | NAME         | VALUE       |
+---------+-------------------+-------------+-------------+--------------+-------------+
| tiflash | 10.103.6.114:3930 | cpu         | cpu         | load1        | 0.020000    |
| tiflash | 10.103.6.114:3930 | cpu         | cpu         | load5        | 0.060000    |
| tiflash | 10.103.6.114:3930 | cpu         | cpu         | load15       | 0.060000    |
| tiflash | 10.103.6.114:3930 | cpu         | usage       | user         | 0.00        |
| tiflash | 10.103.6.114:3930 | cpu         | usage       | nice         | 0.00        |
| tiflash | 10.103.6.114:3930 | cpu         | usage       | system       | 0.00        |
| tiflash | 10.103.6.114:3930 | cpu         | usage       | idle         | 1.00        |
| tiflash | 10.103.6.114:3930 | cpu         | usage       | iowait       | 0.00        |
| tiflash | 10.103.6.114:3930 | cpu         | usage       | irq          | 0.00        |
| tiflash | 10.103.6.114:3930 | cpu         | usage       | softirq      | 0.00        |
| tiflash | 10.103.6.114:3930 | cpu         | usage       | steal        | 0.00        |
| tiflash | 10.103.6.114:3930 | cpu         | usage       | guest        | 0.00        |
| tiflash | 10.103.6.114:3930 | cpu         | usage       | guest_nice   | 0.00        |
| tiflash | 10.103.6.114:3930 | memory      | swap        | total        | 0           |
| tiflash | 10.103.6.114:3930 | memory      | swap        | free         | 0           |
| tiflash | 10.103.6.114:3930 | memory      | swap        | used         | 0           |
| tiflash | 10.103.6.114:3930 | memory      | swap        | free-percent | -nan        |
| tiflash | 10.103.6.114:3930 | memory      | swap        | used-percent | -nan        |
| tiflash | 10.103.6.114:3930 | memory      | virtual     | total        | 33567223808 |
| tiflash | 10.103.6.114:3930 | memory      | virtual     | free         | 30795001856 |
| tiflash | 10.103.6.114:3930 | memory      | virtual     | used         | 1879453696  |
| tiflash | 10.103.6.114:3930 | memory      | virtual     | free-percent | 0.92        |
| tiflash | 10.103.6.114:3930 | memory      | virtual     | used-percent | 0.06        |
| tiflash | 10.103.6.113:3930 | cpu         | cpu         | load1        | 0.010000    |
| tiflash | 10.103.6.113:3930 | cpu         | cpu         | load5        | 0.090000    |
| tiflash | 10.103.6.113:3930 | cpu         | cpu         | load15       | 0.080000    |
| tiflash | 10.103.6.113:3930 | cpu         | usage       | user         | 0.00        |
| tiflash | 10.103.6.113:3930 | cpu         | usage       | nice         | 0.00        |
| tiflash | 10.103.6.113:3930 | cpu         | usage       | system       | 0.00        |
| tiflash | 10.103.6.113:3930 | cpu         | usage       | idle         | 1.00        |
| tiflash | 10.103.6.113:3930 | cpu         | usage       | iowait       | 0.00        |
| tiflash | 10.103.6.113:3930 | cpu         | usage       | irq          | 0.00        |
| tiflash | 10.103.6.113:3930 | cpu         | usage       | softirq      | 0.00        |
| tiflash | 10.103.6.113:3930 | cpu         | usage       | steal        | 0.00        |
| tiflash | 10.103.6.113:3930 | cpu         | usage       | guest        | 0.00        |
| tiflash | 10.103.6.113:3930 | cpu         | usage       | guest_nice   | 0.00        |
| tiflash | 10.103.6.113:3930 | memory      | swap        | total        | 0           |
| tiflash | 10.103.6.113:3930 | memory      | swap        | free         | 0           |
| tiflash | 10.103.6.113:3930 | memory      | swap        | used         | 0           |
| tiflash | 10.103.6.113:3930 | memory      | swap        | free-percent | -nan        |
| tiflash | 10.103.6.113:3930 | memory      | swap        | used-percent | -nan        |
| tiflash | 10.103.6.113:3930 | memory      | virtual     | total        | 33567223808 |
| tiflash | 10.103.6.113:3930 | memory      | virtual     | free         | 26071912448 |
| tiflash | 10.103.6.113:3930 | memory      | virtual     | used         | 2201853952  |
| tiflash | 10.103.6.113:3930 | memory      | virtual     | free-percent | 0.78        |
| tiflash | 10.103.6.113:3930 | memory      | virtual     | used-percent | 0.07        |
+---------+-------------------+-------------+-------------+--------------+-------------+

46 rows in set (1.02 sec)
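
The two results above disagree: CLUSTER_LOAD returns rows for both TiFlash instances, while CLUSTER_HARDWARE returns nothing for them. A minimal sketch of that comparison, assuming the rows have already been fetched as tuples in the column order shown above. The helper name `instances_missing_hardware` and the abridged sample rows are illustrative and not part of TiDB:

```python
def instances_missing_hardware(load_rows, hardware_rows):
    """Return instances that report load data but no hardware data.

    Each row is a tuple whose second field is INSTANCE, matching the
    (TYPE, INSTANCE, ...) column order of the two cluster tables.
    """
    with_load = {row[1] for row in load_rows}
    with_hardware = {row[1] for row in hardware_rows}
    return sorted(with_load - with_hardware)


# Sample rows copied from the CLUSTER_LOAD output in this thread (abridged).
load_rows = [
    ("tiflash", "10.103.6.114:3930", "cpu", "cpu", "load1", "0.020000"),
    ("tiflash", "10.103.6.113:3930", "cpu", "cpu", "load1", "0.010000"),
]
hardware_rows = []  # CLUSTER_HARDWARE returned an empty set

print(instances_missing_hardware(load_rows, hardware_rows))
```

With the data from this thread, both TiFlash instances end up in the result, which matches the "Empty set, 2 warnings" line: one warning per instance.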

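Check whether the Chrome console shows any JS error logs on the Hosts page.

If it does, please post them in full.

What does the console show?

Refresh the current page and see whether the console reports any errors.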

I refreshed it. No errors.

You could try a rolling restart of PD:

tiup cluster reload <cluster-name> -R pd

I did a rolling restart, and in fact restarted the whole cluster. It still does not work.

{,…}

hosts:[{host: "10.103.6.111", cpu_info: {arch: "amd64", logical_cores: 16, physical_cores: 16},…},…]

0:{host: "10.103.6.111", cpu_info: {arch: "amd64", logical_cores: 16, physical_cores: 16},…}

1:{host: "10.103.6.112", cpu_info: {arch: "amd64", logical_cores: 16, physical_cores: 16},…}

2:{host: "10.103.6.113", cpu_info: {arch: "amd64", logical_cores: 16, physical_cores: 16},…}

3:{host: "10.103.6.114", cpu_info: null, cpu_usage: {idle: 0.97, system: 0},…}

4:{host: "10.103.6.91", cpu_info: {arch: "amd64", logical_cores: 16, physical_cores: 16},…}

5:{host: "10.103.6.92", cpu_info: {arch: "amd64", logical_cores: 16, physical_cores: 16},…}

6:{host: "10.103.6.93", cpu_info: {arch: "amd64", logical_cores: 16, physical_cores: 16},…}

warning:null

The details for 10.103.6.114 are as follows:

{host: "10.103.6.114", cpu_info: null, cpu_usage: {idle: 0.97, system: 0},…}

cpu_info:null

cpu_usage:{idle: 0.97, system: 0}

host:"10.103.6.114"

instances:{}

memory_usage:{used: 2302750720, total: 33567223808}

partitions:{}

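Judging from the code, 113 shows up because it is also a PD node, so its hardware information is displayed.

114 is a standalone TiFlash deployment, so we still need to look into why its hardware information was not collected.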
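
To spot such hosts without expanding every entry in the browser, the response can be filtered for entries whose `cpu_info` is null. A minimal sketch, assuming the response body has been saved as JSON. The sample below is abridged to the fields visible in this thread, and `hosts_without_cpu_info` is a hypothetical helper name:

```python
import json


def hosts_without_cpu_info(response):
    """Return the hosts whose cpu_info field is null in a host-list response."""
    return [h["host"] for h in response.get("hosts", []) if h.get("cpu_info") is None]


# Abridged from the response posted in this thread; elided fields are left out.
sample = json.loads("""
{
  "hosts": [
    {"host": "10.103.6.113",
     "cpu_info": {"arch": "amd64", "logical_cores": 16, "physical_cores": 16}},
    {"host": "10.103.6.114",
     "cpu_info": null,
     "cpu_usage": {"idle": 0.97, "system": 0},
     "memory_usage": {"used": 2302750720, "total": 33567223808}}
  ],
  "warning": null
}
""")

print(hosts_without_cpu_info(sample))
```

On this sample only 10.103.6.114 is reported, the one host that runs TiFlash without a co-located PD.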

The warnings are as follows:

+---------+------+-------------------------------------------------------------------+
| Level   | Code | Message                                                           |
+---------+------+-------------------------------------------------------------------+
| Warning | 1105 | rpc error: code = Unknown desc = Unexpected error in RPC handling |
| Warning | 1105 | rpc error: code = Unknown desc = Unexpected error in RPC handling |
+---------+------+-------------------------------------------------------------------+

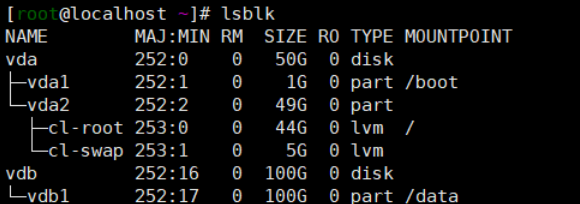

Could the disk device names be the cause?

Check tidb.log on the TiDB instance you are connected to for any errors related to the hardware information query.

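I found no errors related to hardware information, although there may be some errors caused by restarting the cluster along the way. The TiDB logs are attached: tidb.log (6.8 MB) tidb (2).log (2.9 MB)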

When I run select * from INFORMATION_SCHEMA.CLUSTER_HARDWARE where TYPE='tiflash'; tidb.log shows the following:

[2021/09/08 19:20:26.417 +08:00] [INFO] [grpclogger.go:77] ["parsed scheme: \"\""] [system=grpc] [grpc_log=true]

[2021/09/08 19:20:26.417 +08:00] [INFO] [grpclogger.go:77] ["scheme \"\" not registered, fallback to default scheme"] [system=grpc] [grpc_log=true]

[2021/09/08 19:20:26.417 +08:00] [INFO] [grpclogger.go:77] ["ccResolverWrapper: sending update to cc: {[{10.103.6.113:3930 0 }] }"] [system=grpc] [grpc_log=true]

[2021/09/08 19:20:26.417 +08:00] [INFO] [grpclogger.go:77] ["parsed scheme: \"\""] [system=grpc] [grpc_log=true]

[2021/09/08 19:20:26.417 +08:00] [INFO] [grpclogger.go:77] ["ClientConn switching balancer to \"pick_first\""] [system=grpc] [grpc_log=true]

[2021/09/08 19:20:26.417 +08:00] [INFO] [grpclogger.go:77] ["scheme \"\" not registered, fallback to default scheme"] [system=grpc] [grpc_log=true]

[2021/09/08 19:20:26.417 +08:00] [INFO] [grpclogger.go:77] ["ccResolverWrapper: sending update to cc: {[{10.103.6.114:3930 0 }] }"] [system=grpc] [grpc_log=true]

[2021/09/08 19:20:26.417 +08:00] [INFO] [grpclogger.go:77] ["blockingPicker: the picked transport is not ready, loop back to repick"] [system=grpc] [grpc_log=true]

[2021/09/08 19:20:26.417 +08:00] [INFO] [grpclogger.go:77] ["ClientConn switching balancer to \"pick_first\""] [system=grpc] [grpc_log=true]

[2021/09/08 19:20:26.417 +08:00] [INFO] [grpclogger.go:77] ["blockingPicker: the picked transport is not ready, loop back to repick"] [system=grpc] [grpc_log=true]

[2021/09/08 19:20:26.420 +08:00] [INFO] [grpclogger.go:77] ["attempt to delete child with id 4698 from a parent (id=4696) that doesn't currently exist"] [system=grpc] [grpc_log=true]

[2021/09/08 19:20:26.420 +08:00] [INFO] [grpclogger.go:77] ["attempt to delete child with id 4698 from a parent (id=4696) that doesn't currently exist"] [system=grpc] [grpc_log=true]

[2021/09/08 19:20:26.421 +08:00] [INFO] [grpclogger.go:77] ["attempt to delete child with id 4697 from a parent (id=4695) that doesn't currently exist"] [system=grpc] [grpc_log=true]

[2021/09/08 19:20:26.421 +08:00] [INFO] [grpclogger.go:77] ["attempt to delete child with id 4697 from a parent (id=4695) that doesn't currently exist"] [system=grpc] [grpc_log=true]

[2021/09/08 19:20:28.063 +08:00] [INFO] [grpclogger.go:77] ["ccResolverWrapper: sending new addresses to cc: [{http://10.103.6.113:2379 0 } {http://10.103.6.111:2379 0 } {http://10.103.6.112:2379 0 }]"] [system=grpc] [grpc_log=true]

[2021/09/08 19:20:37.184 +08:00] [INFO] [grpclogger.go:77] ["ccResolverWrapper: sending new addresses to cc: [{http://10.103.6.113:2379 0 } {http://10.103.6.111:2379 0 } {http://10.103.6.112:2379 0 }]"] [system=grpc] [grpc_log=true]

[2021/09/08 19:20:51.909 +08:00] [WARN] [grpclogger.go:85] ["grpc: Server.Serve failed to create ServerTransport: connection error: desc = \"transport: http2Server.HandleStreams failed to receive the preface from client: EOF\""] [system=grpc] [grpc_log=true]

[2021/09/08 19:20:58.065 +08:00] [INFO] [grpclogger.go:77] ["ccResolverWrapper: sending new addresses to cc: [{http://10.103.6.113:2379 0 } {http://10.103.6.111:2379 0 } {http://10.103.6.112:2379 0 }]"] [system=grpc] [grpc_log=true]

[2021/09/08 19:21:07.185 +08:00] [INFO] [grpclogger.go:77] ["ccResolverWrapper: sending new addresses to cc: [{http://10.103.6.113:2379 0 } {http://10.103.6.111:2379 0 } {http://10.103.6.112:2379 0 }]"] [system=grpc] [grpc_log=true]
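
Almost everything in this excerpt is INFO-level gRPC noise, so it can help to filter tidb.log down to WARN and ERROR lines around the query time. A minimal sketch, assuming the usual `[time] [LEVEL] [file:line] ...` layout seen above. `lines_at_level` is a hypothetical helper, and the two sample lines are taken from the excerpt with the inner quote escaping assumed:

```python
import re

# Matches the leading "[time] [LEVEL] [file:line]" fields of a TiDB log line.
LOG_LINE = re.compile(
    r"^\[(?P<time>[^\]]+)\] \[(?P<level>[A-Z]+)\] \[(?P<source>[^\]]+)\] (?P<rest>.*)$"
)


def lines_at_level(lines, levels=("WARN", "ERROR")):
    """Return (time, level, rest) for every parsable line at the given levels."""
    hits = []
    for line in lines:
        match = LOG_LINE.match(line.strip())
        if match and match.group("level") in levels:
            hits.append((match.group("time"), match.group("level"), match.group("rest")))
    return hits


SAMPLE = r'''
[2021/09/08 19:20:26.417 +08:00] [INFO] [grpclogger.go:77] ["blockingPicker: the picked transport is not ready, loop back to repick"] [system=grpc] [grpc_log=true]
[2021/09/08 19:20:51.909 +08:00] [WARN] [grpclogger.go:85] ["grpc: Server.Serve failed to create ServerTransport: connection error: desc = \"transport: http2Server.HandleStreams failed to receive the preface from client: EOF\""] [system=grpc] [grpc_log=true]
'''

for when, level, rest in lines_at_level(SAMPLE.splitlines()):
    print(when, level, rest)
```

On the excerpt above this leaves only the 19:20:51 WARN line, which was logged about 25 seconds after the query and so may be unrelated to it.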

Please confirm that node_exporter and blackbox_exporter on 114 started normally. Log in to that host to check.
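
A quick way to confirm that both exporters are at least listening is a plain TCP connect check from another machine. This is only a sketch: ports 9100 and 9115 are the listen addresses the exporters report in their logs in this thread, `port_open` and `check_exporters` are hypothetical helper names, and an open port shows only that the process is listening, not that it serves correct data.

```python
import socket


def port_open(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def check_exporters(host, ports, timeout=2.0):
    """Map each exporter name to whether its port accepts connections."""
    return {name: port_open(host, port, timeout) for name, port in ports.items()}


# Assumed ports, taken from the exporter logs posted in this thread.
EXPORTER_PORTS = {"node_exporter": 9100, "blackbox_exporter": 9115}
```

For example, `check_exporters("10.103.6.114", EXPORTER_PORTS)` returns a dict such as `{"node_exporter": True, "blackbox_exporter": True}` when both ports accept connections.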

They are running. The logs contain only info-level messages, as follows:

[root@localhost log]# more blackbox_exporter.log

level=info ts=2021-09-08T06:37:35.800793867Z caller=main.go:213 msg="Starting blackbox_exporter" version="(version=0.12.0, branch=HEAD, revision=4a22506cf0cf139d9b2f9cde099f0012d9fcabde)"

level=info ts=2021-09-08T06:37:35.801364777Z caller=main.go:220 msg="Loaded config file"

level=info ts=2021-09-08T06:37:35.801449296Z caller=main.go:324 msg="Listening on address" address=:9115

level=info ts=2021-09-08T09:09:15.59419779Z caller=main.go:213 msg="Starting blackbox_exporter" version="(version=0.12.0, branch=HEAD, revision=4a22506cf0cf139d9b2f9cde099f0012d9fcabde)"

level=info ts=2021-09-08T09:09:15.594828313Z caller=main.go:220 msg="Loaded config file"

level=info ts=2021-09-08T09:09:15.594977968Z caller=main.go:324 msg="Listening on address" address=:9115

[root@localhost log]# more node_exporter.log

time="2021-09-08T14:37:35+08:00" level=info msg="Starting node_exporter (version=0.17.0, branch=HEAD, revision=f6f6194a436b9a63d0439abc585c76b19a206b21)" source="node_exporter.go:82"

time="2021-09-08T14:37:35+08:00" level=info msg="Build context (go=go1.11.2, user=root@322511e06ced, date=20181130-15:51:33)" source="node_exporter.go:83"

time="2021-09-08T14:37:35+08:00" level=info msg="Enabled collectors:" source="node_exporter.go:90"

time="2021-09-08T14:37:35+08:00" level=info msg=" - arp" source="node_exporter.go:97"

time="2021-09-08T14:37:35+08:00" level=info msg=" - bcache" source="node_exporter.go:97"

time="2021-09-08T14:37:35+08:00" level=info msg=" - bonding" source="node_exporter.go:97"

time="2021-09-08T14:37:35+08:00" level=info msg=" - buddyinfo" source="node_exporter.go:97"

time="2021-09-08T14:37:35+08:00" level=info msg=" - conntrack" source="node_exporter.go:97"

time="2021-09-08T14:37:35+08:00" level=info msg=" - cpu" source="node_exporter.go:97"

time="2021-09-08T14:37:35+08:00" level=info msg=" - diskstats" source="node_exporter.go:97"

time="2021-09-08T14:37:35+08:00" level=info msg=" - edac" source="node_exporter.go:97"

time="2021-09-08T14:37:35+08:00" level=info msg=" - entropy" source="node_exporter.go:97"

time="2021-09-08T14:37:35+08:00" level=info msg=" - filefd" source="node_exporter.go:97"

time="2021-09-08T14:37:35+08:00" level=info msg=" - filesystem" source="node_exporter.go:97"

time="2021-09-08T14:37:35+08:00" level=info msg=" - hwmon" source="node_exporter.go:97"

time="2021-09-08T14:37:35+08:00" level=info msg=" - infiniband" source="node_exporter.go:97"

time="2021-09-08T14:37:35+08:00" level=info msg=" - interrupts" source="node_exporter.go:97"

time="2021-09-08T14:37:35+08:00" level=info msg=" - ipvs" source="node_exporter.go:97"

time="2021-09-08T14:37:35+08:00" level=info msg=" - loadavg" source="node_exporter.go:97"

time="2021-09-08T14:37:35+08:00" level=info msg=" - mdadm" source="node_exporter.go:97"

time="2021-09-08T14:37:35+08:00" level=info msg=" - meminfo" source="node_exporter.go:97"

time="2021-09-08T14:37:35+08:00" level=info msg=" - meminfo_numa" source="node_exporter.go:97"

time="2021-09-08T14:37:35+08:00" level=info msg=" - mountstats" source="node_exporter.go:97"

time="2021-09-08T14:37:35+08:00" level=info msg=" - netclass" source="node_exporter.go:97"

time="2021-09-08T14:37:35+08:00" level=info msg=" - netdev" source="node_exporter.go:97"

time="2021-09-08T14:37:35+08:00" level=info msg=" - netstat" source="node_exporter.go:97"

time="2021-09-08T14:37:35+08:00" level=info msg=" - nfs" source="node_exporter.go:97"

time="2021-09-08T14:37:35+08:00" level=info msg=" - nfsd" source="node_exporter.go:97"

time="2021-09-08T14:37:35+08:00" level=info msg=" - sockstat" source="node_exporter.go:97"

time="2021-09-08T14:37:35+08:00" level=info msg=" - stat" source="node_exporter.go:97"

time="2021-09-08T14:37:35+08:00" level=info msg=" - systemd" source="node_exporter.go:97"

time="2021-09-08T14:37:35+08:00" level=info msg=" - tcpstat" source="node_exporter.go:97"

time="2021-09-08T14:37:35+08:00" level=info msg=" - textfile" source="node_exporter.go:97"

time="2021-09-08T14:37:35+08:00" level=info msg=" - time" source="node_exporter.go:97"

time="2021-09-08T14:37:35+08:00" level=info msg=" - timex" source="node_exporter.go:97"

time="2021-09-08T14:37:35+08:00" level=info msg=" - uname" source="node_exporter.go:97"

time="2021-09-08T14:37:35+08:00" level=info msg=" - vmstat" source="node_exporter.go:97"

time="2021-09-08T14:37:35+08:00" level=info msg=" - xfs" source="node_exporter.go:97"

time="2021-09-08T14:37:35+08:00" level=info msg=" - zfs" source="node_exporter.go:97"

time="2021-09-08T14:37:35+08:00" level=info msg="Listening on :9100" source="node_exporter.go:111"

time="2021-09-08T17:09:15+08:00" level=info msg="Starting node_exporter (version=0.17.0, branch=HEAD, revision=f6f6194a436b9a63d0439abc585c76b19a206b21)" source="node_exporter.go:82"

time="2021-09-08T17:09:15+08:00" level=info msg="Build context (go=go1.11.2, user=root@322511e06ced, date=20181130-15:51:33)" source="node_exporter.go:83"

time="2021-09-08T17:09:15+08:00" level=info msg="Enabled collectors:" source="node_exporter.go:90"

time="2021-09-08T17:09:15+08:00" level=info msg=" - arp" source="node_exporter.go:97"

time="2021-09-08T17:09:15+08:00" level=info msg=" - bcache" source="node_exporter.go:97"

time="2021-09-08T17:09:15+08:00" level=info msg=" - bonding" source="node_exporter.go:97"

time="2021-09-08T17:09:15+08:00" level=info msg=" - buddyinfo" source="node_exporter.go:97"

time="2021-09-08T17:09:15+08:00" level=info msg=" - conntrack" source="node_exporter.go:97"

time="2021-09-08T17:09:15+08:00" level=info msg=" - cpu" source="node_exporter.go:97"

time="2021-09-08T17:09:15+08:00" level=info msg=" - diskstats" source="node_exporter.go:97"

time="2021-09-08T17:09:15+08:00" level=info msg=" - edac" source="node_exporter.go:97"

time="2021-09-08T17:09:15+08:00" level=info msg=" - entropy" source="node_exporter.go:97"

time="2021-09-08T17:09:15+08:00" level=info msg=" - filefd" source="node_exporter.go:97"

time="2021-09-08T17:09:15+08:00" level=info msg=" - filesystem" source="node_exporter.go:97"

time="2021-09-08T17:09:15+08:00" level=info msg=" - hwmon" source="node_exporter.go:97"

time="2021-09-08T17:09:15+08:00" level=info msg=" - infiniband" source="node_exporter.go:97"

time="2021-09-08T17:09:15+08:00" level=info msg=" - interrupts" source="node_exporter.go:97"

time="2021-09-08T17:09:15+08:00" level=info msg=" - ipvs" source="node_exporter.go:97"

time="2021-09-08T17:09:15+08:00" level=info msg=" - loadavg" source="node_exporter.go:97"

time="2021-09-08T17:09:15+08:00" level=info msg=" - mdadm" source="node_exporter.go:97"

time="2021-09-08T17:09:15+08:00" level=info msg=" - meminfo" source="node_exporter.go:97"

time="2021-09-08T17:09:15+08:00" level=info msg=" - meminfo_numa" source="node_exporter.go:97"

time="2021-09-08T17:09:15+08:00" level=info msg=" - mountstats" source="node_exporter.go:97"

time="2021-09-08T17:09:15+08:00" level=info msg=" - netclass" source="node_exporter.go:97"

time="2021-09-08T17:09:15+08:00" level=info msg=" - netdev" source="node_exporter.go:97"

time="2021-09-08T17:09:15+08:00" level=info msg=" - netstat" source="node_exporter.go:97"

time="2021-09-08T17:09:15+08:00" level=info msg=" - nfs" source="node_exporter.go:97"

time="2021-09-08T17:09:15+08:00" level=info msg=" - nfsd" source="node_exporter.go:97"

time="2021-09-08T17:09:15+08:00" level=info msg=" - sockstat" source="node_exporter.go:97"

time="2021-09-08T17:09:15+08:00" level=info msg=" - stat" source="node_exporter.go:97"

time="2021-09-08T17:09:15+08:00" level=info msg=" - systemd" source="node_exporter.go:97"

time="2021-09-08T17:09:15+08:00" level=info msg=" - tcpstat" source="node_exporter.go:97"

time="2021-09-08T17:09:15+08:00" level=info msg=" - textfile" source="node_exporter.go:97"

time="2021-09-08T17:09:15+08:00" level=info msg=" - time" source="node_exporter.go:97"

time="2021-09-08T17:09:15+08:00" level=info msg=" - timex" source="node_exporter.go:97"

time="2021-09-08T17:09:15+08:00" level=info msg=" - uname" source="node_exporter.go:97"

time="2021-09-08T17:09:15+08:00" level=info msg=" - vmstat" source="node_exporter.go:97"

time="2021-09-08T17:09:15+08:00" level=info msg=" - xfs" source="node_exporter.go:97"

time="2021-09-08T17:09:15+08:00" level=info msg=" - zfs" source="node_exporter.go:97"

time="2021-09-08T17:09:15+08:00" level=info msg="Listening on :9100" source="node_exporter.go:111"