Query

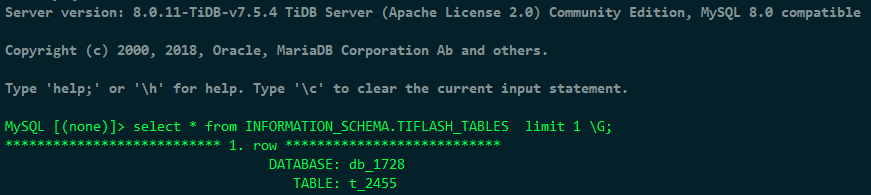

```
mysql> select * from INFORMATION_SCHEMA.TIFLASH_TABLES limit 1\G
ERROR 1105 (HY000): rpc error: code = DeadlineExceeded desc = context deadline exceeded
```

The detailed error log is as follows:

```
[2024/11/28 15:37:00.441 +08:00] [INFO] [conn.go:1131] ["command dispatched failed"] [conn=851670586] [session_alias=] [connInfo="id:851670586, addr:172.18.251.224:43088 status:10, collation:utf8mb4_0900_ai_ci, user:root"] [command=Query] [status="inTxn:0, autocommit:1"] [sql="select * from INFORMATION_SCHEMA.TIFLASH_TABLES limit 1"] [txn_mode=PESSIMISTIC] [timestamp=0] [err="rpc error: code = DeadlineExceeded desc = context deadline exceeded\ngithub.com/tikv/client-go/v2/tikvrpc.CallRPC\n\t/root/go/pkg/mod/github.com/tikv/client-go/v2@v2.0.8-0.20240920100427-3725b31fa3c0/tikvrpc/tikvrpc.go:1095\ngithub.com/tikv/client-go/v2/internal/client.(*RPCClient).sendRequest\n\t/root/go/pkg/mod/github.com/tikv/client-go/v2@v2.0.8-0.20240920100427-3725b31fa3c0/internal/client/client.go:654\ngithub.com/tikv/client-go/v2/internal/client.(*RPCClient).SendRequest\n\t/root/go/pkg/mod/github.com/tikv/client-go/v2@v2.0.8-0.20240920100427-3725b31fa3c0/internal/client/client.go:669\ngithub.com/pingcap/tidb/pkg/store/driver.(*injectTraceClient).SendRequest\n\t/workspace/source/tidb/pkg/store/driver/tikv_driver.go:431\ngithub.com/tikv/client-go/v2/internal/client.interceptedClient.SendRequest\n\t/root/go/pkg/mod/github.com/tikv/client-go/v2@v2.0.8-0.20240920100427-3725b31fa3c0/internal/client/client_interceptor.go:60\ngithub.com/tikv/client-go/v2/internal/client.reqCollapse.SendRequest\n\t/root/go/pkg/mod/github.com/tikv/client-go/v2@v2.0.8-0.20240920100427-3725b31fa3c0/internal/client/client_collapse.go:74\ngithub.com/pingcap/tidb/pkg/executor.(*TiFlashSystemTableRetriever).dataForTiFlashSystemTables\n\t/workspace/source/tidb/pkg/executor/infoschema_reader.go:3083\ngithub.com/pingcap/tidb/pkg/executor.(*TiFlashSystemTableRetriever).retrieve\n\t/workspace/source/tidb/pkg/executor/infoschema_reader.go:3008\ngithub.com/pingcap/tidb/pkg/executor.(*MemTableReaderExec).Next\n\t/workspace/source/tidb/pkg/executor/memtable_reader.go:119\ngithub.com/pingcap/tidb/pkg/executor/internal/exec.Next\n\t/workspace/source/tidb/pkg/executor/internal/exec/executor.go:283\ngithub.com/pingcap/tidb/pkg/executor.(*LimitExec).Next\n\t/workspace/source/tidb/pkg/executor/executor.go:1369\ngithub.com/pingcap/tidb/pkg/executor/internal/exec.Next\n\t/workspace/source/tidb/pkg/executor/internal/exec/executor.go:283\ngithub.com/pingcap/tidb/pkg/executor.(*ExecStmt).next\n\t/workspace/source/tidb/pkg/executor/adapter.go:1216\ngithub.com/pingcap/tidb/pkg/executor.(*recordSet).Next\n\t/workspace/source/tidb/pkg/executor/adapter.go:156\ngithub.com/pingcap/tidb/pkg/server/internal/resultset.(*tidbResultSet).Next\n\t/workspace/source/tidb/pkg/server/internal/resultset/resultset.go:62\ngithub.com/pingcap/tidb/pkg/server.(*clientConn).writeChunks\n\t/workspace/source/tidb/pkg/server/conn.go:2290\ngithub.com/pingcap/tidb/pkg/server.(*clientConn).writeResultSet\n\t/workspace/source/tidb/pkg/server/conn.go:2233\ngithub.com/pingcap/tidb/pkg/server.(*clientConn).handleStmt\n\t/workspace/source/tidb/pkg/server/conn.go:2101\ngithub.com/pingcap/tidb/pkg/server.(*clientConn).handleQuery\n\t/workspace/source/tidb/pkg/server/conn.go:1838\ngithub.com/pingcap/tidb/pkg/server.(*clientConn).dispatch\n\t/workspace/source/tidb/pkg/server/conn.go:1325\ngithub.com/pingcap/tidb/pkg/server.(*clientConn).Run\n\t/workspace/source/tidb/pkg/server/conn.go:1098\ngithub.com/pingcap/tidb/pkg/server.(*Server).onConn\n\t/workspace/source/tidb/pkg/server/server.go:737\nruntime.goexit\n\t/usr/local/go/src/runtime/asm_amd64.s:1650\ngithub.com/pingcap/errors.AddStack\n\t/root/go/pkg/mod/github.com/pingcap/errors@v0.11.5-0.20240318064555-6bd07397691f/errors.go:178\ngithub.com/pingcap/errors.Trace\n\t/root/go/pkg/mod/github.com/pingcap/errors@v0.11.5-0.20240318064555-6bd07397691f/juju_adaptor.go:15\ngithub.com/pingcap/tidb/pkg/executor.(*TiFlashSystemTableRetriever).dataForTiFlashSystemTables\n\t/workspace/source/tidb/pkg/executor/infoschema_reader.go:3085\ngithub.com/pingcap/tidb/pkg/executor.(*TiFlashSystemTableRetriever).retrieve\n\t/workspace/source/tidb/pkg/executor/infoschema_reader.go:3008\ngithub.com/pingcap/tidb/pkg/executor.(*MemTableReaderExec).Next\n\t/workspace/source/tidb/pkg/executor/memtable_reader.go:119\ngithub.com/pingcap/tidb/pkg/executor/internal/exec.Next\n\t/workspace/source/tidb/pkg/executor/internal/exec/executor.go:283\ngithub.com/pingcap/tidb/pkg/executor.(*LimitExec).Next\n\t/workspace/source/tidb/pkg/executor/executor.go:1369\ngithub.com/pingcap/tidb/pkg/executor/internal/exec.Next\n\t/workspace/source/tidb/pkg/executor/internal/exec/executor.go:283\ngithub.com/pingcap/tidb/pkg/executor.(*ExecStmt).next\n\t/workspace/source/tidb/pkg/executor/adapter.go:1216\ngithub.com/pingcap/tidb/pkg/executor.(*recordSet).Next\n\t/workspace/source/tidb/pkg/executor/adapter.go:156\ngithub.com/pingcap/tidb/pkg/server/internal/resultset.(*tidbResultSet).Next\n\t/workspace/source/tidb/pkg/server/internal/resultset/resultset.go:62\ngithub.com/pingcap/tidb/pkg/server.(*clientConn).writeChunks\n\t/workspace/source/tidb/pkg/server/conn.go:2290\ngithub.com/pingcap/tidb/pkg/server.(*clientConn).writeResultSet\n\t/workspace/source/tidb/pkg/server/conn.go:2233\ngithub.com/pingcap/tidb/pkg/server.(*clientConn).handleStmt\n\t/workspace/source/tidb/pkg/server/conn.go:2101\ngithub.com/pingcap/tidb/pkg/server.(*clientConn).handleQuery\n\t/workspace/source/tidb/pkg/server/conn.go:1838\ngithub.com/pingcap/tidb/pkg/server.(*clientConn).dispatch\n\t/workspace/source/tidb/pkg/server/conn.go:1325\ngithub.com/pingcap/tidb/pkg/server.(*clientConn).Run\n\t/workspace/source/tidb/pkg/server/conn.go:1098\ngithub.com/pingcap/tidb/pkg/server.(*Server).onConn\n\t/workspace/source/tidb/pkg/server/server.go:737\nruntime.goexit\n\t/usr/local/go/src/runtime/asm_amd64.s:1650"]
```