【TiDB environment】Production

【TiDB version】7.1.0

【Steps to reproduce】None

【Problem: symptoms and impact】

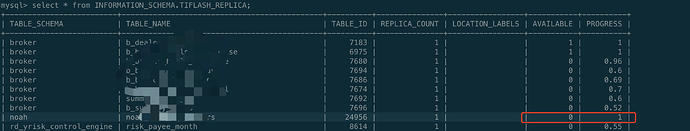

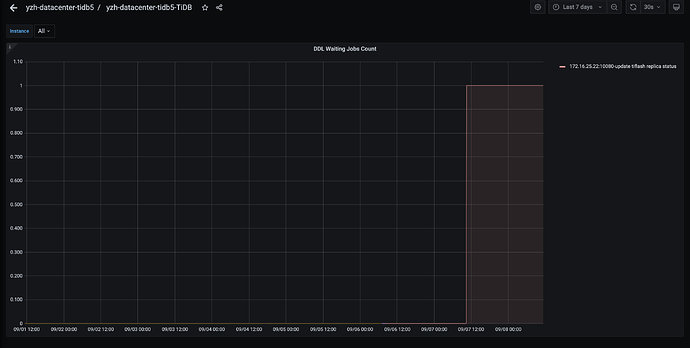

TiFlash is replicating a large amount of data and has stalled: progress has barely changed for a full day.

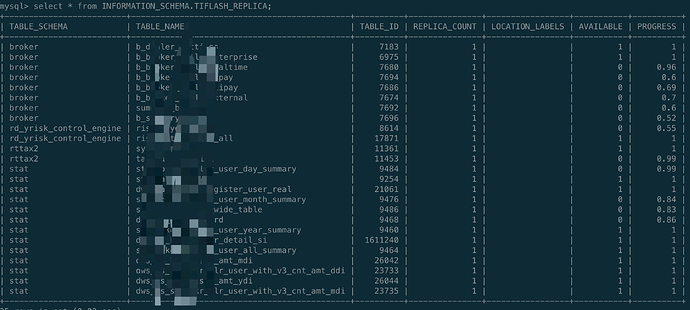

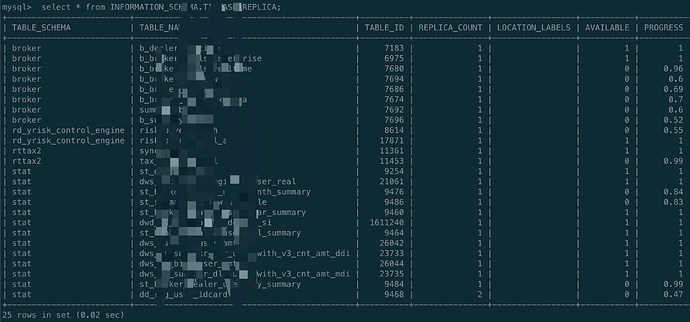

One day ago

Current

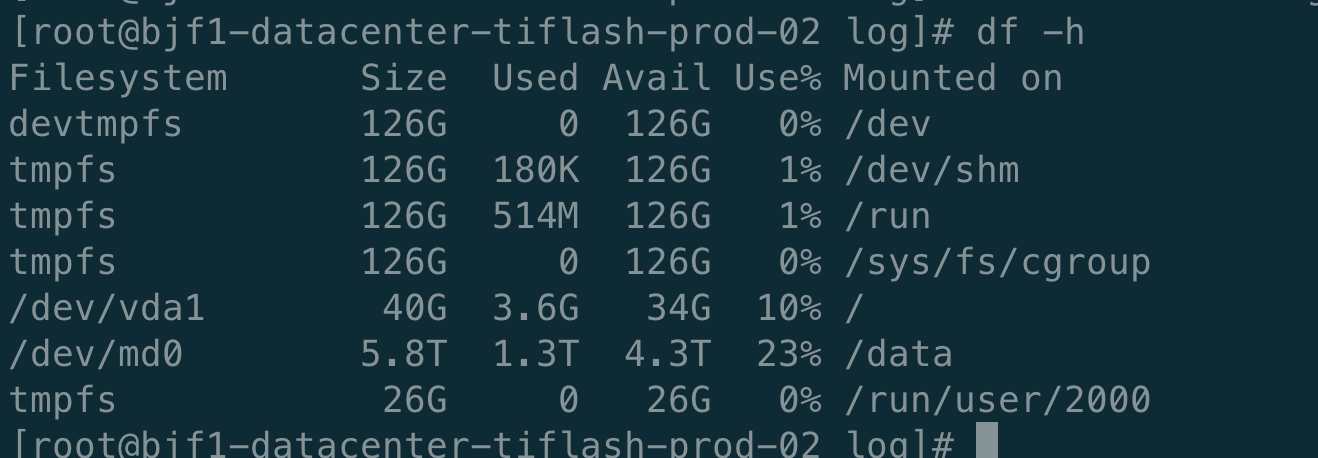

Disk usage. The storage is four 1.6 TB disks configured as RAID 0.

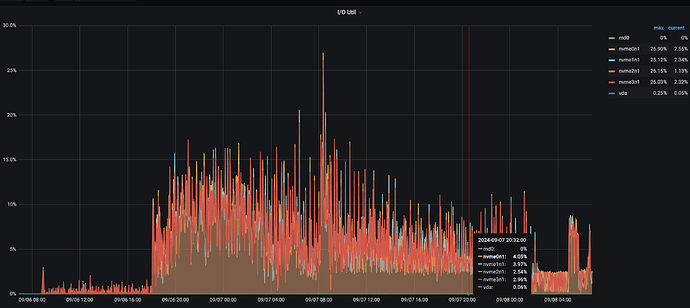

All four disks also show write I/O.

【Attachments: screenshots/logs/monitoring】

The error below is sporadic in the logs; it has appeared only twice in total.

[2024/09/07 04:28:02.471 +08:00] [ERROR] [WriteBatchesImpl.h:72] ["!!!=========================Modifications in meta haven't persisted=========================!!! Stack trace: \n 0x735d82c\tDB::DM::WriteBatches::~WriteBatches()::'lambda'(DB::WriteBatchWrapper const&, std::__1::basic_string<char, std::__1::char_traits<char>, std::__1::allocator<char> > const&)::operator()(DB::WriteBatchWrapper const&, std::__1::basic_string<char, std::__1::char_traits<char>, std::__1::allocator<char> > const&) const [tiflash+120969260]\n \tdbms/src/Storages/DeltaMerge/WriteBatchesImpl.h:68\n 0x734bbe8\tDB::DM::WriteBatches::~WriteBatches() [tiflash+120896488]\n \tdbms/src/Storages/DeltaMerge/WriteBatchesImpl.h:77\n 0x738498e\tDB::DM::DeltaMergeStore::segmentMerge(DB::DM::DMContext&, std::__1::vector<std::__1::shared_ptr<DB::DM::Segment>, std::__1::allocator<std::__1::shared_ptr<DB::DM::Segment> > > const&, DB::DM::DeltaMergeStore::SegmentMergeReason) [tiflash+121129358]\n \tdbms/src/Storages/DeltaMerge/DeltaMergeStore_InternalSegment.cpp:344\n 0x737812c\tDB::DM::DeltaMergeStore::onSyncGc(long, DB::DM::GCOptions const&) [tiflash+121078060]\n \tdbms/src/Storages/DeltaMerge/DeltaMergeStore_InternalBg.cpp:889\n 0x8001376\tDB::GCManager::work() [tiflash+134222710]\n \tdbms/src/Storages/GCManager.cpp:105\n 0x7e20bab\tvoid* std::__1::__thread_proxy<std::__1::tuple<std::__1::unique_ptr<std::__1::__thread_struct, std::__1::default_delete<std::__1::__thread_struct> >, DB::BackgroundProcessingPool::BackgroundProcessingPool(int, std::__1::basic_string<char, std::__1::char_traits<char>, std::__1::allocator<char> >)::$_1> >(void*) [tiflash+132254635]\n \t/usr/local/bin/../include/c++/v1/thread:291\n 0x7fa43a8fbea5\tstart_thread [libpthread.so.0+32421]\n 0x7fa43a20ab0d\tclone [libc.so.6+1043213]"] [thread_id=19]

[2024/09/07 12:40:12.862 +08:00] [ERROR] [WriteBatchesImpl.h:72] ["!!!=========================Modifications in meta haven't persisted=========================!!! Stack trace: \n 0x735d82c\tDB::DM::WriteBatches::~WriteBatches()::'lambda'(DB::WriteBatchWrapper const&, std::__1::basic_string<char, std::__1::char_traits<char>, std::__1::allocator<char> > const&)::operator()(DB::WriteBatchWrapper const&, std::__1::basic_string<char, std::__1::char_traits<char>, std::__1::allocator<char> > const&) const [tiflash+120969260]\n \tdbms/src/Storages/DeltaMerge/WriteBatchesImpl.h:68\n 0x734bbe8\tDB::DM::WriteBatches::~WriteBatches() [tiflash+120896488]\n \tdbms/src/Storages/DeltaMerge/WriteBatchesImpl.h:77\n 0x738498e\tDB::DM::DeltaMergeStore::segmentMerge(DB::DM::DMContext&, std::__1::vector<std::__1::shared_ptr<DB::DM::Segment>, std::__1::allocator<std::__1::shared_ptr<DB::DM::Segment> > > const&, DB::DM::DeltaMergeStore::SegmentMergeReason) [tiflash+121129358]\n \tdbms/src/Storages/DeltaMerge/DeltaMergeStore_InternalSegment.cpp:344\n 0x737812c\tDB::DM::DeltaMergeStore::onSyncGc(long, DB::DM::GCOptions const&) [tiflash+121078060]\n \tdbms/src/Storages/DeltaMerge/DeltaMergeStore_InternalBg.cpp:889\n 0x8001376\tDB::GCManager::work() [tiflash+134222710]\n \tdbms/src/Storages/GCManager.cpp:105\n 0x7e20bab\tvoid* std::__1::__thread_proxy<std::__1::tuple<std::__1::unique_ptr<std::__1::__thread_struct, std::__1::default_delete<std::__1::__thread_struct> >, DB::BackgroundProcessingPool::BackgroundProcessingPool(int, std::__1::basic_string<char, std::__1::char_traits<char>, std::__1::allocator<char> >)::$_1> >(void*) [tiflash+132254635]\n \t/usr/local/bin/../include/c++/v1/thread:291\n 0x7fa43a8fbea5\tstart_thread [libpthread.so.0+32421]\n 0x7fa43a20ab0d\tclone [libc.so.6+1043213]"] [thread_id=10]
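For anyone who wants to double-check how often this error shows up, here is a minimal Python sketch that scans log lines for the "Modifications in meta haven't persisted" marker and reports the timestamp, source location, and thread ID of each hit. It assumes the bracketed header layout seen in the two entries above (`[timestamp] [LEVEL] [source:line] ...`). The function name `find_meta_errors` and the shortened `SAMPLE` lines are illustrative only; the `INFO` line in the sample is made-up filler, not something taken from the real log.

```python
import re

# Header layout assumed from the two log entries quoted above:
# [timestamp] [LEVEL] [source:line] ["message..."] [thread_id=N]
LOG_HEADER = re.compile(
    r'^\[(?P<ts>[^\]]+)\] \[(?P<level>[A-Z]+)\] \[(?P<source>[^\]]+)\] '
)
THREAD_ID = re.compile(r'\[thread_id=(\d+)\]\s*$')
MARKER = "Modifications in meta haven't persisted"


def find_meta_errors(lines):
    """Return (timestamp, source, thread_id) for each ERROR line containing MARKER."""
    hits = []
    for line in lines:
        header = LOG_HEADER.match(line)
        if header is None or header.group("level") != "ERROR":
            continue
        if MARKER not in line:
            continue
        thread = THREAD_ID.search(line)
        hits.append(
            (
                header.group("ts"),
                header.group("source"),
                thread.group(1) if thread else None,
            )
        )
    return hits


# Shortened stand-ins for the two real entries, plus one invented INFO line.
SAMPLE = [
    '[2024/09/07 04:28:02.471 +08:00] [ERROR] [WriteBatchesImpl.h:72] '
    '["!!!===Modifications in meta haven\'t persisted===!!! Stack trace: ..."] [thread_id=19]',
    '[2024/09/07 05:00:00.000 +08:00] [INFO] [Example.cpp:1] ["filler line"] [thread_id=1]',
    '[2024/09/07 12:40:12.862 +08:00] [ERROR] [WriteBatchesImpl.h:72] '
    '["!!!===Modifications in meta haven\'t persisted===!!! Stack trace: ..."] [thread_id=10]',
]

for ts, source, thread_id in find_meta_errors(SAMPLE):
    print(ts, source, "thread", thread_id)
```

To run it against a real log, pass an open file instead of `SAMPLE`, for example `find_meta_errors(open(path, encoding="utf-8"))`, where `path` is wherever your TiFlash error log lives (that location depends on the deployment, so it is not hard-coded here).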