limits Fail soft limit of ‘nofile’ for user ‘tidb’ is not set or too low

limits Fail hard limit of ‘nofile’ for user ‘tidb’ is not set or too low

limits Fail soft limit of ‘stack’ for user ‘tidb’ is not set or too low

service Fail service irqbalance not found, should be installed and started

command Fail numactl not usable, bash: line 1: numactl: command not found

Do these failures matter?
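For reference, the first three items are per-user resource limits for the deployment user. Here is a minimal sketch. It assumes the values from TiDB's OS configuration guide (nofile 1000000, stack 32768 KB) and the user `tidb`. It only prints the entries, so they can be reviewed before being appended to `/etc/security/limits.conf`.

```bash
#!/usr/bin/env bash
# Print /etc/security/limits.conf entries for the TiDB deployment user.
# Assumption: values follow the TiDB OS configuration guide;
# the user name defaults to "tidb", as in the check output above.
print_tidb_limits() {
  local user="${1:-tidb}"
  local entry kind item value
  # nofile = max open file descriptors, stack = max stack size in KB
  for entry in "soft nofile 1000000" "hard nofile 1000000" \
               "soft stack 32768" "hard stack 32768"; do
    read -r kind item value <<< "$entry"
    printf '%s\t%s\t%s\t%s\n' "$user" "$kind" "$item" "$value"
  done
}
```

For example, run `print_tidb_limits | sudo tee -a /etc/security/limits.conf`, then log in again as `tidb` and check `ulimit -n` and `ulimit -s`. The numactl item is fixed by installing the `numactl` package. The irqbalance item is fixed by installing and starting the `irqbalance` service. `tiup cluster check` also has an `--apply` option that tries to fix some failed checks automatically.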

[2024/09/04 21:56:38.287 +08:00] [WARN] [endpoint.rs:823] [error-response] [err=“Region error (will back off and retry) message: "region 11938184 is missing" region_not_found { region_id: 11938184 }”]

[2024/09/04 21:56:38.365 +08:00] [WARN] [endpoint.rs:823] [error-response] [err=“Region error (will back off and retry) message: "region 11938184 is missing" region_not_found { region_id: 11938184 }”]

[2024/09/04 21:56:38.478 +08:00] [WARN] [endpoint.rs:823] [error-response] [err=“Region error (will back off and retry) message: "region 11938184 is missing" region_not_found { region_id: 11938184 }”]

[2024/09/04 21:56:38.603 +08:00] [WARN] [endpoint.rs:823] [error-response] [err=“Region error (will back off and retry) message: "region 11938184 is missing" region_not_found { region_id: 11938184 }”]

[2024/09/04 21:56:38.680 +08:00] [WARN] [endpoint.rs:823] [error-response] [err=“Region error (will back off and retry) message: "region 11938184 is missing" region_not_found { region_id: 11938184 }”]

[2024/09/04 21:56:38.970 +08:00] [WARN] [endpoint.rs:823] [error-response] [err=“Region error (will back off and retry) message: "region 11938184 is missing" region_not_found { region_id: 11938184 }”]

[2024/09/04 21:56:40.178 +08:00] [WARN] [endpoint.rs:823] [error-response] [err=“Key is locked (will clean up) primary_lock: 748000000000000C335F72800000000008C717 lock_version: 452318514340692125 key: 748000000000000C335F72800000000008C717 lock_ttl: 20001 txn_size: 1 lock_for_update_ts: 452318514340692125 use_async_commit: true min_commit_ts: 452318514419335288 secondaries: 748000000000000C335F6980000000000000010380000006A22044A2 secondaries: 748000000000000C335F6980000000000000020380000001EC025B81”]

[2024/09/04 21:56:40.184 +08:00] [WARN] [endpoint.rs:823] [error-response] [err=“Key is locked (will clean up) primary_lock: 748000000000000C335F72800000000008C717 lock_version: 452318514340692125 key: 748000000000000C335F72800000000008C717 lock_ttl: 20001 txn_size: 1 lock_for_update_ts: 452318514340692125 use_async_commit: true min_commit_ts: 452318514419335288 secondaries: 748000000000000C335F6980000000000000010380000006A22044A2 secondaries: 748000000000000C335F6980000000000000020380000001EC025B81”]

[2024/09/04 21:56:40.187 +08:00] [WARN] [endpoint.rs:823] [error-response] [err=“Key is locked (will clean up) primary_lock: 748000000000000C335F72800000000008C717 lock_version: 452318514340692125 key: 748000000000000C335F72800000000008C717 lock_ttl: 20001 txn_size: 1 lock_for_update_ts: 452318514340692125 use_async_commit: true min_commit_ts: 452318514419335288 secondaries: 748000000000000C335F6980000000000000010380000006A22044A2 secondaries: 748000000000000C335F6980000000000000020380000001EC025B81”]

[2024/09/04 21:56:54.015 +08:00] [WARN] [endpoint.rs:823] [error-response] [err=“Key is locked (will clean up) primary_lock: 748000000000000C335F728000000000084D73 lock_version: 452318517971386550 key: 748000000000000C335F728000000000084D73 lock_ttl: 20001 txn_size: 1 lock_for_update_ts: 452318517971386550 use_async_commit: true min_commit_ts: 452318518050029633 secondaries: 748000000000000C335F6980000000000000010380000004F188C70F secondaries: 748000000000000C335F69800000000000000203800000022411C289”]

[2024/09/04 21:57:05.095 +08:00] [WARN] [endpoint.rs:823] [error-response] [err=“Key is locked (will clean up) primary_lock: 748000000000000C335F728000000000084BD6 lock_version: 452318520868077652 key: 748000000000000C335F728000000000084BD6 lock_ttl: 20000 txn_size: 1 lock_for_update_ts: 452318520868077652 use_async_commit: true min_commit_ts: 452318520946983156 secondaries: 748000000000000C335F698000000000000001038000000432A146FD secondaries: 748000000000000C335F6980000000000000020380000000843B0EAB”]

These are the errors from one of the TiKV nodes.
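The lock_version, lock_for_update_ts and min_commit_ts fields in these warnings are PD timestamps (TSOs). Below is a small sketch for turning one into a time that can be lined up with the backup. It assumes the usual TSO layout: physical time in milliseconds shifted left by 18 bits, plus an 18-bit logical counter. `tiup ctl:v<version> pd tso <ts>` should give the same breakdown. `decode_tso` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Split a PD timestamp (TSO), e.g. lock_version in the TiKV log, into parts.
# Assumed layout: high bits = physical time in ms, low 18 bits = logical counter.
decode_tso() {
  local ts="$1"
  if [[ ! $ts =~ ^[0-9]+$ ]]; then
    echo "not a TSO: $ts" >&2
    return 1
  fi
  local physical_ms=$(( ts >> 18 ))
  local logical=$(( ts & ((1 << 18) - 1) ))
  printf 'physical_ms=%s logical=%s\n' "$physical_ms" "$logical"
}
```

With GNU date, `date -d "@$(( (452318514340692125 >> 18) / 1000 ))"` prints the wall-clock second of the first lock above. lock_ttl is around 20000 ms here, so these look like short-lived transaction locks that TiKV cleans up on its own. That matches the "will clean up" in the message.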

Database Backup <…> 0.00%

Database Backup <…> 0.00% (and the progress bar just stops moving)

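When I stop the script with Ctrl+C, it prints this error at the end. The key contains non-printable bytes, so the console output breaks it apart:

Error: can not find a valid leader for key t [binary bytes] 5_r: [BR:Backup:ErrBackupNoLeader]backup no leader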

The error in the backup script's log file is also quite clear:

[2024/09/04 22:03:18.859 +08:00] [ERROR] [main.go:60] [“br failed”] [error=“can not find a valid leader for key t\ufffd\u0000\u0000\u0000\u0000\u0000\u000b\ufffd5_r\u0003\ufffd\u0000\u0000\u0000\ufffd\u0000\ufffd\ufffd\u0003\ufffd\u0000\u0000\ufffd\u0000\u0000\u0000\u0000\u0001\u0003\ufffd\u0000\ufffd\u0000\u0000\u0000\u0000\u0001\ufffd\u0000\u0000\ufffd: [BR:Backup:ErrBackupNoLeader]backup no leader”] [errorVerbose=“[BR:Backup:ErrBackupNoLeader]backup no leader\ncan not find a valid leader for key t\ufffd\u0000\u0000\u0000\u0000\u0000\u000b\ufffd5_r\u0003\ufffd\u0000\u0000\u0000\ufffd\u0000\ufffd\ufffd\u0003\ufffd\u0000\u0000\ufffd\u0000\u0000\u0000\u0000\u0001\u0003\ufffd\u0000\ufffd\u0000\u0000\u0000\u0000\u0001\ufffd\u0000\u0000\ufffd\ngithub.com/pingcap/tidb/br/pkg/backup.(*Client).findTargetPeer\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/br/br/pkg/backup/client.go:1025\ngithub.com/pingcap/tidb/br/pkg/backup.(*Client).handleFineGrained\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/br/br/pkg/backup/client.go:1228\ngithub.com/pingcap/tidb/br/pkg/backup.(*Client).fineGrainedBackup.func2\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/br/br/pkg/backup/client.go:1080\nruntime.goexit\n\t/usr/local/go/src/runtime/asm_amd64.s:1598”] [stack=“main.main\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/br/br/cmd/br/main.go:60\nruntime.main\n\t/usr/local/go/src/runtime/proc.go:250”]

It says this key has no leader, but which key is it? In this file the keys all appear as binary names like this, so there's no way to look them up. Could it be connected to this error on the TiKV node?

[2024/09/04 21:57:05.095 +08:00] [WARN] [endpoint.rs:823] [error-response] [err=“Key is locked (will clean up) primary_lock: 748000000000000C335F728000000000084BD6 lock_version: 452318520868077652 key: 748000000000000C335F728000000000084BD6 lock_ttl: 20000 txn_size: 1 lock_for_update_ts: 452318520868077652 use_async_commit: true min_commit_ts: 452318520946983156 secondaries: 748000000000000C335F698000000000000001038000000432A146FD secondaries: 748000000000000C335F6980000000000000020380000000843B0EAB”]
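The hex keys in the TiKV log can be decoded by hand. Below is a minimal sketch. It assumes the standard TiDB row-key layout: `t`, an 8-byte table ID, `_r`, and an 8-byte row handle, with each integer stored big-endian and its sign bit flipped. It also assumes an integer handle (not a clustered-index common handle) and non-negative values. The function names are hypothetical, and it needs bash 4+.

```bash
#!/usr/bin/env bash
# Decode a hex TiDB row key as printed in TiKV logs (primary_lock / key):
#   74 ('t') + 8-byte table ID + 5F72 ('_r') + 8-byte row handle
# Assumptions: integer row handle and non-negative values, so each 8-byte
# field starts with a hex digit 8-F. Requires bash 4+ for ${var^^}.

# Decode one 16-hex-digit comparable int64 (its sign bit is flipped).
decode_comparable_int64() {
  local hex="${1^^}"
  if [[ ! $hex =~ ^[0-9A-F]{16}$ ]]; then
    echo "bad int64 field: $1" >&2
    return 1
  fi
  local top=$(( 16#${hex:0:1} ))
  if (( top < 8 )); then
    echo "negative values are not handled: $1" >&2
    return 1
  fi
  # Clear the flipped sign bit without overflowing bash's signed 64-bit math:
  # (top nibble - 8) << 60, plus the remaining 15 hex digits.
  echo $(( ((top - 8) << 60) + 16#${hex:1} ))
}

decode_tidb_record_key() {
  local key="${1^^}"
  if [[ ${#key} -ne 38 || ${key:0:2} != "74" || ${key:18:4} != "5F72" ]]; then
    echo "not an integer-handle row key: $1" >&2
    return 1
  fi
  local table_id handle
  table_id="$(decode_comparable_int64 "${key:2:16}")" || return 1
  handle="$(decode_comparable_int64 "${key:22:16}")" || return 1
  printf 'table_id=%s handle=%s\n' "$table_id" "$handle"
}
```

For the primary_lock keys above, the table ID field ends in 0C33, which is table 3123. That ID can be mapped to a name with `SELECT TABLE_SCHEMA, TABLE_NAME FROM INFORMATION_SCHEMA.TABLES WHERE TIDB_TABLE_ID = 3123;`.

The key in the BR error is harder. It is printed as raw bytes, with unprintable bytes replaced by \ufffd, so it can't be decoded reliably from this log. It starts with t and contains _r, so it looks like a row key as well. Whether it belongs to the same table can't be confirmed this way.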

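A similar thread, "BR backup fails with ErrBackupNoLeader]backup no leader" (post #6, by zzzzzz), gives a hint. But I don't dare to try things casually in production, haha. If something goes wrong, I won't even get the chance to run away.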

As a newcomer, this looks really complicated.

Are you backing up to AWS?

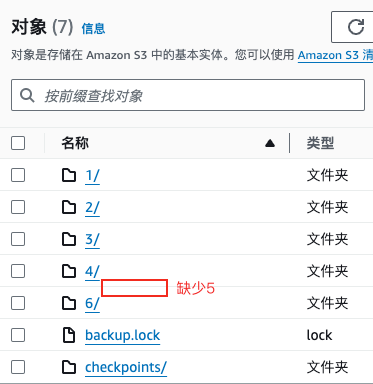

Turns out someone else has reported a similar error:

br backup full error - TiDB Q&A community (asktug.com)

Also, I see your backup error contains this:

[err_code=KV:Unknown] [err=“Io(Custom { kind: Other, error: “failed to put object rusoto error Error during dispatch: error trying to connect: tcp connect error: Connection timed out (os error 110)” })”]

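Yes, I read that thread. In my case, the backup had already uploaded part of the data to AWS, so it can't be a permissions problem. Halfway through the backup, the data just stops going up. I also created another bucket with open permissions to verify, and it's not the same problem as his.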

This is where it gets contradictory. Checking with the commands 小龙虾 suggested reports "All regions are healthy.", but the backup still fails with:

[2024/09/05 17:43:32.124 +08:00] [ERROR] [main.go:60] [“br failed”] [error=“can not find a valid leader for key t\ufffd\u0000\u0000\u0000\u0000\u0000\u000b\ufffd5_r\u0003\ufffd\u0000\u0000\u0000\ufffd\u0000\ufffd\ufffd\ufffd\u0003\ufffd\u0000\u0000\ufffd\u0000\u0000\u0000\u0000\ufffd\u0003\ufffd\u0000\ufffd\u0000\u0000\u0000\u0000\u0001\ufffd\u0000\u0000\ufffd: [BR:Backup:ErrBackupNoLeader]backup no leader”] [errorVerbose=“[BR:Backup:ErrBackupNoLeader]backup no leader\ncan not find a valid leader for key t\ufffd\u0000\u0000\u0000\u0000\u0000\u000b\ufffd5_r\u0003\ufffd\u0000\u0000\u0000\ufffd\u0000\ufffd\ufffd\ufffd\u0003\ufffd\u0000\u0000\ufffd\u0000\u0000\u0000\u0000\ufffd\u0003\ufffd\u0000\ufffd\u0000\u0000\u0000\u0000\u0001\ufffd\u0000\u0000\ufffd\ngithub.com/pingcap/tidb/br/pkg/backup.(*Client).findTargetPeer\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/br/br/pkg/backup/client.go:1025\ngithub.com/pingcap/tidb/br/pkg/backup.(*Client).handleFineGrained\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/br/br/pkg/backup/client.go:1228\ngithub.com/pingcap/tidb/br/pkg/backup.(*Client).fineGrainedBackup.func2\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/br/br/pkg/backup/client.go:1080\nruntime.goexit\n\t/usr/local/go/src/runtime/asm_amd64.s:1598”] [stack=“main.main\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/br/br/cmd/br/main.go:60\nruntime.main\n\t/usr/local/go/src/runtime/proc.go:250”]

Use pd-ctl again to check whether any Regions have no leader. Also look at the monitoring to see whether leaders drop during the backup.
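Below is a sketch of those pd-ctl checks. It assumes pd-ctl is run through `tiup ctl` with a version matching the cluster. `pdctl`, `check_no_leader`, `check_peer_health`, `CLUSTER_VERSION` and `DRY_RUN` are hypothetical names for this example. The `--jq` filter lists Regions that PD currently reports without a leader.

```bash
#!/usr/bin/env bash
# Hypothetical wrapper around pd-ctl via tiup. CLUSTER_VERSION and PDIP are
# placeholders for your own values; DRY_RUN=1 only prints the command.
pdctl() {
  if [[ -z ${CLUSTER_VERSION:-} || -z ${PDIP:-} ]]; then
    echo "set CLUSTER_VERSION (e.g. 7.5.0) and PDIP first" >&2
    return 1
  fi
  local cmd=(tiup "ctl:v${CLUSTER_VERSION}" pd -u "http://${PDIP}:2379" "$@")
  if [[ ${DRY_RUN:-0} == 1 ]]; then
    printf '%s\n' "${cmd[*]}"
  else
    "${cmd[@]}"
  fi
}

# List Regions with no leader, plus the stores holding their peers.
# pd-ctl's --jq option needs jq installed on this machine.
check_no_leader() {
  pdctl region --jq='.regions[] | select(.leader.store_id == null) | {id: .id, peer_stores: [.peers[].store_id]}'
}

# Other Region health views worth running while the backup is stuck.
check_peer_health() {
  pdctl region check down-peer
  pdctl region check pending-peer
  pdctl region check miss-peer
}
```

For example, run `check_no_leader` while the backup is stuck. Run `pdctl region 11938184` for the Region the TiKV log reports as missing. If PD no longer knows that Region, it has probably been merged or replaced, which is a common, transient cause of region_not_found retries.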

Have you confirmed that the AWS S3 bucket permissions and network connectivity are fine?
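Here is one way to check the network side from TiKV. The timeout in the earlier log (failed to put object ... Connection timed out (os error 110)) comes from TiKV. With BR, each TiKV node uploads its own SST files to S3, so every TiKV host needs to reach the endpoint, not just the machine running br. This is a minimal sketch that assumes the standard `https://s3.<region>.amazonaws.com` endpoint. The function names are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical connectivity probe for the S3 regional endpoint. Run it on
# every TiKV host: with BR, TiKV nodes upload the SST files themselves.
# Assumes default AWS endpoint naming; adapt it if you use --s3.endpoint.
s3_endpoint() {
  printf 'https://s3.%s.amazonaws.com\n' "$1"
}

check_s3_reachable() {
  local region="$1" url
  if [[ -z $region ]]; then
    echo "usage: check_s3_reachable <region>" >&2
    return 1
  fi
  url="$(s3_endpoint "$region")"
  # Without --fail, curl exits 0 on any HTTP reply (even 403), so a failure
  # here means DNS/TCP/TLS trouble, such as a connection timeout.
  if curl -sS -o /dev/null --connect-timeout 5 --max-time 20 "$url"; then
    echo "reachable: $url"
  else
    echo "NOT reachable: $url" >&2
    return 1
  fi
}
```

The backup stalls halfway instead of failing at once. So it may be worth running `check_s3_reachable "${region}"` on each TiKV host while a backup is in progress, not only beforehand.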

What state is the cluster in right now? Could it be rebalancing, either manually or on its own?

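Try backing up a small table first to see whether S3 is the problem. This feels like an S3 connectivity issue to me. Those region errors should just be normal logs caused by locks. It would help if you posted your backup command.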

    tiup br backup db \
        --db "${database}" \
        --pd "${PDIP}:2379" \
        --storage "s3://${Bucket}/${Folder}?access-key=${accessKey}&secret-access-key=${secretAccessKey}" \
        --s3.region "${region}" \
        --send-credentials-to-tikv=true \
        --ratelimit 128 \
        --log-file backuptable.log

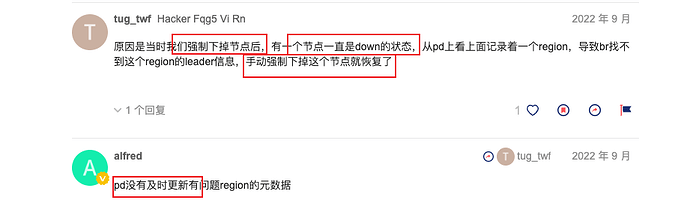

This is a normal backup. It has the 5 directory, and directories 1 to 6 all contain many .sst files.

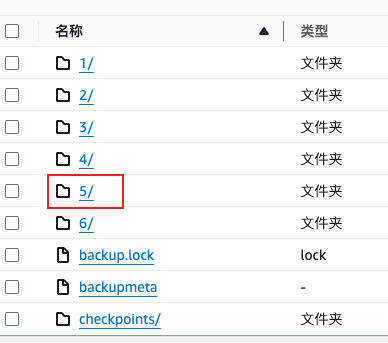

This is the abnormal backup: the 5 directory is missing, the backupmeta file is missing, and

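If backing up a small amount of data works, then S3 is fine. Still, normally there shouldn't be locks either. Try a snapshot backup with --backupts '2022-09-08 13:30:00 +08:00'. Replace the snapshot time with the time you want to back up and see whether it succeeds.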
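Below is a sketch of the suggested test: back up a single table at a fixed snapshot with --backupts, reusing the variables from the backup script posted above. `backup_one_table` and `DRY_RUN` are hypothetical choices for this example. So is the `-<table>` folder suffix, which keeps the test apart from the partial backup already in the bucket.

```bash
#!/usr/bin/env bash
# Sketch: back up one table at a fixed snapshot, reusing the variables of the
# backup script in this thread (database, PDIP, Bucket, Folder, accessKey,
# secretAccessKey, region). The "-<table>" folder suffix is hypothetical.
# DRY_RUN=1 prints the command (including credentials) instead of running it.
backup_one_table() {
  local table="$1" backup_ts="$2"
  if [[ -z $table || -z $backup_ts ]]; then
    echo "usage: backup_one_table <table> '<YYYY-MM-DD HH:MM:SS +08:00>'" >&2
    return 1
  fi
  local cmd=(tiup br backup table
    --db "${database}" --table "${table}"
    --pd "${PDIP}:2379"
    --storage "s3://${Bucket}/${Folder}-${table}?access-key=${accessKey}&secret-access-key=${secretAccessKey}"
    --s3.region "${region}"
    --send-credentials-to-tikv=true
    --backupts "${backup_ts}"
    --ratelimit 128
    --log-file "backup_${table}.log")
  if [[ ${DRY_RUN:-0} == 1 ]]; then
    printf '%s\n' "${cmd[*]}"
  else
    "${cmd[@]}"
  fi
}
```

For example, run `backup_one_table some_table '2024-09-05 17:00:00 +08:00'`, where some_table is a placeholder. The snapshot time should be newer than the GC safe point. With the default tikv_gc_life_time of 10m, that is roughly the last 10 minutes, so an older timestamp is likely to be rejected.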

Hold on, I'll try backing up a single table.