【 TiDB 使用环境】生产环境 /测试/ Poc

【 TiDB 版本】

【复现路径】做过哪些操作出现的问题

【遇到的问题:问题现象及影响】

【资源配置】进入到 TiDB Dashboard -集群信息 (Cluster Info) -主机(Hosts) 截图此页面

【附件:截图/日志/监控】

使用 tiup bench 创建测试数据,没有等创建完成就执行了 ctrl +c,然后在数据库drop了表(此处可能有问题,先执行了 ctrl+c,然后drop 表,ctrl+c 那边可能还没结束)。

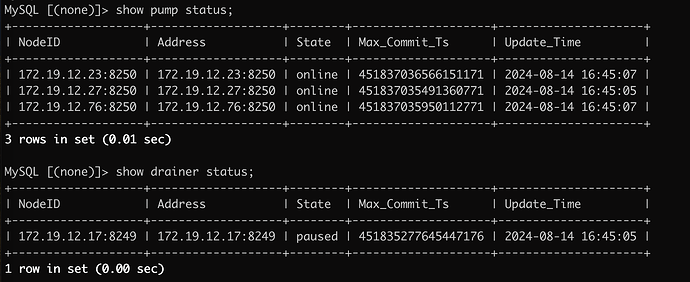

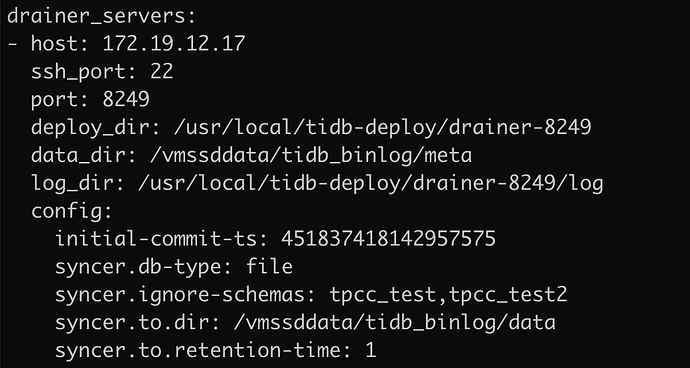

drainer 报错信息:

[2024/08/14 16:35:04.713 +09:00] [INFO] [base_client.go:398] ["[pd] cannot update member from this address"] [address=http://172.19.12.23:2379] [error="[PD:client:ErrClientGetMember]error:rpc error: code = Canceled desc = context canceled target:172.19.12.23:2379 status:READY: error:rpc error: code = Canceled desc = context canceled target:172.19.12.23:2379 status:READY"]

[2024/08/14 16:35:04.713 +09:00] [ERROR] [base_client.go:203] ["[pd] failed to update member"] [error="[PD:client:ErrClientGetMember]error:rpc error: code = Canceled desc = context canceled target:172.19.12.23:2379 status:READY: error:rpc error: code = Canceled desc = context canceled target:172.19.12.23:2379 status:READY"] [errorVerbose="[PD:client:ErrClientGetMember]error:rpc error: code = Canceled desc = context canceled target:172.19.12.23:2379 status:READY: error:rpc error: code = Canceled desc = context canceled target:172.19.12.23:2379 status:READY\ngithub.com/tikv/pd/client.(*pdBaseClient).updateMember\n\t/root/go/pkg/mod/github.com/tikv/pd/client@v0.0.0-20230301094509-c82b237672a0/base_client.go:403\ngithub.com/tikv/pd/client.(*pdBaseClient).memberLoop\n\t/root/go/pkg/mod/github.com/tikv/pd/client@v0.0.0-20230301094509-c82b237672a0/base_client.go:202\nruntime.goexit\n\t/usr/local/go/src/runtime/asm_amd64.s:1650"]

[2024/08/14 16:35:04.714 +09:00] [INFO] [checkpoint.go:69] ["initialize checkpoint"] [type=file] [checkpoint=451835277645447176] [version=3418] [cfg="{\"CheckpointType\":\"file\",\"Db\":null,\"Schema\":\"\",\"Table\":\"\",\"ClusterID\":7399966587537109512,\"InitialCommitTS\":451835277357088772,\"dir\":\"/vmssddata/tidb_binlog/meta/savepoint\"}"]

[2024/08/14 16:35:04.714 +09:00] [INFO] [store.go:76] ["new store"] [path="tikv://172.19.12.23:2379,172.19.12.27:2379,172.19.12.76:2379?disableGC=true"]

[2024/08/14 16:35:04.714 +09:00] [INFO] [client.go:289] ["[pd] create pd client with endpoints"] [pd-address="[172.19.12.23:2379,172.19.12.27:2379,172.19.12.76:2379]"]

[2024/08/14 16:35:04.723 +09:00] [INFO] [base_client.go:487] ["[pd] switch leader"] [new-leader=http://172.19.12.27:2379] [old-leader=]

[2024/08/14 16:35:04.723 +09:00] [INFO] [base_client.go:164] ["[pd] init cluster id"] [cluster-id=7399966587537109512]

[2024/08/14 16:35:04.723 +09:00] [INFO] [tso_request_dispatcher.go:287] ["[pd/tso] tso dispatcher created"] [dc-location=global]

[2024/08/14 16:35:04.724 +09:00] [INFO] [tikv_driver.go:191] ["using API V1."]

[2024/08/14 16:35:04.726 +09:00] [INFO] [store.go:82] ["new store with retry success"]

[2024/08/14 16:35:06.015 +09:00] [INFO] [binlogger.go:93] ["open binlogger"] [directory=/vmssddata/tidb_binlog/data]

[2024/08/14 16:35:06.016 +09:00] [INFO] [binlogger.go:140] ["open and lock binlog file"] [name=/vmssddata/tidb_binlog/data/binlog-0000000000000000-20240813175139]

[2024/08/14 16:35:06.016 +09:00] [INFO] [tso_request_dispatcher.go:368] ["[pd/tso] stop fetching the pending tso requests due to context canceled"] [dc-location=global]

[2024/08/14 16:35:06.016 +09:00] [INFO] [tso_request_dispatcher.go:305] ["[pd/tso] exit tso dispatcher"] [dc-location=global]

[2024/08/14 16:35:06.017 +09:00] [INFO] [store.go:76] ["new store"] [path="tikv://172.19.12.23:2379,172.19.12.27:2379,172.19.12.76:2379?disableGC=true"]

[2024/08/14 16:35:06.017 +09:00] [INFO] [client.go:289] ["[pd] create pd client with endpoints"] [pd-address="[172.19.12.23:2379,172.19.12.27:2379,172.19.12.76:2379]"]

[2024/08/14 16:35:06.026 +09:00] [INFO] [base_client.go:487] ["[pd] switch leader"] [new-leader=http://172.19.12.27:2379] [old-leader=]

[2024/08/14 16:35:06.026 +09:00] [INFO] [base_client.go:164] ["[pd] init cluster id"] [cluster-id=7399966587537109512]

[2024/08/14 16:35:06.026 +09:00] [INFO] [tso_request_dispatcher.go:287] ["[pd/tso] tso dispatcher created"] [dc-location=global]

[2024/08/14 16:35:06.027 +09:00] [INFO] [tikv_driver.go:191] ["using API V1."]

[2024/08/14 16:35:06.027 +09:00] [INFO] [store.go:82] ["new store with retry success"]

[2024/08/14 16:35:06.033 +09:00] [INFO] [server.go:270] ["register success"] ["drainer node id"=172.19.12.17:8249]

[2024/08/14 16:35:06.034 +09:00] [INFO] [server.go:349] ["start to server request"] [addr=http://172.19.12.17:8249]

[2024/08/14 16:35:06.035 +09:00] [INFO] [merge.go:222] ["merger add source"] ["source id"=172.19.12.23:8250]

[2024/08/14 16:35:06.035 +09:00] [INFO] [merge.go:222] ["merger add source"] ["source id"=172.19.12.27:8250]

[2024/08/14 16:35:06.035 +09:00] [INFO] [merge.go:222] ["merger add source"] ["source id"=172.19.12.76:8250]

[2024/08/14 16:35:06.035 +09:00] [INFO] [pump.go:140] ["pump create pull binlogs client"] [id=172.19.12.23:8250]

[2024/08/14 16:35:06.035 +09:00] [INFO] [pump.go:140] ["pump create pull binlogs client"] [id=172.19.12.76:8250]

[2024/08/14 16:35:06.035 +09:00] [INFO] [pump.go:140] ["pump create pull binlogs client"] [id=172.19.12.27:8250]

[2024/08/14 16:35:06.040 +09:00] [INFO] [schema.go:316] ["skip unsupported DDL job"] [job="ID:4138, Type:create resource group, State:synced, SchemaState:public, SchemaID:4137, TableID:0, RowCount:0, ArgLen:0, start time: 2024-08-14 14:47:17.247 +0900 JST, Err:<nil>, ErrCount:0, SnapshotVersion:0"]

[2024/08/14 16:35:06.041 +09:00] [INFO] [schema.go:316] ["skip unsupported DDL job"] [job="ID:4140, Type:create resource group, State:synced, SchemaState:public, SchemaID:4139, TableID:0, RowCount:0, ArgLen:0, start time: 2024-08-14 14:47:25.097 +0900 JST, Err:<nil>, ErrCount:0, SnapshotVersion:0"]

[2024/08/14 16:35:07.030 +09:00] [INFO] [pb.go:62] ["Binlog GC loop stopped"]

[2024/08/14 16:35:07.030 +09:00] [INFO] [syncer.go:271] ["handleSuccess quit"]

[2024/08/14 16:35:07.030 +09:00] [ERROR] [server.go:311] ["syncer exited abnormal"] [error="skipDMLEvent failed: not found table id: 4159"] [errorVerbose="not found table id: 4159\ngithub.com/pingcap/tidb-binlog/drainer.skipDMLEvent\n\t/workspace/source/tidb-binlog/drainer/syncer.go:601\ngithub.com/pingcap/tidb-binlog/drainer.(*Syncer).run\n\t/workspace/source/tidb-binlog/drainer/syncer.go:412\ngithub.com/pingcap/tidb-binlog/drainer.(*Syncer).Start\n\t/workspace/source/tidb-binlog/drainer/syncer.go:152\ngithub.com/pingcap/tidb-binlog/drainer.(*Server).Start.func5\n\t/workspace/source/tidb-binlog/drainer/server.go:310\ngithub.com/pingcap/tidb-binlog/drainer.(*taskGroup).start.func1\n\t/workspace/source/tidb-binlog/drainer/util.go:82\nruntime.goexit\n\t/usr/local/go/src/runtime/asm_amd64.s:1650\nskipDMLEvent failed"]

[2024/08/14 16:35:07.030 +09:00] [INFO] [util.go:79] [Exit] [name=syncer]

tidb 日志:

[2024/08/14 14:52:00.865 +09:00] [INFO] [ddl.go:1212] ["start DDL job"] [category=ddl] [job="ID:4160, Type:create table, State:queueing, SchemaState:none, SchemaID:4141, TableID:4159, RowCount:0, ArgLen:2, start time: 2024-08-14 14:52:00.847 +0900 JST, Err:<nil>, ErrCount:0, SnapshotVersion:0, LocalMode: false"] [query="CREATE TABLE IF NOT EXISTS stock (\n\ts_i_id INT NOT NULL,\n\ts_w_id INT NOT NULL,\n\ts_quantity INT,\n\ts_dist_01 CHAR(24), \n\ts_dist_02 CHAR(24),\n\ts_dist_03 CHAR(24),\n\ts_dist_04 CHAR(24), \n\ts_dist_05 CHAR(24), \n\ts_dist_06 CHAR(24), \n\ts_dist_07 CHAR(24), \n\ts_dist_08 CHAR(24), \n\ts_dist_09 CHAR(24), \n\ts_dist_10 CHAR(24), \n\ts_ytd INT, \n\ts_order_cnt INT, \n\ts_remote_cnt INT,\n\ts_data VARCHAR(50),\n\tPRIMARY KEY(s_w_id, s_i_id) /*T![clustered_index] CLUSTERED */\n)"]

[2024/08/14 14:52:00.930 +09:00] [INFO] [ddl_worker.go:747] ["finish DDL job"] [worker="worker 5, tp general"] [category=ddl] [jobID=4160] [conn=2281701504] [job="ID:4160, Type:create table, State:synced, SchemaState:public, SchemaID:4141, TableID:4159, RowCount:0, ArgLen:0, start time: 2024-08-14 14:52:00.847 +0900 JST, Err:<nil>, ErrCount:0, SnapshotVersion:0, LocalMode: false"]

[2024/08/14 14:53:16.932 +09:00] [INFO] [ddl.go:1212] ["start DDL job"] [category=ddl] [job="ID:4163, Type:drop schema, State:queueing, SchemaState:public, SchemaID:4141, TableID:0, RowCount:0, ArgLen:1, start time: 2024-08-14 14:53:16.897 +0900 JST, Err:<nil>, ErrCount:0, SnapshotVersion:0, LocalMode: false"] [query="drop database tpcc_test"]

[2024/08/14 14:53:19.517 +09:00] [WARN] [session.go:834] ["commit failed"] [conn=2281701576] [session_alias=] ["finished txn"="Txn{state=invalid}"] [error="[schema:1146]Table 'tpcc_test.stock' doesn't exist"]

[2024/08/14 14:53:32.401 +09:00] [WARN] [session.go:808] [sql] [conn=2281701614] [session_alias=] [label=general] [error="[kv:8022]Error: KV error safe to retry Error(Txn(Error(Mvcc(Error(TxnLockNotFound { start_ts: TimeStamp(451835267933011977), commit_ts: TimeStamp(451835275417223174), key: [116, 128, 0, 0, 0, 0, 0, 16, 63, 95, 114, 3, 128, 0, 0, 0, 0, 0, 0, 10, 3, 128, 0, 0, 0, 0, 0, 4, 1] }))))) {tableID=4159, handle={10, 1025}} [try again later]"] [txn="Txn{state=invalid}"]

[2024/08/14 14:53:32.401 +09:00] [WARN] [session.go:834] ["commit failed"] [conn=2281701614] [session_alias=] ["finished txn"="Txn{state=invalid}"] [error="[schema:1146]Table 'tpcc_test.stock' doesn't exist"]

[2024/08/14 15:05:13.656 +09:00] [INFO] [gc.go:284] ["remove stats in GC due to dropped table"] [table_id=4159]

像这种情况如何处理?如何跳过错误?

有了解的大神给支支招。谢谢。