Try deleting this and running it again?

That's not the problem. The problem here is that br doesn't use the AK/SK from the environment variables.

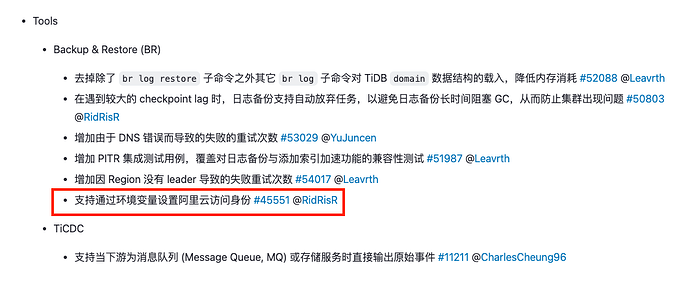

Root cause: br backup to aliyum OSS not support ak/sk as env · Issue #45551 · pingcap/tidb · GitHub. Either wait for a new release with the fix, or work around it with TiUP BR + AK/SK in the prefix, as shown below.

I ran a quick test:

v8.1.0 TiUP BR + AK/SK in ENV: fails with Alibaba RAM Provider Retrieve

br: fix backup to aliyum OSS not support ak/sk as env (#54150) by ti-chi-bot · Pull Request #54994 · pingcap/tidb · GitHub is not merged yet; it will probably land in v8.1.1

br: fix backup to aliyum OSS not support ak/sk as env (#54150) by ti-chi-bot · Pull Request #54579 · pingcap/tidb · GitHub was merged just last week; it will probably land in v7.5.3

v8.1.0 TiUP BR + AK/SK in the prefix: succeeds, with something like

--storage "s3://brtest20240730/tidb?access-key=…&secret-access-key=…"

v8.1.0 BR + v1.6.0 TiDB-Operator: fails with Alibaba RAM Provider Retrieve, for the same reason (the secret goes through the ENV code path)

v8.1.0 BR + v1.6.0 TiDB-Operator + AK/SK in the prefix: fails with Get backup metadata for backup files in s3://… failed

The error comes from tidb-operator/cmd/backup-manager/app/backup/manager.go at v1.6.0 · pingcap/tidb-operator · GitHub; tidb-backup-manager doesn't handle this case.

Related issue: How to use Backup CR to backup tidb to aliyun OSS? · Issue #5611 · pingcap/tidb-operator · GitHub. There are no plans to improve this for now.

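I rebuilt br from the release-7.5 branch, packaged it into a Docker image, and pushed it to our private registry. After setting .spec.toolImage in the Backup resource to that br image, backup to OSS works normally.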

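The problem here is that br doesn't use the AK/SK from the environment variables.

That's indeed the case. I reported this bug a while ago, and it still hasn't been fixed...

As shown in the screenshot, reading the AK/SK from the two environment variables AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY used to be supported, but 7.5.1 seems to have dropped that? [image]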

prefix: "tidb-backup"

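Outside of TiDB on K8s, you can work around this bug by writing the AK/SK directly into the URL, as in s3://mysql-dts-migrate/import-sort-dir?access-key=${KEY}&secret-access-key=${SECRET}. When deploying through a deployment, the configuration parameters appear to be split into separate fields, so it may take a few attempts.

Following the original logic, though, the keys would be appended after the prefix, i.e.: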

prefix: "tidb-backup?access-key=${KEY}&secret-access-key=${SECRET}"
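If the prefix field does carry the keys like this, whatever builds the storage URL has to split the query part back off the path. The hypothetical Go helper below (splitPrefix is an illustrative name, not a tidb-backup-manager function) shows what that split involves, using strings.Cut and url.ParseQuery. Note that ParseQuery decodes a raw + as a space, so a secret containing + should be written as %2B.

```go
package main

import (
	"fmt"
	"net/url"
	"strings"
)

// splitPrefix (hypothetical helper) separates a prefix such as
// "tidb-backup?access-key=K&secret-access-key=S" into the bare path prefix
// and the credentials carried in its query part.
func splitPrefix(prefix string) (string, string, string, error) {
	path, rawQuery, found := strings.Cut(prefix, "?")
	if !found {
		// No query part: plain prefix, no embedded credentials.
		return path, "", "", nil
	}
	q, err := url.ParseQuery(rawQuery)
	if err != nil {
		return "", "", "", err
	}
	return path, q.Get("access-key"), q.Get("secret-access-key"), nil
}

func main() {
	// Placeholder values; a real secret containing '+' must be escaped as %2B.
	path, ak, sk, err := splitPrefix("tidb-backup?access-key=EXAMPLE_AK&secret-access-key=EXAMPLE_SK")
	if err != nil {
		fmt.Println("bad prefix:", err)
		return
	}
	fmt.Printf("prefix=%q ak set=%t sk set=%t\n", path, ak != "", sk != "")
}
```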

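I ran a quick test:

v8.1.0 TiUP BR + AK/SK in ENV: fails with Alibaba RAM Provider Retrieve

br: fix backup to aliyum OSS not support ak/sk as env (#54150) by ti-chi-bot · Pull Request #54994 · pingcap/tidb · GitHub is not merged yet; it will probably land in v8.1.1

br: fix backup to aliyum OSS not support ak/sk as env (#54150) by ti-chi-bot · Pull Request #54579 · pingcap/tidb · GitHub was merged just last week; it will probably land in v7.5.3

As the poster above mentioned, the PR submitted last week fixes the issue where the AK/SK in the environment variables is not used when the provider is alibaba. I also pulled it, built it myself, and tested it.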

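Quick question: if I want to set breakpoints and debug the code in GoLand, how do I do that?

Just noticed it has already been fixed. 7.5.3 is expected around 08-05, though, so you could wait and then upgrade the TiDB cluster directly; 7.5.0 still has some bugs around Placement Rules.

If you don't want to upgrade the cluster: BR doesn't depend heavily on the TiDB cluster version, so you could upgrade only BR. I'm just not sure whether TiDB on K8s supports upgrading only some components.

When running restore from the backup in OSS, the AK/SK is not picked up either, and it fails with Alibaba RAM Provider Retrieve: Get "http://100.100.100.200/latest/meta-data/ram/security-credentials/": dial tcp 100.100.100.200:80: i/o timeout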

Running it with the br image built locally from source:

    ---
    kind: Restore
    apiVersion: pingcap.com/v1alpha1
    metadata:
      name: restore
      namespace: tidb-backup
    spec:
      toolImage: harbor.swartz.cn/library/tidb-br:8.1.0
      br:
        cluster: tidb-test
        clusterNamespace: tidb-backup
        sendCredToTikv: true
      s3:
        provider: "alibaba"
        secretName: "tidb-s3-secret"
        region: "cn-shanghai"
        endpoint: "https://oss-cn-shanghai.aliyuncs.com"
        bucket: "52-ur04b61fp"
        prefix: "tidb-backup"

Running br restore directly on the server (command below) fails right away with:

Error: context deadline exceeded

    br restore full \
      --pd "${PDIP}:2379" \
      --storage "s3://${Bucket}/${Folder}" \
      --s3.region "${region}" \
      --ratelimit 128 \
      --send-credentials-to-tikv=true \
      --log-file restorefull.log

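So right now backup works, but restore doesn't.

restorefull.log

Could you check the full error message in the log file?

shihh:

restorefull.log: could you check the full error message in the log file?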

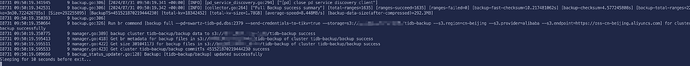

[2024/08/01 13:40:01.853 +08:00] [INFO] [info.go:49] ["Welcome to Backup & Restore (BR)"] [release-version=v7.5.2-71-gd2304c82f7-dirty] [git-hash=d2304c82f7c4ffd0d4e4346c30f66dd593938ce7] [git-branch=release-7.5] [go-version=go1.21.6] [utc-build-time="2024-08-01 02:14:13"] [race-enabled=false]

[2024/08/01 13:40:01.854 +08:00] [INFO] [common.go:755] [arguments] [__command="br restore full"] [log-file=restorefull.log] [pd="[tidb-test-pd:2379]"] [s3.endpoint=https://oss-cn-beijing.aliyuncs.com] [s3.provider=alibaba] [s3.region=cn-beijing] [send-credentials-to-tikv=true] [storage=s3://52-ur04b61fp/tidb-backup]

[2024/08/01 13:40:01.856 +08:00] [INFO] [common.go:180] ["trying to connect to etcd"] [addr="[tidb-test-pd:2379]"]

[2024/08/01 13:40:06.859 +08:00] [ERROR] [restore.go:64] ["failed to restore"] [error="context deadline exceeded"] [stack="main.runRestoreCommand\n\t/Users/shihh/code/opensource/tidb/br/cmd/br/restore.go:64\nmain.newFullRestoreCommand.func1\n\t/Users/shihh/code/opensource/tidb/br/cmd/br/restore.go:169\ngithub.com/spf13/cobra.(*Command).execute\n\t/Users/shihh/go/pkg/mod/github.com/spf13/cobra@v1.7.0/command.go:940\ngithub.com/spf13/cobra.(*Command).ExecuteC\n\t/Users/shihh/go/pkg/mod/github.com/spf13/cobra@v1.7.0/command.go:1068\ngithub.com/spf13/cobra.(*Command).Execute\n\t/Users/shihh/go/pkg/mod/github.com/spf13/cobra@v1.7.0/command.go:992\nmain.main\n\t/Users/shihh/code/opensource/tidb/br/cmd/br/main.go:58\nruntime.main\n\t/usr/local/go/src/runtime/proc.go:267"]

[2024/08/01 13:40:06.861 +08:00] [ERROR] [main.go:60] ["br failed"] [error="context deadline exceeded"] [errorVerbose="context deadline exceeded\ngithub.com/pingcap/errors.AddStack\n\t/Users/shihh/go/pkg/mod/github.com/pingcap/errors@v0.11.5-0.20240318064555-6bd07397691f/errors.go:178\ngithub.com/pingcap/errors.Trace\n\t/Users/shihh/go/pkg/mod/github.com/pingcap/errors@v0.11.5-0.20240318064555-6bd07397691f/juju_adaptor.go:15\nmain.runRestoreCommand\n\t/Users/shihh/code/opensource/tidb/br/cmd/br/restore.go:66\nmain.newFullRestoreCommand.func1\n\t/Users/shihh/code/opensource/tidb/br/cmd/br/restore.go:169\ngithub.com/spf13/cobra.(*Command).execute\n\t/Users/shihh/go/pkg/mod/github.com/spf13/cobra@v1.7.0/command.go:940\ngithub.com/spf13/cobra.(*Command).ExecuteC\n\t/Users/shihh/go/pkg/mod/github.com/spf13/cobra@v1.7.0/command.go:1068\ngithub.com/spf13/cobra.(*Command).Execute\n\t/Users/shihh/go/pkg/mod/github.com/spf13/cobra@v1.7.0/command.go:992\nmain.main\n\t/Users/shihh/code/opensource/tidb/br/cmd/br/main.go:58\nruntime.main\n\t/usr/local/go/src/runtime/proc.go:267\nruntime.goexit\n\t/usr/local/go/src/runtime/asm_arm64.s:1197"] [stack="main.main\n\t/Users/shihh/code/opensource/tidb/br/cmd/br/main.go:60\nruntime.main\n\t/usr/local/go/src/runtime/proc.go:267"]

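For a self-hosted data center, you should run MinIO instead of using OSS.

Our self-hosted data center isn't that stable, and we're worried MinIO would go down.

7.5.3 has been released, and it looks like this is fixed. Give it a try.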
