May 19 09:50:02 host5024 systemd: Started Session 18470 of user root.

May 19 10:00:01 host5024 systemd: Started Session 18471 of user root.

May 19 10:01:01 host5024 systemd: Started Session 18472 of user root.

May 19 10:10:01 host5024 systemd: Started Session 18473 of user root.

May 19 10:20:01 host5024 systemd: Started Session 18474 of user root.

May 19 10:30:01 host5024 systemd: Started Session 18475 of user root.

May 19 10:40:01 host5024 systemd: Started Session 18476 of user root.

May 19 10:50:01 host5024 systemd: Started Session 18477 of user root.

May 19 11:00:01 host5024 systemd: Started Session 18478 of user root.

May 19 11:01:01 host5024 systemd: Started Session 18479 of user root.

May 19 11:10:01 host5024 systemd: Started Session 18480 of user root.

May 19 11:20:01 host5024 systemd: Started Session 18481 of user root.

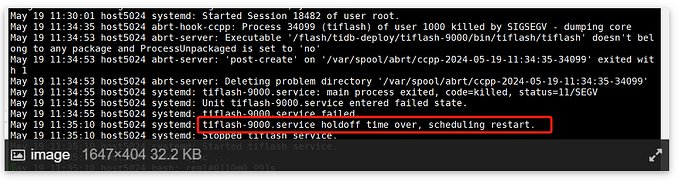

May 19 11:26:40 host5024 kernel: PDLeaderLoop[23438]: segfault at 30 ip 00000000091555c8 sp 00007f0b91dadeb0 error 4

May 19 11:26:40 host5024 abrt-hook-ccpp: Process 23325 (tiflash) of user 1000 killed by SIGSEGV - dumping core

Could someone tell me which command to use to bring TiFlash back up?

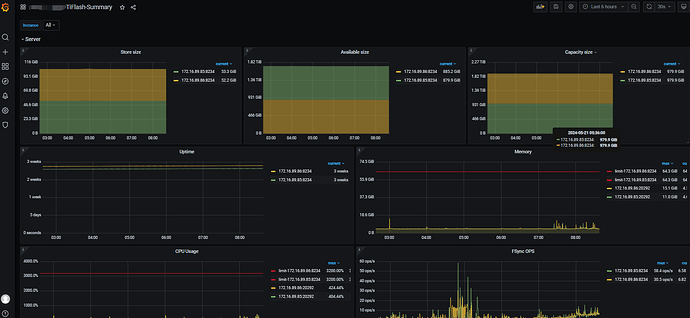

Keep the TiFlash instance on 55.25, decommission (with tiup) the one on 55.24 that is co-located with TiKV, and change the TiFlash replica count to 1.
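In TiDB the TiFlash replica count is set per table with ALTER TABLE ... SET TIFLASH REPLICA n, and it should not exceed the number of TiFlash nodes that will remain, so it has to be lowered to 1 before 55.24 is scaled in. The sketch below generates those statements. It assumes you export the tables that currently have TiFlash replicas (for example from information_schema.tiflash_replica) as tab-separated schema/table lines. The function name gen_replica_sql and the <tidb-host> placeholder are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read "schema<TAB>table" lines from stdin and print one
# TiDB statement per table that sets its TiFlash replica count.
# Usage: gen_replica_sql <count> < tables.tsv
gen_replica_sql() {
  local count="$1"
  local schema table
  # "|| [ -n ... ]" also handles a last line without a trailing newline
  while IFS=$'\t' read -r schema table || [ -n "$schema" ]; do
    [ -z "$schema" ] && continue   # skip blank lines
    printf 'ALTER TABLE `%s`.`%s` SET TIFLASH REPLICA %s;\n' \
      "$schema" "$table" "$count"
  done
}

# Example (assumed host/credentials): list tables with TiFlash replicas and
# write statements that lower each of them to 1 replica.
# mysql -h <tidb-host> -P 4000 -u root -p -N -B \
#   -e "SELECT TABLE_SCHEMA, TABLE_NAME FROM information_schema.tiflash_replica" \
#   | gen_replica_sql 1 > set_replica.sql
```

Review the generated file before running it; the same helper with a count of 0 would remove the replicas entirely.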

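I have another question: on the server reported as having the problem, the TiFlash service shows as running, the process exists, and I can telnet to it. What is going on here?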

Replica count: 1

/flash/tidb-data/tiflash-9000/metadata/db_161619/t_124444.sql: file does not exist

Messages like this also appear in the error log of the healthy TiFlash node.

This TiFlash instance has its own startup script: under scripts there is a run_**.sh script. Run it and see what log output it prints.

tiup cluster start tidb-yunfengkong --node 192.168.55.24:9000

After running /flash/tidb-deploy/tiflash-9000/scripts/run_tiflash.sh, the system log shows:

May 20 17:45:37 host5024 systemd: Stopped tiflash service.

May 20 17:45:37 host5024 systemd: Started tiflash service.

May 20 17:45:38 host5024 bash: sync …

May 20 17:45:38 host5024 bash: real 0m0.216s

May 20 17:45:38 host5024 bash: user 0m0.000s

May 20 17:45:38 host5024 bash: sys 0m0.014s

May 20 17:45:38 host5024 bash: ok

May 20 17:45:38 host5024 bash: arg matches is ArgMatches { args: {“engine-version”: MatchedArg { occurs: 1, indices: [4], vals: [“v7.5.1”] }, “engine-git-hash”: MatchedArg { occurs: 1, indices: [6], vals: [“9970e492dfdf2b4bee487fef4b27fee66131531f”] }, “config”: MatchedArg { occurs: 1, indices: [2], vals: [“/flash/tidb-deploy/tiflash-9000/conf/tiflash-learner.toml”] }, “pd-endpoints”: MatchedArg { occurs: 1, indices: [8, 9, 10], vals: [“192.168.55.22:2379”, “192.168.55.23:2379”, “192.168.55.25:2379”] }, “engine-label”: MatchedArg { occurs: 1, indices: [12], vals: [“tiflash”] }, “engine-addr”: MatchedArg { occurs: 1, indices: [14], vals: [“192.168.55.24:3930”] }}, subcommand: None, usage: Some(“USAGE:\n TiFlash Proxy [FLAGS] [OPTIONS] --engine-git-hash --engine-label --engine-version ”) }

May 20 17:46:58 host5024 abrt-hook-ccpp: Process 39329 (tiflash) of user 1000 killed by SIGSEGV - dumping core

May 20 17:47:13 host5024 abrt-server: Executable ‘/flash/tidb-deploy/tiflash-9000/bin/tiflash/tiflash’ doesn’t belong to any package and ProcessUnpackaged is set to ‘no’

May 20 17:47:13 host5024 abrt-server: ‘post-create’ on ‘/var/spool/abrt/ccpp-2024-05-20-17:46:58-39329’ exited with 1

May 20 17:47:13 host5024 abrt-server: Deleting problem directory ‘/var/spool/abrt/ccpp-2024-05-20-17:46:58-39329’

May 20 17:47:14 host5024 systemd-logind: New session 18753 of user root.

The above keeps repeating.

The attachment contains the output from tiflash_error.log.

error1.log (1.8 MB)

Yes, I tried it; it won't come up.

Tried it; the output was:

Starting instance 192.168.55.24:9000

Error: failed to start tiflash: failed to start: 192.168.55.24 tiflash-9000.service, please check the instance’s log(/flash/tidb-deploy/tiflash-9000/log) for more detail.: timed out waiting for tiflash 192.168.55.24:9000 to be ready after 120s: tiflash store status is ‘Ready’, not fully running yet

Verbose debug logs has been written to /home/tidb/.tiup/logs/tiup-cluster-debug-2024-05-20-17-46-38.log.

tiup-cluster-debug-2024-05-20-17-46-38.log (121.5 KB)

Have you looked at this?

Remove the TiFlash replicas of all tables, take TiFlash offline, then add a new TiFlash node and set up the tables' replicas again.

If nothing else works, scale in TiFlash and add a node again later if you need one.

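Seeing this reminds me of a case I ran into before: the server was short on resources, so the service started slowly. tiup reported an error after 120s, but the service was actually still starting; when I checked the cluster status five minutes later, it was up. That time I only noticed because something else happened to distract me right after starting it, and when I came back I found it had started despite the error. Before that I had kept restarting it over and over, which is why I kept assuming the service was broken and could not start.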
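Since tiup stops waiting after 120s while the instance may still be initializing, it can help to keep polling the instance status for longer before concluding that the start failed. tiup cluster also has a global --wait-timeout option (seconds, default 120) that raises how long tiup itself waits; check tiup cluster --help for your version. The sketch below is a generic polling helper; wait_for, tiflash_up, the 600s deadline and the assumption that the display output marks a running instance with "Up" are all illustrative, not taken from the thread.

```shell
#!/usr/bin/env bash
# Hypothetical helper: run a check command every <interval> seconds until it
# succeeds or <timeout> seconds have passed. Returns 0 on success, 1 on timeout.
# Usage: wait_for <timeout> <interval> <command> [args...]
wait_for() {
  local timeout="$1" interval="$2"
  shift 2
  local deadline=$(( SECONDS + timeout ))   # SECONDS is a bash builtin counter
  until "$@"; do
    if (( SECONDS >= deadline )); then
      return 1
    fi
    sleep "$interval"
  done
  return 0
}

# Assumed check: the row for 192.168.55.24:9000 in `tiup cluster display`
# shows the status "Up".
tiflash_up() {
  tiup cluster display tidb-yunfengkong 2>/dev/null \
    | grep '192.168.55.24:9000' | grep -q ' Up'
}

# Example: allow up to 10 minutes, checking every 15 seconds.
# wait_for 600 15 tiflash_up && echo "TiFlash is up" || echo "still not up"
```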

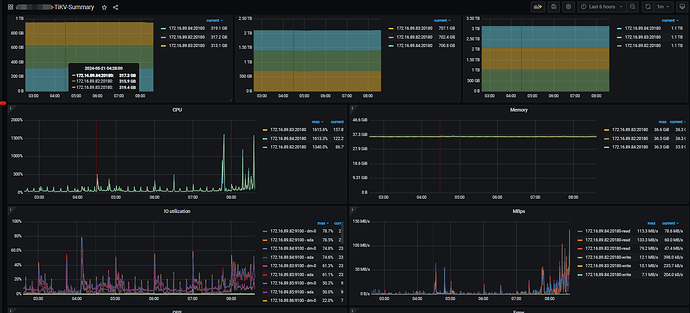

It's probably a resource issue after all.

Yes, that time it was solved by adding resources.

tiup cluster stop tidb-yunfengkong --node 192.168.55.24:9000

First stop TiFlash on 55.24, wait until memory usage on that node drops back to 40%-50%, then try starting TiFlash again.
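The "wait until memory drops" step can be scripted. A minimal sketch, assuming used memory is measured as (MemTotal - MemAvailable) / MemTotal from /proc/meminfo and that 50% (the upper end of the range above) is the threshold; mem_used_pct and wait_mem_below are hypothetical names. The memory check has to run on 192.168.55.24 itself, while the tiup commands run on the machine where tiup is installed.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print used memory as an integer percentage,
# (MemTotal - MemAvailable) * 100 / MemTotal, read from a meminfo-format file.
mem_used_pct() {
  local file="${1:-/proc/meminfo}"
  awk '/^MemTotal:/ {t = $2} /^MemAvailable:/ {a = $2}
       END { if (t > 0) printf "%d\n", (t - a) * 100 / t; else exit 1 }' "$file"
}

# Hypothetical: block until used memory is at or below <max_pct>, checking
# every <interval> seconds. Returns 1 if the meminfo file cannot be parsed.
wait_mem_below() {
  local max_pct="$1" interval="${2:-30}" file="${3:-/proc/meminfo}"
  local pct
  while :; do
    pct="$(mem_used_pct "$file")" || return 1
    (( pct <= max_pct )) && return 0
    echo "memory used: ${pct}%, waiting..."
    sleep "$interval"
  done
}

# Example sequence:
#   (tiup host)  tiup cluster stop tidb-yunfengkong --node 192.168.55.24:9000
#   (55.24)      wait_mem_below 50 30
#   (tiup host)  tiup cluster start tidb-yunfengkong --node 192.168.55.24:9000
```

MemAvailable is used rather than MemFree because it accounts for page cache the kernel can reclaim, so it better reflects how much memory TiFlash could actually get.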

tiup cluster start tidb-yunfengkong --node 192.168.55.24:9000

Column - Troubleshooting high memory usage on TiKV nodes in a TiDB cluster | TiDB Community