It looks like TRACE doesn't support DDL, so I switched to an INSERT INTO statement.

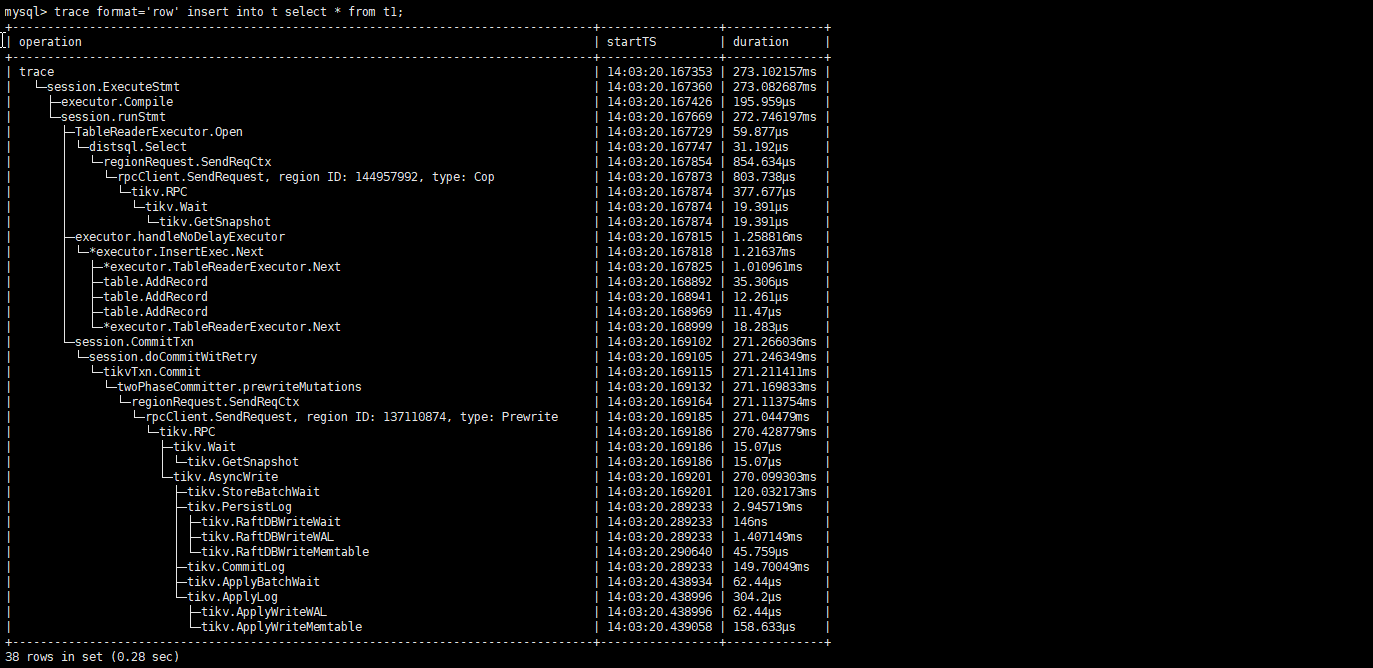

mysql> trace format='row' insert into t select * from t1;

| operation | startTS | duration |
|---|---|---|
| trace | 14:03:20.167353 | 273.102157ms |
| └─session.ExecuteStmt | 14:03:20.167360 | 273.082687ms |
| ├─executor.Compile | 14:03:20.167426 | 195.959µs |
| └─session.runStmt | 14:03:20.167669 | 272.746197ms |
| ├─TableReaderExecutor.Open | 14:03:20.167729 | 59.877µs |
| │ └─distsql.Select | 14:03:20.167747 | 31.192µs |
| │ └─regionRequest.SendReqCtx | 14:03:20.167854 | 854.634µs |
| │ └─rpcClient.SendRequest, region ID: 144957992, type: Cop | 14:03:20.167873 | 803.738µs |
| │ └─tikv.RPC | 14:03:20.167874 | 377.677µs |
| │ └─tikv.Wait | 14:03:20.167874 | 19.391µs |
| │ └─tikv.GetSnapshot | 14:03:20.167874 | 19.391µs |
| ├─executor.handleNoDelayExecutor | 14:03:20.167815 | 1.258816ms |
| │ └─executor.InsertExec.Next | 14:03:20.167818 | 1.21637ms |
| │ ├─executor.TableReaderExecutor.Next | 14:03:20.167825 | 1.010961ms |
| │ ├─table.AddRecord | 14:03:20.168892 | 35.306µs |
| │ ├─table.AddRecord | 14:03:20.168941 | 12.261µs |
| │ ├─table.AddRecord | 14:03:20.168969 | 11.47µs |
| │ └─*executor.TableReaderExecutor.Next | 14:03:20.168999 | 18.283µs |
| └─session.CommitTxn | 14:03:20.169102 | 271.266036ms |
| └─session.doCommitWitRetry | 14:03:20.169105 | 271.246349ms |
| └─tikvTxn.Commit | 14:03:20.169115 | 271.211411ms |
| └─twoPhaseCommitter.prewriteMutations | 14:03:20.169132 | 271.169833ms |
| └─regionRequest.SendReqCtx | 14:03:20.169164 | 271.113754ms |
| └─rpcClient.SendRequest, region ID: 137110874, type: Prewrite | 14:03:20.169185 | 271.04479ms |
| └─tikv.RPC | 14:03:20.169186 | 270.428779ms |
| ├─tikv.Wait | 14:03:20.169186 | 15.07µs |
| │ └─tikv.GetSnapshot | 14:03:20.169186 | 15.07µs |
| └─tikv.AsyncWrite | 14:03:20.169201 | 270.099303ms |
| ├─tikv.StoreBatchWait | 14:03:20.169201 | 120.032173ms |
| ├─tikv.PersistLog | 14:03:20.289233 | 2.945719ms |
| │ ├─tikv.RaftDBWriteWait | 14:03:20.289233 | 146ns |
| │ ├─tikv.RaftDBWriteWAL | 14:03:20.289233 | 1.407149ms |
| │ └─tikv.RaftDBWriteMemtable | 14:03:20.290640 | 45.759µs |
| ├─tikv.CommitLog | 14:03:20.289233 | 149.70049ms |
| ├─tikv.ApplyBatchWait | 14:03:20.438934 | 62.44µs |
| └─tikv.ApplyLog | 14:03:20.438996 | 304.2µs |
| ├─tikv.ApplyWriteWAL | 14:03:20.438996 | 62.44µs |
| └─tikv.ApplyWriteMemtable | 14:03:20.439058 | 158.633µs |

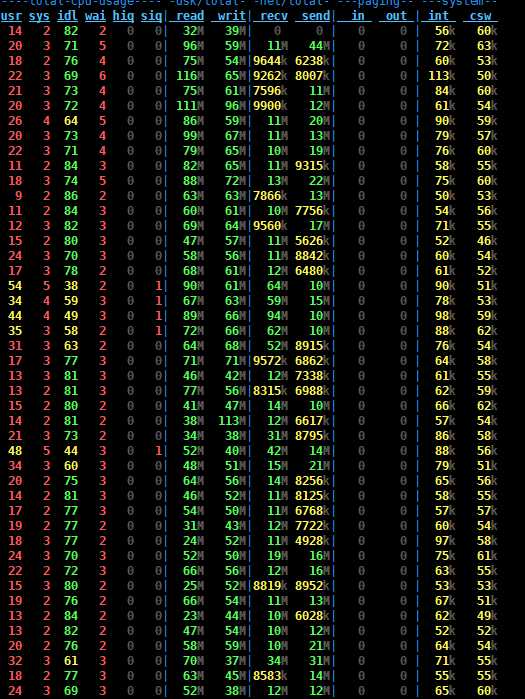

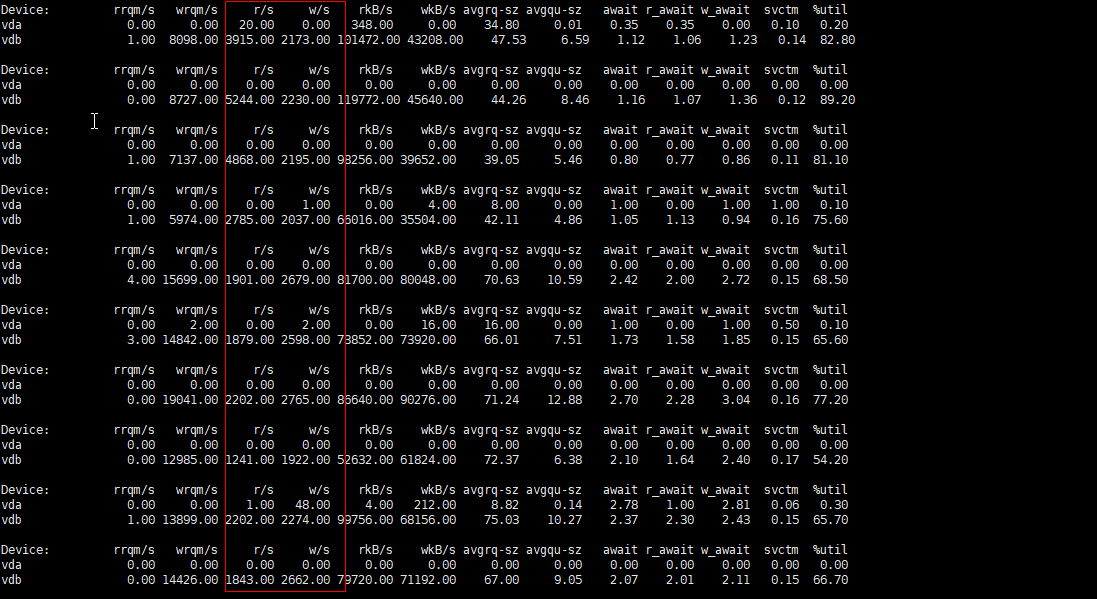

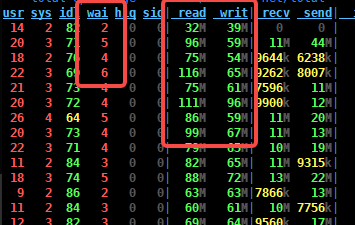

tikv.AsyncWrite is slow. You need to check the network and the disks.

Why are tikv.CommitLog and tikv.StoreBatchWait so high? They are both over 100 ms.
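For reference, the child spans of tikv.AsyncWrite in the trace can be added up to see how much of the 270 ms these two spans cover. This is only a rough check: the durations are copied from the trace, and it assumes tikv.PersistLog runs inside the tikv.CommitLog window, since both start at 14:03:20.289233.

```shell
# Sum the sequential child spans of tikv.AsyncWrite (values in ms, from the trace).
# Assumption: PersistLog overlaps CommitLog (same start timestamp), so it is left out.
async_write_breakdown() {
  awk 'BEGIN {
    sbw = 120.032173        # tikv.StoreBatchWait
    commit = 149.70049      # tikv.CommitLog
    apply_wait = 0.06244    # tikv.ApplyBatchWait
    apply = 0.3042          # tikv.ApplyLog
    total = sbw + commit + apply_wait + apply
    printf "sum of sequential spans: %.6f ms\n", total
    printf "StoreBatchWait + CommitLog share: %.1f%%\n", 100 * (sbw + commit) / total
  }'
}

async_write_breakdown
```

The sum matches the 270.099303 ms reported for tikv.AsyncWrite, so almost all of the write latency sits in those two spans.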

How about restarting the cluster?

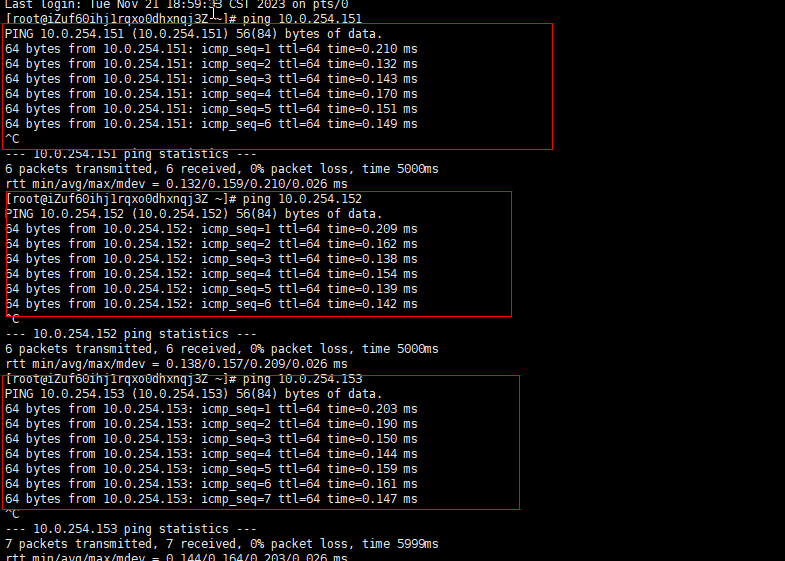

Try pinging the TiKV nodes from the PD node and check the latency.
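A minimal sketch of that check, to be run on the PD node. The helper name `ping_summary` and the address in the usage line are hypothetical; substitute your own TiKV hosts.

```shell
# Print only the packet-loss and round-trip summary lines for one host.
# $1 = host, $2 = number of probes (defaults to 20).
ping_summary() {
  ping -c "${2:-20}" -q "$1" | tail -n 2
}

# Usage (hypothetical address):
#   ping_summary 10.0.1.11
```

On a healthy LAN the average round trip is normally well under a millisecond, so values in the tens of milliseconds would point at the network rather than the disks.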

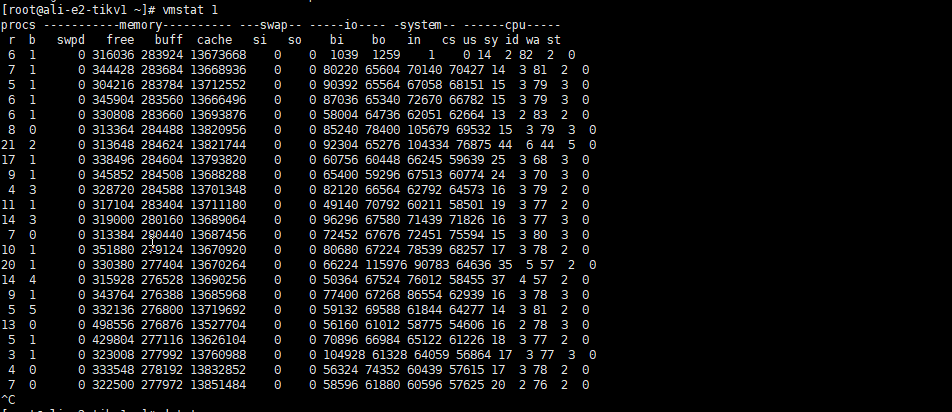

Also, post a screenshot of the vmstat or dstat output.
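If a number is easier to share than a screenshot, the I/O wait column can be averaged from vmstat output. This is a sketch only: `avg_iowait` is a hypothetical helper, and it finds the `wa` column by name in the header line because the column layout differs slightly between vmstat versions.

```shell
# Average the "wa" (I/O wait) column of vmstat output read from stdin.
avg_iowait() {
  awk '
    # Header row with column names: remember which field is "wa".
    $1 == "r" { for (i = 1; i <= NF; i++) if ($i == "wa") col = i; next }
    # Data rows start with a number; accumulate the wa value.
    col && $1 ~ /^[0-9]+$/ { sum += $col; n++ }
    END { if (n) printf "%.1f\n", sum / n }
  '
}

# Usage: sample once per second for 10 seconds on a TiKV node.
#   vmstat 1 10 | avg_iowait
```

Note that the first vmstat sample reports averages since boot, so it pulls the result toward the long-term value.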

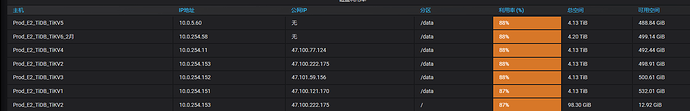

Your storage has hit a bottleneck.

That can't be right, can it?

Use fio to test the file system's I/O with 4k, 32k, and 128k block sizes, and test both sequential reads and sequential writes.

Should I test the system disk or the data disk?

It is usually a disk performance problem.

The data disk (the file system TiKV uses).

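In a test environment, you can stop one TiKV instance and run the test on its disk.

In production, buy a separate disk, mount it, run the test, and release the disk when you are done.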
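The fio runs suggested above (4k, 32k, and 128k; sequential read and write; on the TiKV data disk) could be scripted roughly like this. It is a sketch under assumptions: `TEST_DIR` is a hypothetical directory on the file system under test, and the file size, runtime, I/O engine, and queue depth are illustrative choices, not values from this thread. The script only prints the commands unless `RUN=1` is set.

```shell
#!/usr/bin/env bash
# Hypothetical directory on the TiKV data disk; it must exist before a real run.
TEST_DIR="${TEST_DIR:-/data/fio-test}"

# Print the fio command for one block size and one sequential mode (read or write).
fio_cmd() {
  local bs="$1" mode="$2"
  echo "fio --name=seq-${mode}-${bs} --directory=${TEST_DIR} --rw=${mode} --bs=${bs} --size=1G --direct=1 --ioengine=libaio --iodepth=1 --runtime=60 --time_based --group_reporting"
}

# Dry run by default: print each command. Set RUN=1 to actually execute them.
for bs in 4k 32k 128k; do
  for mode in read write; do
    cmd="$(fio_cmd "$bs" "$mode")"
    echo "$cmd"
    if [ "${RUN:-0}" = "1" ]; then
      $cmd   # unquoted on purpose so the string splits into arguments (TEST_DIR must not contain spaces)
    fi
  done
done
```

Running against a directory rather than the raw device keeps existing data safe. Compare the reported bandwidth and latency with what the disk vendor or cloud provider specifies for that disk type.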