Sure, one moment.

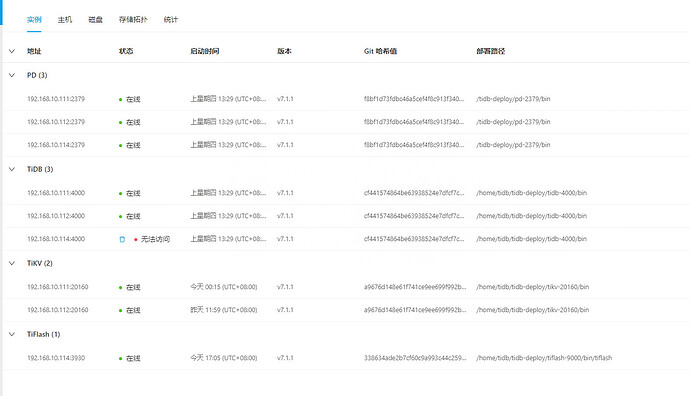

I connect to TiDB over JDBC, and only to a single TiDB node.

TiFlash just crashed outright.

That TiDB node has already been stopped manually.

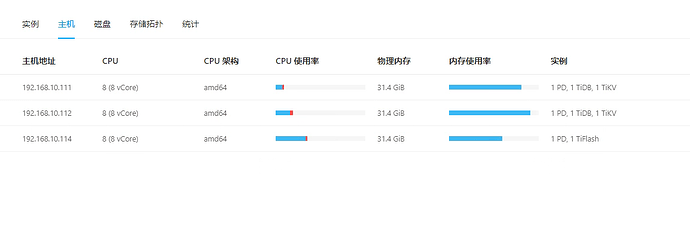

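SHOW config WHERE NAME LIKE '%replication.max-replicas%'; Was the cluster set up like this from the initial install? How many TiKV replicas do you have right now? And when did the TiDB instance on your TiFlash machine go down?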

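With two TiKV replicas, the cluster goes down as soon as one machine fails. I'd suggest 2 TiDB nodes and 3 TiKV nodes instead; in other words, swap the node counts of these two roles.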
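To put numbers on the replica advice: TiKV replicates each Region with Raft, which needs a majority of replicas alive to keep serving. A minimal sketch of that arithmetic follows; the function name `tolerated_failures` is just an illustrative helper, not anything from TiDB.

```python
def tolerated_failures(replicas: int) -> int:
    """How many replicas can be lost while a Raft group still has a majority."""
    if replicas < 1:
        raise ValueError("replicas must be >= 1")
    quorum = replicas // 2 + 1  # smallest majority of the replica set
    return replicas - quorum


for n in (2, 3, 5):
    print(f"{n} replicas: quorum={n // 2 + 1}, "
          f"tolerated failures={tolerated_failures(n)}")
```

With 2 replicas the quorum is also 2, so no failure can be absorbed; 3 replicas is the smallest count that survives the loss of one machine.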

Are your hosts running NTP?

[2023/11/16 15:50:15.859 +08:00] [WARN] [tso.go:289] ["clock offset"] [jet-lag=240.75812ms] [prev-physical=2023/11/16 15:50:15.618 +08:00] [now=2023/11/16 15:50:15.859 +08:00] [update-physical-interval=50ms]

[2023/11/16 15:50:21.836 +08:00] [WARN] [tso.go:289] ["clock offset"] [jet-lag=217.844908ms] [prev-physical=2023/11/16 15:50:21.618 +08:00] [now=2023/11/16 15:50:21.836 +08:00] [update-physical-interval=50ms]
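If you want to see how often and how badly this happens, a rough sketch like the one below can pull the `jet-lag` values out of the `clock offset` warnings. It assumes the log format matches the two lines quoted above and that the value is printed in `µs`, `ms`, or `s` only; the helper name `jet_lags_ms` is made up for this example.

```python
import re

# Matches e.g. [jet-lag=240.75812ms]; compound durations such as "1m2s"
# are not handled in this sketch.
JET_LAG_RE = re.compile(r"\[jet-lag=([0-9.]+)(µs|ms|s)\]")
TO_MS = {"µs": 0.001, "ms": 1.0, "s": 1000.0}


def jet_lags_ms(lines):
    """Yield jet-lag values in milliseconds from "clock offset" warnings."""
    for line in lines:
        if '"clock offset"' not in line:
            continue
        m = JET_LAG_RE.search(line)
        if m:
            yield float(m.group(1)) * TO_MS[m.group(2)]


# The two warnings quoted in this thread.
SAMPLE = [
    '[2023/11/16 15:50:15.859 +08:00] [WARN] [tso.go:289] ["clock offset"] [jet-lag=240.75812ms] [prev-physical=2023/11/16 15:50:15.618 +08:00] [now=2023/11/16 15:50:15.859 +08:00] [update-physical-interval=50ms]',
    '[2023/11/16 15:50:21.836 +08:00] [WARN] [tso.go:289] ["clock offset"] [jet-lag=217.844908ms] [prev-physical=2023/11/16 15:50:21.618 +08:00] [now=2023/11/16 15:50:21.836 +08:00] [update-physical-interval=50ms]',
]

lags = list(jet_lags_ms(SAMPLE))
print(f"warnings={len(lags)}, max jet-lag={max(lags):.3f} ms")
```

To run it against a real log, pass an open file object instead of `SAMPLE`.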

The logs don't show why TiFlash restarted at 2023/11/16 15:06:36.321.

[2023/11/16 15:06:10.342 +08:00] [DEBUG] [SegmentReadTaskPool.cpp:164] ["getInputStream succ, read_mode=Bitmap, pool_id=3 segment_id=101"] [source="MPP<query:<query_ts:1700118318750049354, local_query_id:7714, server_id:242317, start_ts:445675816542011401>,task_id:1> table_id=88"] [thread_id=9]

[2023/11/16 15:06:11.164 +08:00] [DEBUG] [StableValueSpace.cpp:446] ["max_data_version: 445675816542011401, enable_handle_clean_read: true, is_fast_mode: true, enable_del_clean_read: true"] [source="keyspace_id=4294967295 table_id=88 segment_id=1 epoch=3"] [thread_id=3]

[2023/11/16 15:06:11.164 +08:00] [DEBUG] [DMFilePackFilter.h:224] ["RSFilter exclude rate: 100.00, after_pk: 80, after_read_packs: 80, after_filter: 0, handle_ranges: {[-9223372036854775808,658931)}, read_packs: 0, pack_count: 80"] [source="MPP<query:<query_ts:1700118318750049354, local_query_id:7714, server_id:242317, start_ts:445675816542011401>,task_id:3> table_id=88"] [thread_id=3]

[2023/11/16 15:06:15.264 +08:00] [DEBUG] [Segment.cpp:2130] ["Begin segment getReadInfo"] [source="keyspace_id=4294967295 table_id=88 segment_id=101 epoch=13 MPP<query:<query_ts:1700118318750049354, local_query_id:7714, server_id:242317, start_ts:445675816542011401>,task_id:3> table_id=88"] [thread_id=9]

[2023/11/16 15:06:36.321 +08:00] [DEBUG] [Segment.cpp:2145] ["Finish segment getReadInfo"] [source="keyspace_id=4294967295 table_id=88 segment_id=101 epoch=13 MPP<query:<query_ts:1700118318750049354, local_query_id:7714, server_id:242317, start_ts:445675816542011401>,task_id:3> table_id=88"] [thread_id=9]

**[2023/11/16 15:07:02.673 +08:00] [INFO] [BaseDaemon.cpp:1172] ["Welcome to TiFlash"] [thread_id=1]**

[2023/11/16 15:07:02.674 +08:00] [INFO] [BaseDaemon.cpp:1173] ["Starting daemon with revision 54381"] [thread_id=1]

[2023/11/16 15:07:02.674 +08:00] [INFO] [BaseDaemon.cpp:1176] ["TiFlash build info: TiFlash\nRelease Version: v7.1.1\nEdition: Community\nGit Commit Hash: 338634ade2b7cf60c9a993c44c259bfe69b9ad7b\nGit Branch: heads/refs/tags/v7.1.1\nUTC Build Time: 2023-07-13 09:56:39\nEnable Features: jemalloc sm4(GmSSL) avx2 avx512 unwind thinlto\nProfile: RELWITHDEBINFO\n"] [thread_id=1]

[2023/11/16 15:07:02.674 +08:00] [INFO] [<unknown>] ["starting up"] [source=Application] [thread_id=1]

[2023/11/16 15:07:02.675 +08:00] [INFO] [Server.cpp:418] ["Got jemalloc version: 5.3-RC"] [thread_id=1]

[2023/11/16 15:07:02.675 +08:00] [INFO] [Server.cpp:427] ["Not found environment variable MALLOC_CONF"] [thread_id=1]

[2023/11/16 15:07:02.675 +08:00] [INFO] [Server.cpp:433] ["Got jemalloc config: opt.background_thread false, opt.max_background_threads 4"] [thread_id=1]

[2023/11/16 15:07:02.675 +08:00] [INFO] [Server.cpp:437] ["Try to use background_thread of jemalloc to handle purging asynchronously"] [thread_id=1]

[2023/11/16 15:07:02.675 +08:00] [INFO] [Server.cpp:440] ["Set jemalloc.max_background_threads 1"] [thread_id=1]

[2023/11/16 15:07:02.675 +08:00] [INFO] [Server.cpp:443] ["Set jemalloc.background_thread true"] [thread_id=1]
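To locate restarts like this one in a longer log, here is a small sketch that reports the last timestamp before every `Welcome to TiFlash` line and the length of the silent gap. It assumes every log line starts with a timestamp in the format shown above; `restart_gaps` is a hypothetical helper name, and the sample uses two lines from this thread (the first one trimmed).

```python
import re
from datetime import datetime

# Leading "**" is tolerated because the restart line was bolded in the post.
TS_RE = re.compile(
    r"^\**\[(\d{4}/\d{2}/\d{2} \d{2}:\d{2}:\d{2}\.\d{3}) [+-]\d{2}:\d{2}\]"
)


def restart_gaps(lines):
    """Return (last_ts_before_restart, restart_ts, gap_seconds) per restart."""
    gaps = []
    prev = None
    for line in lines:
        m = TS_RE.match(line)
        if not m:
            continue
        ts = datetime.strptime(m.group(1), "%Y/%m/%d %H:%M:%S.%f")
        if '"Welcome to TiFlash"' in line and prev is not None:
            gaps.append((prev, ts, (ts - prev).total_seconds()))
        prev = ts
    return gaps


SAMPLE = [
    # Trimmed copy of the last line before the restart.
    '[2023/11/16 15:06:36.321 +08:00] [DEBUG] [Segment.cpp:2145] ["Finish segment getReadInfo"]',
    '[2023/11/16 15:07:02.673 +08:00] [INFO] [BaseDaemon.cpp:1172] ["Welcome to TiFlash"] [thread_id=1]',
]

for last, restart, gap in restart_gaps(SAMPLE):
    print(f"last log {last.time()}, restart {restart.time()}, gap {gap:.3f}s")
```

For the excerpt above this gives a gap of about 26 seconds with nothing logged in between, which matches the observation that the TiFlash log itself does not explain the restart.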

We'll have to ask the vendor's experts to take a look.

There is only one TiFlash node. The TiDB instance on that TiFlash node is the one I stopped manually.

Yes, they are.

Looks like the only option is to go to the vendor.

Try starting the TiDB instance on node 114 on its own with the following command and see whether it comes up: tiup cluster start ${cluster-name} -N 192.168.10.114:4000

I was the one who stopped it manually; it can start fine.

OK, then start it manually first and keep an eye on it to see whether the same error shows up again.

OK.

Co-locating components on the same hosts tends to cause problems.

Resources are tight, so co-located deployment is the only option.