tidb 得单独部署不然pd oom很好 数据也没了

吧业务先停吧 不然数据会丢 你pd已经挂了

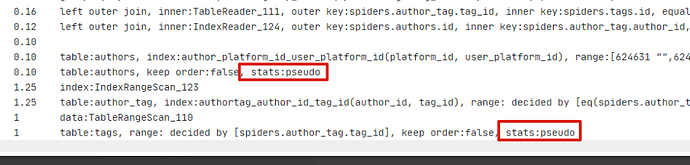

这sql的完整执行计划贴一下,看sql不应该消耗那么多资源的

只是这1台OOM,PD数据没问题,不是所有tidb节点都oom

这个统计信息不准确,说明了什么问题?有啥影响

比如实际表有10万,统计信息是100万,那执行计划就会按照100万来走了。

我刚刚执行了一次analyze table,然后再explain我的SQL,状态还是stats:pseudo

MySQL [spiders]> analyze table authors;

Query OK, 0 rows affected (2 min 17.07 sec)

MySQL [spiders]>

MySQL [spiders]> SHOW ANALYZE STATUS;

+--------------+------------+----------------+---------------------------------------------------+----------------+---------------------+---------------------+----------+

| Table_schema | Table_name | Partition_name | Job_info | Processed_rows | Start_time | End_time | State |

+--------------+------------+----------------+---------------------------------------------------+----------------+---------------------+---------------------+----------+

| spiders | authors | | analyze index author_created_time | 69070360 | 2023-09-20 18:37:15 | 2023-09-20 18:37:48 | finished |

| spiders | authors | | analyze index author_platform_id_user_id | 69070362 | 2023-09-20 18:37:15 | 2023-09-20 18:38:05 | finished |

| spiders | authors | | analyze index author_platform_id | 69070360 | 2023-09-20 18:37:48 | 2023-09-20 18:38:22 | finished |

| spiders | authors | | analyze index author_updated_time | 69070362 | 2023-09-20 18:37:15 | 2023-09-20 18:38:26 | finished |

| spiders | authors | | analyze columns | 69070362 | 2023-09-20 18:37:15 | 2023-09-20 18:38:52 | finished |

| spiders | authors | | analyze index author_crawl_level | 69070360 | 2023-09-20 18:38:22 | 2023-09-20 18:38:55 | finished |

| spiders | authors | | analyze index author_user_id | 69070360 | 2023-09-20 18:38:05 | 2023-09-20 18:38:58 | finished |

| spiders | authors | | analyze index author_platform_id_crawl_level | 69070361 | 2023-09-20 18:38:26 | 2023-09-20 18:39:03 | finished |

| spiders | authors | | analyze index author_platform_id_user_platform_id | 69070360 | 2023-09-20 18:38:49 | 2023-09-20 18:39:32 | finished |

+--------------+------------+----------------+---------------------------------------------------+----------------+---------------------+---------------------+----------+

9 rows in set (0.00 sec)

统计信息不准就很慢

分析可以参考这里的思路

能不能先将author_tag 和tag 的group by 完后再去和authors join 呢? 从执行计划来看group by 是在tidb 上对所有join 完的数据进行聚合的,这些数据占用内存。针对这个sql, authors 其实可以不参与group by。最好能将group by 下推到tikv或者是用tiflash。

1 个赞

都部署在一台上了?

进入到 TiDB Dashboard -集群信息 (Cluster Info) -主机(Hosts) 截图此页面

发一下你的配置看一看

看看free -h

优化sql吧