【 TiDB 使用环境】测试

【 TiDB 版本】v5.4.0

【复现路径】通过flink +TIKV Client 写入数据较慢

【遇到的问题:问题现象及影响】 现在遇到 tidb 写瓶颈,想通过tikv 两阶段提交 来优化写

使用flink Stream API 开发 自定义Sink :

两阶段提交代码如下可参考:

public void KVSet(TiSession session, @Nonnull List pairs) {

System.out.println("最后要进行put .size() = " + pairs.size());

Iterator iterator = pairs.iterator();

if (!iterator.hasNext()) {

return;

}

twoPhaseCommitter = new TwoPhaseCommitter(session, session.getTimestamp().getVersion());

BytePairWrapper primaryPair = iterator.next();

try {

twoPhaseCommitter.prewritePrimaryKey(

ConcreteBackOffer.newCustomBackOff(2000),

primaryPair.getKey(),

primaryPair.getValue());

if (iterator.hasNext()) {

twoPhaseCommitter.prewriteSecondaryKeys(

primaryPair.getKey(), iterator, 2000);

}

twoPhaseCommitter.commitPrimaryKey(

ConcreteBackOffer.newCustomBackOff(1000),

primaryPair.getKey(),

session.getTimestamp().getVersion());

System.out.println("这里提交了两阶段提交");

} catch (Throwable ignored) {

System.out.println("这里出现异常");

ignored.printStackTrace();

} finally {

try {

twoPhaseCommitter.close();

} catch (Exception e) {

System.out.println("这里又出现异常");

e.printStackTrace();

}

}

}

写入测试 后的数据记录:

| 条数 | 时间s |

|---|---|

| 14127 | 114 |

| 255 | 2 |

| 1 | 0 |

| 13623 | 97 |

| 330 | 2 |

| 1 | 0 |

| 27644 | 194 |

| 441 | 3 |

| 1 | 0 |

| 26576 | 201 |

| 7309 | 56 |

| 511 | 4 |

| 1 | 0 |

| 25397 | 205 |

| 156 | 1 |

| 1 | 0 |

| 13105 | 98 |

| 471 | 3 |

| 1 | 0 |

| 1152 | 9 |

| 25517 | 206 |

| 359 | 2 |

| 1 | 0 |

| 54 | 0 |

| 20234 | 156 |

| 574 | 4 |

| 1 | 0 |

| 19090 | 153 |

| 39 | 0 |

| 6347 | 46 |

| 464 | 3 |

| 1 | 0 |

| 16867 | 129 |

| 280 | 2 |

| 1 | 0 |

| 23832 | 179 |

| 529 | 4 |

| 1 | 0 |

| 30000 | 228 |

| 6101 | 49 |

| 212 | 1 |

| 1 | 0 |

| 22112 | 180 |

| 1 | 0 |

| 20835 | 167 |

| 331 | 2 |

| 1 | 0 |

| 23988 | 193 |

| 192 | 1 |

| 1 | 0 |

| 6216 | 50 |

| 105 | 0 |

| 1 | 0 |

| 24433 | 197 |

| 68 | 0 |

| 1619 | 13 |

| 35 | 0 |

| 18363 | 148 |

| 415 | 3 |

| 1 | 0 |

| 3944 | 31 |

| 248 | 1 |

| 1 | 0 |

| 2351 | 18 |

| 411 | 3 |

| 1 | 0 |

| 17074 | 124 |

| 419 | 3 |

| 1 | 0 |

| 1780 | 14 |

| 290 | 2 |

| 1 | 0 |

| 8375 | 67 |

| 35 | 0 |

| 1 | 0 |

| 23907 | 194 |

| 296 | 2 |

| 1 | 0 |

| 23032 | 182 |

| 400 | 3 |

| 1 | 0 |

| 10695 | 86 |

| 557 | 4 |

| 1 | 0 |

| 8764 | 57 |

| 488 | 3 |

| 1 | 0 |

| 1451 | 11 |

| 279 | 2 |

| 1 | 0 |

| 15296 | 107 |

| 306 | 2 |

| 1 | 0 |

| 7985 | 57 |

| 556 | 4 |

| 1 | 0 |

| 19561 | 143 |

| 230 | 1 |

| 1 | 0 |

| 11910 | 91 |

| 6 | 0 |

| 59 | 0 |

| 17334 | 131 |

| 232 | 1 |

| 1 | 0 |

| 10884 | 76 |

| 133 | 0 |

| 1 | 0 |

| 29803 | 230 |

| 64 | 0 |

| 1 | 0 |

| 27620 | 209 |

| 283 | 2 |

| 1 | 0 |

| 26211 | 196 |

| 288 | 2 |

| 1 | 0 |

| 609 | 4 |

| 72 | 0 |

| 1 | 0 |

| 13061 | 100 |

| 161 | 1 |

| 1 | 0 |

| 8906 | 72 |

| 396 | 3 |

| 1 | 0 |

| 9419 | 74 |

| 346 | 2 |

| 1 | 0 |

| 12576 | 101 |

| 227 | 2 |

| 1 | 0 |

| 1087 | 8 |

| 6608 | 53 |

| 2 | 0 |

| 11010 | 88 |

| 473 | 3 |

| 1 | 0 |

| 18498 | 150 |

| 593 | 4 |

| 1 | 0 |

| 4146 | 33 |

| 456 | 3 |

| 1 | 0 |

| 16378 | 126 |

| 394 | 3 |

| 1 | 0 |

| 1547 | 12 |

| 45 | 0 |

| 13211 | 101 |

| 226 | 1 |

| 1 | 0 |

| 25327 | 191 |

| 420 | 3 |

| 1 | 0 |

| 25971 | 196 |

| 91 | 0 |

| 1 | 0 |

| 16258 | 120 |

| 48 | 0 |

| 2 | 0 |

| 20119 | 153 |

| 213 | 1 |

| 1 | 0 |

| 1451 | 11 |

| 1 | 0 |

| 20958 | 159 |

| 297 | 2 |

| 2 | 0 |

| 14308 | 109 |

| 39 | 0 |

| 2852 | 22 |

| 447 | 3 |

| 1 | 0 |

| 2269 | 17 |

| 368 | 2 |

| 1 | 0 |

| 16736 | 126 |

| 130 | 1 |

| 1 | 0 |

| 18719 | 150 |

| 71 | 0 |

| 1 | 0 |

| 16420 | 132 |

| 476 | 3 |

| 2 | 0 |

平均 每秒 130.2条/s

写入设置:最大批次 写入条数 30000条,最大 批次 时间1s

同时我也进行了 最大批次 3000 条数据,最大批次时间1s

结果也是差不多;

我写的方式是单个task ,单线程写入,完全不及JDBC ,大失所望 ![]()

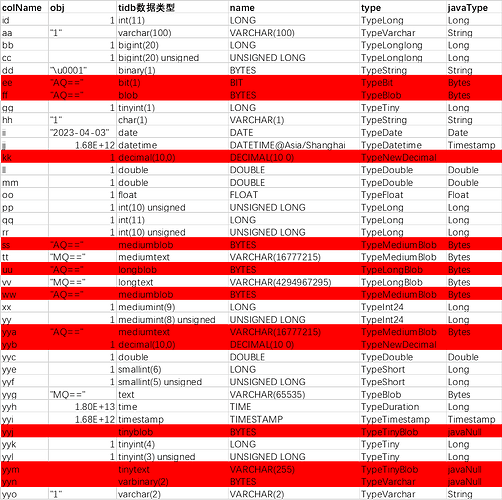

这里再分享一下自己汇总的 tidb Column.type 和java 数据类型的转化对比 type

最后一个疑问: 我们通过tidb JDBC 写入数据在底层实现也是 tikv kvClient put /get /delete 实现的么?是我自己写的方式需要优化么?