I tested this myself, though on version 7.0, and it is also very slow. Test results:

mysql> select version();

+--------------------+

| version() |

+--------------------+

| 5.7.25-TiDB-v7.0.0 |

+--------------------+

1 row in set (0.00 sec)

mysql> explain analyze select * from customer order by C_ADDRESS desc;

+-------------------------+-------------+----------+-----------+----------------+----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+---------------------------------+-----------+---------+

| id | estRows | actRows | task | access object | execution info | operator info | memory | disk |

+-------------------------+-------------+----------+-----------+----------------+----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+---------------------------------+-----------+---------+

| Sort_4 | 15000000.00 | 15000000 | root | | time:11m31.8s, loops:14650, RRU:94859.635554, WRU:0.000000 | tpch100.customer.c_address:desc | 1011.0 MB | 3.09 GB |

| └─TableReader_8 | 15000000.00 | 15000000 | root | | time:17.4s, loops:14686, cop_task: {num: 565, max: 2.18s, min: 2.13ms, avg: 430.5ms, p95: 1.3s, max_proc_keys: 50144, p95_proc_keys: 50144, tot_proc: 2m24.4s, tot_wait: 1.22s, rpc_num: 565, rpc_time: 4m3.2s, copr_cache_hit_ratio: 0.00, build_task_duration: 17.4ms, max_distsql_concurrency: 15} | data:TableFullScan_7 | 180.3 MB | N/A |

| └─TableFullScan_7 | 15000000.00 | 15000000 | cop[tikv] | table:customer | tikv_task:{proc max:1.86s, min:1ms, avg: 302.2ms, p80:559ms, p95:916ms, iters:16879, tasks:565}, scan_detail: {total_process_keys: 15000000, total_process_keys_size: 3052270577, total_keys: 15000565, get_snapshot_time: 262.7ms, rocksdb: {key_skipped_count: 15000000, block: {cache_hit_count: 5046, read_count: 49981, read_byte: 1.13 GB, read_time: 32.1s}}} | keep order:false | N/A | N/A |

+-------------------------+-------------+----------+-----------+----------------+----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+---------------------------------+-----------+---------+

3 rows in set (11 min 32.36 sec)

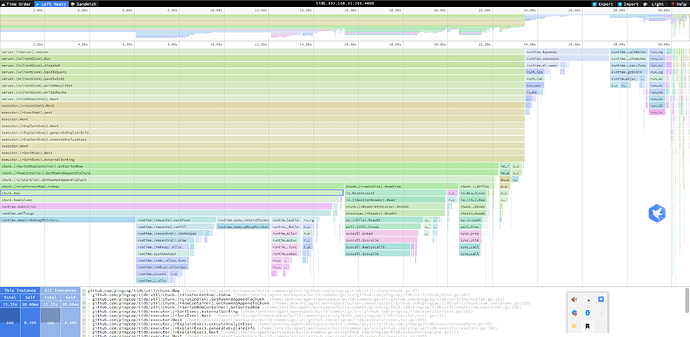

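In the EXPLAIN ANALYZE output, TableReader_8 finishes in 17.4s, while Sort_4 takes 11m31.8s in total and spills 3.09 GB to disk. Looking at where that time goes, the actual sorting is very short. Most of it is spent in two places: (1) reading data back from disk in chunk.(*rowInDisk).ReadFrom (a long-standing problem); (2) the chunk.New function, where the cost ultimately comes from runtime.mallocgc: allocating new objects once the previous ones have been used up consumes a large amount of CPU time.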
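A minimal Go sketch of the allocation pattern described above, assuming a hypothetical length-prefixed spill format (this is not TiDB's real `rowInDisk` layout, and `encodeRows` / `readRows` are illustrative names, not TiDB APIs). Reading each spilled row into a freshly allocated slice costs one heap allocation, i.e. one `runtime.mallocgc` call, per row; decoding into a reused scratch buffer keeps the steady state allocation-free.

```go
package main

import (
	"bytes"
	"encoding/binary"
	"fmt"
	"io"
	"testing"
)

// encodeRows lays rows out as [4-byte little-endian length][row bytes].
// Hypothetical spill format for illustration only.
func encodeRows(rows [][]byte) []byte {
	var buf bytes.Buffer
	var hdr [4]byte
	for _, r := range rows {
		binary.LittleEndian.PutUint32(hdr[:], uint32(len(r)))
		buf.Write(hdr[:])
		buf.Write(r)
	}
	return buf.Bytes()
}

// readRows decodes every row from data and passes it to visit.
// reuse=false: a fresh slice per row (one heap allocation each).
// reuse=true: one scratch buffer that only grows; the slice passed to
// visit is then valid only until the next row is read.
func readRows(data []byte, reuse bool, visit func([]byte)) error {
	r := bytes.NewReader(data)
	var hdr [4]byte
	var scratch []byte
	for {
		if _, err := io.ReadFull(r, hdr[:]); err == io.EOF {
			return nil // clean end of input
		} else if err != nil {
			return err // truncated header
		}
		n := int(binary.LittleEndian.Uint32(hdr[:]))
		var row []byte
		if reuse {
			if cap(scratch) < n {
				scratch = make([]byte, n) // grow only when a longer row appears
			}
			row = scratch[:n]
		} else {
			row = make([]byte, n) // per-row allocation
		}
		if _, err := io.ReadFull(r, row); err != nil {
			return err
		}
		visit(row)
	}
}

func main() {
	rows := make([][]byte, 1000)
	for i := range rows {
		rows[i] = bytes.Repeat([]byte{byte(i)}, 100)
	}
	data := encodeRows(rows)

	noop := func([]byte) {} // consumes rows without allocating

	fresh := testing.AllocsPerRun(10, func() { _ = readRows(data, false, noop) })
	reused := testing.AllocsPerRun(10, func() { _ = readRows(data, true, noop) })
	fmt.Printf("allocs per pass: fresh=%.0f reused=%.0f\n", fresh, reused)
}
```

`testing.AllocsPerRun` reports the average number of allocations per call: the fresh-slice path should scale with the row count, while the reused path stays near a small constant. The catch is lifetime: a reused buffer is only valid until the next row, which is why reuse gets harder once rows are copied into chunks that outlive the read.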

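So I wonder whether, compared with 4.x, something changed in how new chunks are created when data is read from disk back into memory. It looks as if the GC work after chunk data has been returned to the client is what costs so much time. I wonder whether an arena-like technique, manually reclaiming memory in large blocks (several consecutive chunks at a time), would work better.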
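The arena idea can be sketched as a simple bump allocator in Go. This is a hypothetical illustration, not TiDB code: `blockArena`, `Alloc` and `Reset` are made-up names, and Go's experimental `arena` package (available only with `GOEXPERIMENT=arenas`) is deliberately not used. Row buffers are carved out of large blocks, and `Reset` releases a whole batch (for example, several chunks already sent to the client) in one step, so the GC no longer sees one object per row.

```go
package main

import "fmt"

// blockArena is a hypothetical bump allocator: row buffers are carved out
// of large fixed-size blocks, and Reset releases all of them at once.
type blockArena struct {
	blockSize int
	blocks    [][]byte // every block allocated so far; kept across Reset
	cur       int      // index of the block currently being filled
	off       int      // next free offset inside blocks[cur]
}

func newBlockArena(blockSize int) *blockArena {
	return &blockArena{blockSize: blockSize}
}

// Alloc returns an n-byte slice backed by arena memory. Requests larger
// than blockSize fall back to a normal allocation (kept simple here).
func (a *blockArena) Alloc(n int) []byte {
	if n > a.blockSize {
		return make([]byte, n)
	}
	// Move to the next block when the current one cannot fit n bytes.
	if a.cur < len(a.blocks) && a.off+n > a.blockSize {
		a.cur++
		a.off = 0
	}
	// Allocate a new block only if no previously used block is available.
	if a.cur == len(a.blocks) {
		a.blocks = append(a.blocks, make([]byte, a.blockSize))
	}
	// Three-index slice caps the result so an append cannot overwrite a neighbour.
	buf := a.blocks[a.cur][a.off : a.off+n : a.off+n]
	a.off += n
	return buf
}

// Reset invalidates every slice handed out so far and rewinds to the first
// block, so the next batch reuses the same memory. Contents are not zeroed.
func (a *blockArena) Reset() {
	a.cur, a.off = 0, 0
}

func main() {
	a := newBlockArena(4096)
	for batch := 0; batch < 3; batch++ {
		for i := 0; i < 100; i++ {
			row := a.Alloc(100)
			row[0] = byte(i) // stand-in for decoding a row into arena memory
		}
		// The whole batch is released at once; no per-row garbage is left.
		a.Reset()
	}
	fmt.Println("blocks after 3 batches:", len(a.blocks)) // prints: blocks after 3 batches: 3
}
```

The blocks themselves are still ordinary GC-managed slices; what the sketch saves is the per-row allocation and the per-row garbage. The price is manual lifetime management: any slice handed out before `Reset` must not be used afterwards, which is the main risk of applying this inside an executor.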