A query over 5 tables with fairly large data volumes, all of which have TiFlash replicas, fails after 22.359 sec with: `Error Code: 1105. rpc error: code = Unavailable desc = error reading from server: EOF`

CPU and memory usage stay fairly low while the query runs (server: 32 cores, 128 GB RAM).

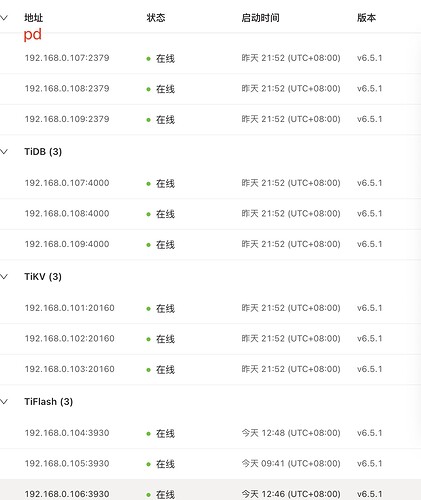

TiFlash logs as shown in the Dashboard:

2023-03-20 09:36:54 (UTC+08:00)

TiFlash 192.168.0.105:3930

`[kv.rs:671] ["KvService::batch_raft send response fail"] [err=RemoteStopped]`

2023-03-20 09:36:54 (UTC+08:00)

TiFlash 192.168.0.105:3930

`[raft_client.rs:562] ["connection aborted"] [addr=192.168.0.106:20170] [receiver_err="Some(RpcFailure(RpcStatus { code: 14-UNAVAILABLE, message: \"Socket closed\", details: [] }))"] [sink_error="Some(RpcFinished(Some(RpcStatus { code: 14-UNAVAILABLE, message: \"Socket closed\", details: [] })))"] [store_id=152]`

2023-03-20 09:36:54 (UTC+08:00)

TiFlash 192.168.0.105:3930

`[raft_client.rs:858] ["connection abort"] [addr=192.168.0.106:20170] [store_id=152]`

2023-03-20 09:36:54 (UTC+08:00)

TiFlash 192.168.0.104:3930

`[kv.rs:671] ["KvService::batch_raft send response fail"] [err=RemoteStopped]`

2023-03-20 09:36:54 (UTC+08:00)

TiFlash 192.168.0.104:3930

`[raft_client.rs:562] ["connection aborted"] [addr=192.168.0.106:20170] [receiver_err="Some(RpcFailure(RpcStatus { code: 14-UNAVAILABLE, message: \"Socket closed\", details: [] }))"] [sink_error="Some(RpcFinished(Some(RpcStatus { code: 14-UNAVAILABLE, message: \"Socket closed\", details: [] })))"] [store_id=152]`

2023-03-20 09:36:54 (UTC+08:00)

TiFlash 192.168.0.104:3930

`[raft_client.rs:858] ["connection abort"] [addr=192.168.0.106:20170] [store_id=152]`

2023-03-20 09:36:59 (UTC+08:00)

TiFlash 192.168.0.105:3930

`[raft_client.rs:821] ["wait connect timeout"] [addr=192.168.0.106:20170] [store_id=152]`

2023-03-20 09:36:59 (UTC+08:00)

TiFlash 192.168.0.104:3930

`[raft_client.rs:821] ["wait connect timeout"] [addr=192.168.0.106:20170] [store_id=152]`

2023-03-20 09:37:04 (UTC+08:00)

TiFlash 192.168.0.105:3930

`[raft_client.rs:821] ["wait connect timeout"] [addr=192.168.0.106:20170] [store_id=152]`

2023-03-20 09:37:04 (UTC+08:00)

TiFlash 192.168.0.104:3930

`[raft_client.rs:821] ["wait connect timeout"] [addr=192.168.0.106:20170] [store_id=152]`
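To make the pattern in these log entries easier to see, here is a small hypothetical Python helper (not part of any TiDB/TiFlash tooling) that tallies them by TiFlash node, message and target address. It assumes the entries were copied out of the Dashboard as (node, message) pairs, with quotes normalized to ASCII and the long `receiver_err`/`sink_error` fields dropped. Applied to the entries in this post, it shows that both TiFlash nodes (192.168.0.104 and 192.168.0.105) lose their raft connection to the same peer, store 152 at 192.168.0.106:20170, and then keep reporting `wait connect timeout` roughly every 5 seconds.

```python
import re
from collections import Counter

# (TiFlash node, log message) pairs copied from the Dashboard output above.
# Assumption: quotes normalized to ASCII, receiver_err/sink_error omitted.
ENTRIES = [
    ("192.168.0.105:3930", '[kv.rs:671] ["KvService::batch_raft send response fail"] [err=RemoteStopped]'),
    ("192.168.0.105:3930", '[raft_client.rs:562] ["connection aborted"] [addr=192.168.0.106:20170] [store_id=152]'),
    ("192.168.0.105:3930", '[raft_client.rs:858] ["connection abort"] [addr=192.168.0.106:20170] [store_id=152]'),
    ("192.168.0.104:3930", '[kv.rs:671] ["KvService::batch_raft send response fail"] [err=RemoteStopped]'),
    ("192.168.0.104:3930", '[raft_client.rs:562] ["connection aborted"] [addr=192.168.0.106:20170] [store_id=152]'),
    ("192.168.0.104:3930", '[raft_client.rs:858] ["connection abort"] [addr=192.168.0.106:20170] [store_id=152]'),
    ("192.168.0.105:3930", '[raft_client.rs:821] ["wait connect timeout"] [addr=192.168.0.106:20170] [store_id=152]'),
    ("192.168.0.104:3930", '[raft_client.rs:821] ["wait connect timeout"] [addr=192.168.0.106:20170] [store_id=152]'),
    ("192.168.0.105:3930", '[raft_client.rs:821] ["wait connect timeout"] [addr=192.168.0.106:20170] [store_id=152]'),
    ("192.168.0.104:3930", '[raft_client.rs:821] ["wait connect timeout"] [addr=192.168.0.106:20170] [store_id=152]'),
]

MSG_RE = re.compile(r'\["([^"]+)"\]')          # first quoted field = message
ADDR_RE = re.compile(r'\[addr=([^\]]+)\]')     # peer address field
STORE_RE = re.compile(r'\[store_id=(\d+)\]')   # peer store id field

CONNECTION_ERRORS = {"connection aborted", "connection abort", "wait connect timeout"}


def parse(line):
    """Extract (message, addr, store_id) from one log message; '-' if absent."""
    msg = MSG_RE.search(line)
    addr = ADDR_RE.search(line)
    store = STORE_RE.search(line)
    return (
        msg.group(1) if msg else "?",
        addr.group(1) if addr else "-",
        store.group(1) if store else "-",
    )


def summarize(entries):
    """Count occurrences of each (node, message, addr, store_id) combination."""
    counts = Counter()
    for node, line in entries:
        counts[(node, *parse(line))] += 1
    return counts


def peer_targets(entries):
    """Collect the (addr, store_id) peers that raft_client lost or timed out on."""
    targets = set()
    for _node, line in entries:
        msg, addr, store = parse(line)
        if msg in CONNECTION_ERRORS:
            targets.add((addr, store))
    return targets


if __name__ == "__main__":
    for (node, msg, addr, store), n in sorted(summarize(ENTRIES).items()):
        print(f"{node} -> {addr} (store {store}): {msg} x{n}")
    print("peers with connection errors:", peer_targets(ENTRIES))
```

The tally is keyed on the full tuple so the per-node counts stay separate; `peer_targets` then collapses them to show that every connection error points at a single peer.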