1. The TiDB log shows the following error:

[2019/09/02 11:06:24.867 +08:00] [ERROR] [session.go:1025] ["active transaction fail"] [error="[tikv:9001]PD server timeout[try again later]"] [errorVerbose="[tikv:9001]PD server timeout[try again later]

2. Next, check the log on the PD node:

2019/09/02 11:06:32.833 log.go:84: [warning] etcdserver: [server is likely overloaded]

2019/09/02 11:06:33.805 log.go:84: [warning] rafthttp: [closed an existing TCP streaming connection with peer 1271ec2a36ae23ca (stream Message writer)]

2019/09/02 11:06:33.805 log.go:88: [info] rafthttp: [established a TCP streaming connection with peer 1271ec2a36ae23ca (stream Message writer)]

2019/09/02 11:06:33.807 log.go:84: [warning] rafthttp: [closed an existing TCP streaming connection with peer 1271ec2a36ae23ca (stream MsgApp v2 writer)]

2019/09/02 11:06:33.807 log.go:88: [info] rafthttp: [established a TCP streaming connection with peer 1271ec2a36ae23ca (stream MsgApp v2 writer)]

2019/09/02 11:06:33.956 log.go:84: [warning] etcdserver: [read-only range request "key:"/tidb/store/gcworker/saved_safe_point" " took too long (4.270724089s) to execute]

2019/09/02 11:06:33.960 util.go:214: [warning] txn runs too slow, resp: &{cluster_id:5826383677316915514 member_id:8230488343191936483 revision:4003011 raft_term:253 true [response_put:<header:<revision:4003011 > > ]}, err: , cost: 3.241257913s

2019/09/02 11:06:33.961 tso.go:137: [warning] clock offset: 3.241515268s, prev: 2019-09-02 11:06:30.719518173 +0800 CST m=+2080575.748806204, now: 2019-09-02 11:06:33.961033441 +0800 CST m=+2080578.990321518

2019/09/02 11:06:40.337 log.go:84: [warning] rafthttp: [the clock difference against peer 1271ec2a36ae23ca is too high [59.933040521s > 1s]]

2019/09/02 11:07:01.471 tso.go:137: [warning] clock offset: 501.587848ms, prev: 2019-09-02 11:07:00.969478327 +0800 CST m=+2080605.998766381, now: 2019-09-02 11:07:01.471066175 +0800 CST m=+2080606.500354199

2019/09/02 11:07:10.338 log.go:84: [warning] rafthttp: [the clock difference against peer 1271ec2a36ae23ca is too high [59.932763341s > 1s]]

2019/09/02 11:07:32.566 log.go:84: [warning] wal: [sync duration of 1.596602305s, expected less than 1s]

2019/09/02 11:07:32.566 log.go:84: [warning] etcdserver: [failed to send out heartbeat on time (exceeded the 500ms timeout for 610.615821ms)]

2019/09/02 11:07:32.566 log.go:84: [warning] etcdserver: [server is likely overloaded]

2019/09/02 11:07:33.995 cluster_info.go:495: [info] [region 2567] Leader changed from store {1} to {6}

2019/09/02 11:07:34.957 log.go:84: [warning] rafthttp: [lost the TCP streaming connection with peer 1271ec2a36ae23ca (stream Message reader)]

2019/09/02 11:07:34.957 log.go:82: [error] rafthttp: [failed to read 1271ec2a36ae23ca on stream Message (read tcp 10.50.70.16:49744->10.50.70.17:2380: i/o timeout)]

The PD log points to several problems:

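1. The server clocks are out of sync and should be corrected. rafthttp reports a clock difference of about 59.93s against peer 1271ec2a36ae23ca, far above the 1s threshold. Synchronize the machines' clocks, for example with NTP.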

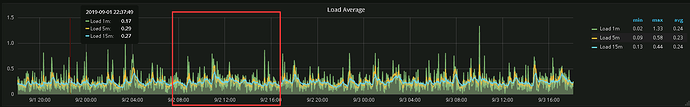

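2. Server overload and I/O timeouts. The log shows etcdserver warning that the server is likely overloaded, a WAL sync taking about 1.6s (expected under 1s), a heartbeat sent late, and an i/o timeout reading from peer 1271ec2a36ae23ca (10.50.70.17:2380). Check the load on the machine hosting PD, such as CPU usage, and also whether other processes (for example Prometheus) are running on the same machine. Judging from the current logs, PD is overloaded, which causes TiDB to time out when fetching TSO (the `PD server timeout` error in the TiDB log).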
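As a quick first pass before looking at CPU and disk metrics, the overload-related warnings can be counted over a whole PD log. The Go sketch below assumes the substrings taken from the log lines above are representative; the list is illustrative rather than a complete catalogue of PD/etcd overload messages, and `countOverloadSignals` is a hypothetical helper.

```go
package main

import (
	"fmt"
	"strings"
)

// overloadSignals are substrings copied from the PD (embedded etcd) warnings in this thread.
// Assumption: this short list is illustrative, not exhaustive.
var overloadSignals = []string{
	"server is likely overloaded",
	"failed to send out heartbeat on time",
	"wal: [sync duration of",
	"took too long",
	"txn runs too slow",
	"i/o timeout",
}

// countOverloadSignals returns, for each signal, how many log lines contain it.
func countOverloadSignals(lines []string) map[string]int {
	counts := make(map[string]int)
	for _, line := range lines {
		for _, sig := range overloadSignals {
			if strings.Contains(line, sig) {
				counts[sig]++
			}
		}
	}
	return counts
}

func main() {
	lines := []string{
		`2019/09/02 11:06:32.833 log.go:84: [warning] etcdserver: [server is likely overloaded]`,
		`2019/09/02 11:06:33.956 log.go:84: [warning] etcdserver: [read-only range request "key:"/tidb/store/gcworker/saved_safe_point" " took too long (4.270724089s) to execute]`,
		`2019/09/02 11:07:32.566 log.go:84: [warning] wal: [sync duration of 1.596602305s, expected less than 1s]`,
		`2019/09/02 11:07:32.566 log.go:84: [warning] etcdserver: [failed to send out heartbeat on time (exceeded the 500ms timeout for 610.615821ms)]`,
		`2019/09/02 11:07:32.566 log.go:84: [warning] etcdserver: [server is likely overloaded]`,
	}
	counts := countOverloadSignals(lines)
	// Iterate over the slice (not the map) so the report order is stable.
	for _, sig := range overloadSignals {
		if n := counts[sig]; n > 0 {
			fmt.Printf("%-40s %d\n", sig, n)
		}
	}
}
```

Frequent `wal: [sync duration` warnings in particular point at slow disk fsync, so disk I/O is worth checking alongside CPU.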