sseacher

(sseacher)

1

为提高效率,提问时请提供以下信息,问题描述清晰可优先响应。

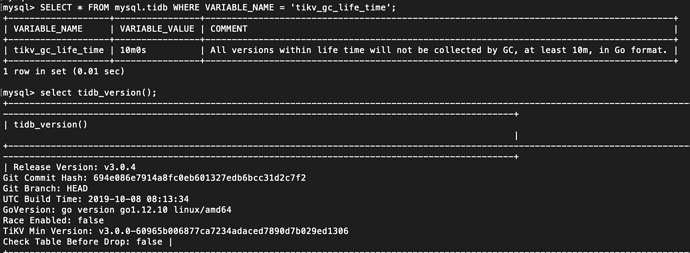

- 【TiDB 版本】:3.0.4

- 【问题描述】:使用mydumper从tidb全量备份数据,准备导入mysql,-t 1 -F 32的情况下还是会有oom,网上查说版本升级到新版本就行,但是我的都是新版本,目前设置tidb.yml的如下:

oom-action: "cancel"

# Set the memory quota for a query in bytes. Default: 32GB

# set to 128MB

mem-quota-query: 134217728

./tidb-tools/bin/mydumper -V

mydumper 0.9.5 (ba3946edc087e87bba7cb2728bc2407f6dbd0a8b), built against MySQL 5.7.24

[2019/11/28 14:34:39.824 +08:00] [WARN] [conn.go:668] ["dispatch error"] [conn=11021] [connInfo="id:11021, addr: status:3, collation:latin1_swedish_ci, user:user"]

[sql="SELECT /*!40001 SQL_NO_CACHE */ * FROM `online_db`.`table2`"]

[err="Out Of Memory Quota![conn_id=11021]

github.com/pingcap/tidb/store/tikv.(*copIteratorWorker).handleTask.func1

/home/jenkins/workspace/release_tidb_3.0/go/src/github.com/pingcap/tidb/store/tikv/coprocessor.go:638

runtime.gopanic

/usr/local/go/src/runtime/panic.go:522

github.com/pingcap/tidb/util/memory.(*PanicOnExceed).Action

/home/jenkins/workspace/release_tidb_3.0/go/src/github.com/pingcap/tidb/util/memory/action.go:91

github.com/pingcap/tidb/util/memory.(*Tracker).Consume

/home/jenkins/workspace/release_tidb_3.0/go/src/github.com/pingcap/tidb/util/memory/tracker.go:152

github.com/pingcap/tidb/store/tikv.(*copIteratorWorker).sendToRespCh

/home/jenkins/workspace/release_tidb_3.0/go/src/github.com/pingcap/tidb/store/tikv/coprocessor.go:571

github.com/pingcap/tidb/store/tikv.(*copIteratorWorker).handleCopResponse

/home/jenkins/workspace/release_tidb_3.0/go/src/github.com/pingcap/tidb/store/tikv/coprocessor.go:846

github.com/pingcap/tidb/store/tikv.(*copIteratorWorker).handleTaskOnce

/home/jenkins/workspace/release_tidb_3.0/go/src/github.com/pingcap/tidb/store/tikv/coprocessor.go:702

github.com/pingcap/tidb/store/tikv.(*copIteratorWorker).handleTask

/home/jenkins/workspace/release_tidb_3.0/go/src/github.com/pingcap/tidb/store/tikv/coprocessor.go:645

github.com/pingcap/tidb/store/tikv.(*copIteratorWorker).run

/home/jenkins/workspace/release_tidb_3.0/go/src/github.com/pingcap/tidb/store/tikv/coprocessor.go:475

runtime.goexit

/usr/local/go/src/runtime/asm_amd64.s:1337"]

备份 tidb 数据时,使用的完整 mydumper 命令帮忙提供下吧

sseacher

(sseacher)

3

./tidb-tools/bin/mydumper -h xxx -P xxx -u user -p ‘xxx’ -t 1 -F 64 -B online_db -T table1,table2,table3 --skip-tz-utc -o ./data

QBin

(Bin)

4

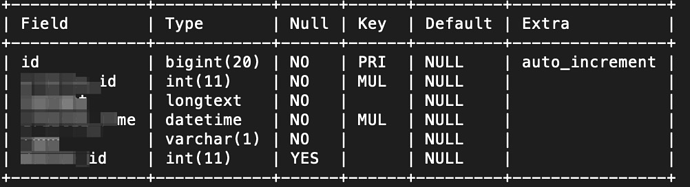

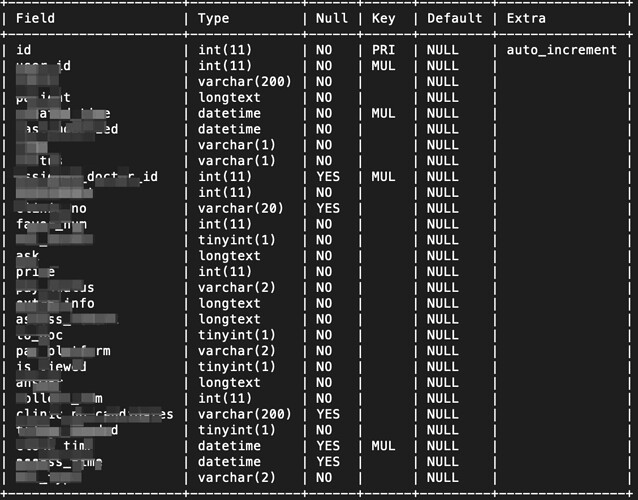

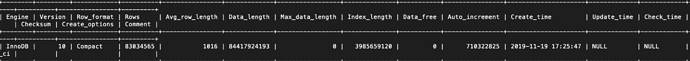

请问报错中的表有多大, mem-quota-query 设置的 128M 是有点小。

sseacher

(sseacher)

5

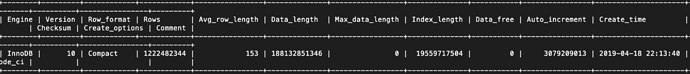

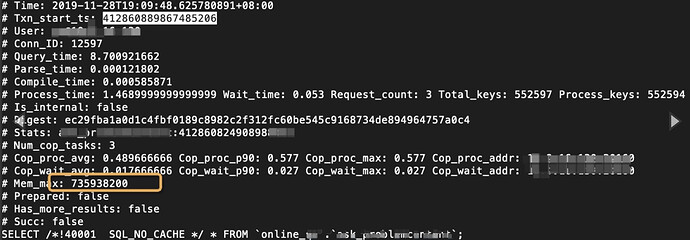

看了下slow_query,对应的Mem_max大出来好多,就像-F参数没有效一样。

# Time: 2019-11-28T14:34:35.292752162+08:00

# Txn_start_ts: 412856579394371599

# User:

# Conn_ID: 11022

# Query_time: 10.064219582

# Parse_time: 0.000039352

# Compile_time: 0.003376505

# Process_time: 1.552 Wait_time: 0.002 Request_count: 3 Total_keys: 244786 Process_keys: 244783

# Is_internal: false

# Digest: 556ae3df6523155c5e3c2d6d233f87b76e7c1d22d74fab05bf87918bd1aa2100

# Stats: table1:412856578109865991

# Num_cop_tasks: 3

# Cop_proc_avg: 0.517333333 Cop_proc_p90: 0.632 Cop_proc_max: 0.632 Cop_proc_addr: xxxx

# Cop_wait_avg: 0.000666666 Cop_wait_p90: 0.002 Cop_wait_max: 0.002 Cop_wait_addr: xxxx

# Mem_max: **1339255668** 【比配置的也大了好多,感觉-F参数失效了一样?】

# Prepared: false

# Has_more_results: false

# Succ: false

SELECT /*!40001 SQL_NO_CACHE */ * FROM xxx

对应的tidb.log里面因为配置的128M的mem-quota-query,所以先结束了,request_count也比slow_query里面的少,也就是查询继续执行了,直到mem_max到了1G+才被kill,Succ=False

[2019/11/28 14:34:27.836 +08:00] [WARN] [expensivequery.go:160] [expensive_query] [cost_time=2.5652049249999997s]

[process_time=0.92s] [wait_time=0.92s] [request_count=2] [total_keys=154618] [process_keys=154616] [num_cop_tasks=2]

[process_avg_time=0.46s] [process_p90_time=0.471s] [process_max_time=0.471s] [process_max_addr=xxx] [wait_avg_time=0.001s] [wait_p90_time=0.002s] [wait_max_time=0.002s] [wait_max_addr=xxx] [stats=xxx]

[conn_id=11022] [user=user] [table_ids="[39079]"]

[txn_start_ts=**412856579394371599**]

[mem_max="133.18398761749268 MB"]

[sql="SELECT /*!40001 SQL_NO_CACHE */ * FROM xxx"]

sseacher

(sseacher)

6

报错的表挺大的,都是几千万上亿的数据,mem-quota-query的设置主要是为了防止线上访问的异常内存占用

sseacher

(sseacher)

11

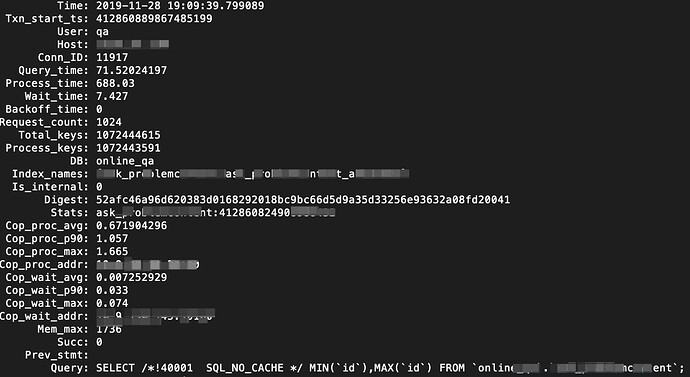

table1:

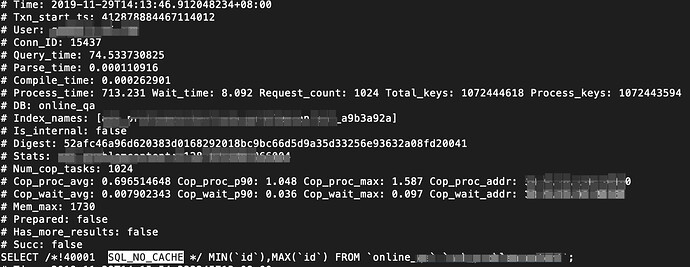

这个改用-r后,会看到有查询是获取了最大id和最小id,但是后续的查询并没有用id做range查询

./tidb-tools/bin/mydumper -h xxx -P xxx -u xxx -p xxx-t 1 -r 2000 -B online_db -T table1 --skip-tz-utc -o ./online_db

^[[D

** (mydumper:42504): CRITICAL **: 20:55:39.131: Could not read data from online_db.table1: Out Of Memory Quota![conn_id=12597]

table2:

这个改用-r后正常,对应的查询语句会用id进行range查询

sseacher

(sseacher)

13

好的,辛苦了,另外说明一下,我们最早是2.0 GA -> 2.1.9,然后再升的3.0.4;还有个问题就是-r出来的小文件数可能会太多,会不会文件数操过限制,看文件名结尾的数字位数也有限

QBin

(Bin)

15

./tidb-tools/bin/mydumper -h xxx -P xxx -u xxx -p xxx-t 1 -r 2000 -B online_db -T table1 --skip-tz-utc -o ./online_db -v 3 麻烦再执行一下,看下有问题的这表的输出是怎样的。

sseacher

(sseacher)

17

昨天没有注意到一点:取最大id和最小id的没有直接使用主键,而是使用了一个辅助索引;这个表昨天才做的analyze table

QBin

(Bin)

18

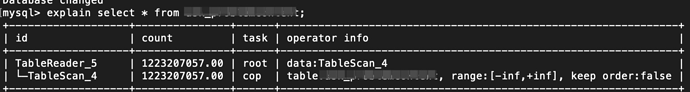

麻烦 explain select * from online_db 看下结果。

QBin

(Bin)

20

麻烦再给下 SELECT MIN(id),MAX(id) FROM online_db 的结果